Earlier articles covered how synchronization locks work. Implementing one comes down to multiple threads competing for a mutually exclusive variable: the thread that wins has acquired the lock, while threads that lose block and wait until the thread holding the lock releases it.

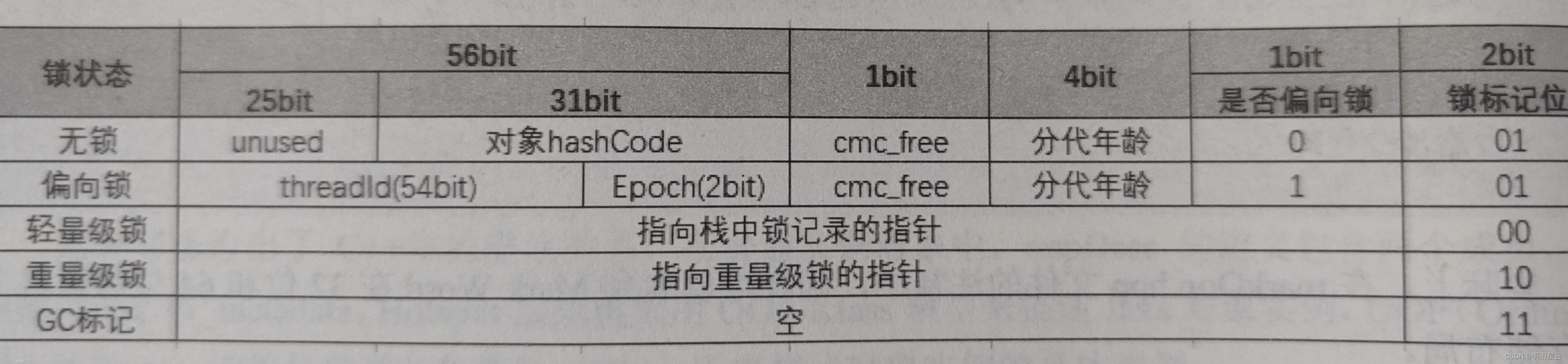

As shown in the figure, the Mark Word records several lock types: biased locks, lightweight locks, and heavyweight locks.

In fact, before JDK 1.6, synchronized only provided heavyweight locks. A heavyweight lock matches our earlier understanding of locks: a thread that fails to obtain the lock is blocked through the park method and is later woken up by the thread that held the lock. After waking up, it competes for the lock again until it succeeds.

Heavyweight locks are implemented on top of the Mutex Lock of the underlying operating system. Using a Mutex Lock requires suspending the current thread and switching from user mode to kernel mode, and the performance overhead of this switch is very large. How to strike a balance between performance and thread safety is therefore a topic worth discussing.

Starting with JDK 1.6, synchronized received many optimizations, including the addition of biased locks and lightweight locks. The core design idea behind both is to achieve thread safety without blocking threads.

Principle analysis of biased lock

A biased lock can be thought of as the locking scheme for the case where a synchronized block is accessed without any multi-thread competition, that is, when only a single thread executes it.

Many readers may ask: if there is no thread competition, why lock at all? When writing a program, we add locks to guard against thread-safety risks, but whether competition actually occurs is not under our control; it depends on the application scenario. When there is no competition, using a heavyweight lock based on the operating-system-level Mutex Lock is unnecessary and obviously costly.

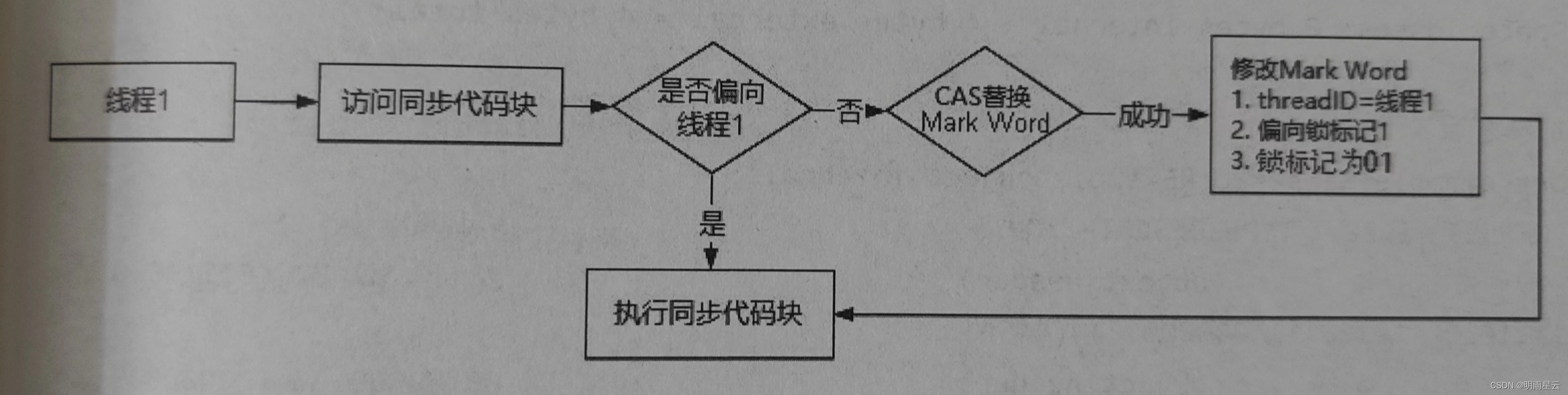

So the role of a biased lock is this: when a thread enters a synchronized block and there is no competition, it tries to acquire access through the biased lock. This acquisition is based on CAS. If it succeeds, the lock mark in the object header is modified directly: the biased-lock bit is set to 1, the lock flag is 01, and the ID of the thread that now owns the lock is stored. "Biased" means that once thread X has obtained the biased lock, any later entry by thread X into this synchronized code only needs to check whether the thread ID in the object header equals thread X. If it does, there is no need to compete for the lock again, and access is granted directly. The implementation principle is shown in the figure.
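The fast path described above can be illustrated with a small user-level sketch. This is not how HotSpot implements biased locking; the class `BiasedLockSketch`, the field `biasedOwner`, and the method `tryEnter` are hypothetical names, and an `AtomicReference<Thread>` merely stands in for the thread-ID field of the Mark Word.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical model of the biased-lock fast path; not the JVM's real implementation.
class BiasedLockSketch {
    // Stands in for the thread-ID field of the Mark Word; null means "biasable, not yet biased".
    private final AtomicReference<Thread> biasedOwner = new AtomicReference<>();

    /** Returns true if the calling thread already holds, or has just acquired, the bias. */
    boolean tryEnter() {
        Thread current = Thread.currentThread();
        // Fast path: the bias already points at this thread, so only a comparison is needed.
        if (biasedOwner.get() == current) {
            return true;
        }
        // First acquisition: a single CAS installs this thread as the biased owner.
        return biasedOwner.compareAndSet(null, current);
    }

    public static void main(String[] args) throws InterruptedException {
        BiasedLockSketch lock = new BiasedLockSketch();
        System.out.println(lock.tryEnter()); // true: first entry wins the CAS
        System.out.println(lock.tryEnter()); // true: re-entry only compares the owner
        boolean[] other = new boolean[1];
        Thread t = new Thread(() -> other[0] = lock.tryEnter());
        t.start();
        t.join();
        System.out.println(other[0]);        // false: another thread cannot take the bias
    }
}
```

In the sketch a second thread simply fails; in the real JVM that situation is where the lock leaves the biased state.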

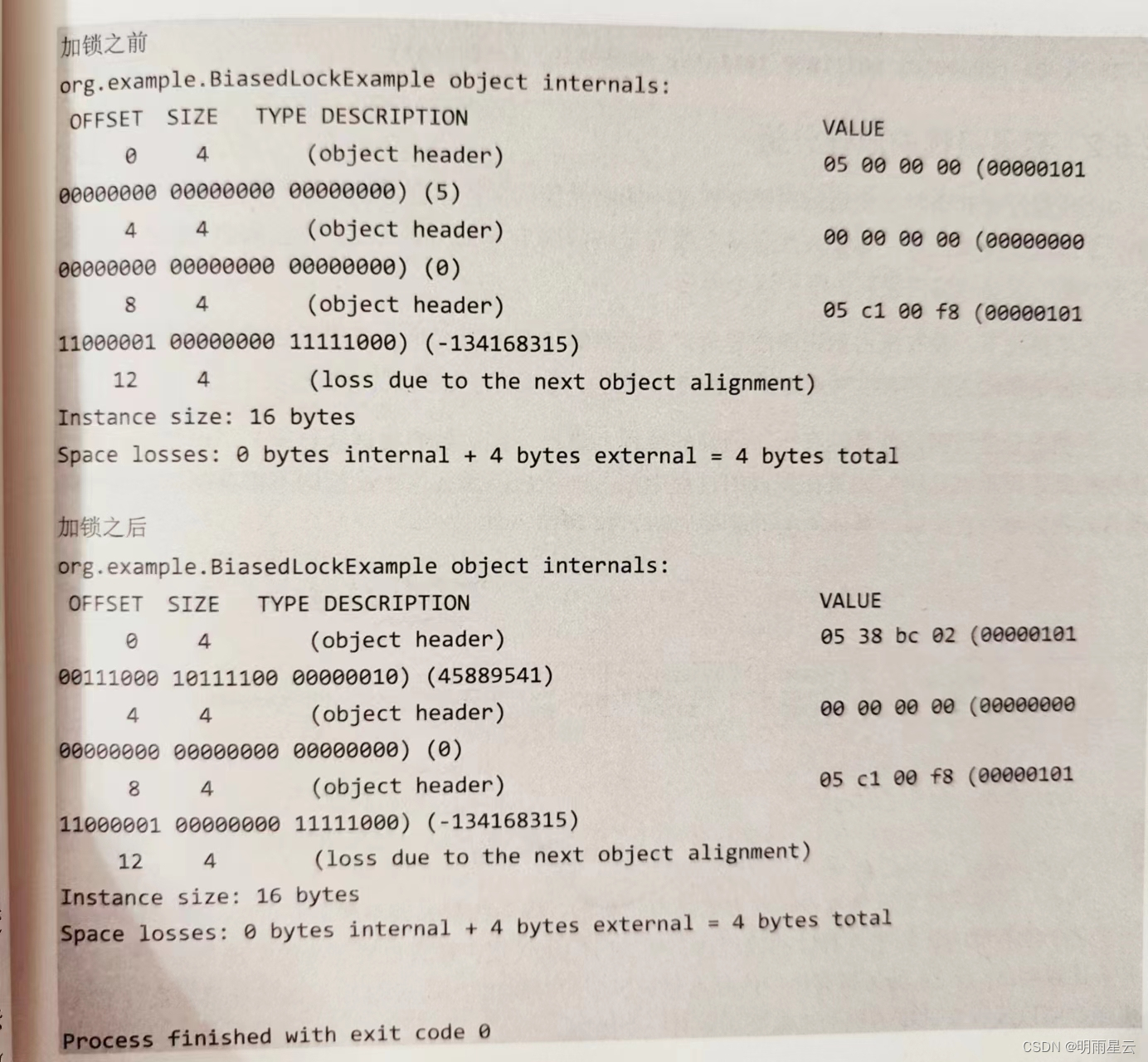

Combining the previous description of the object header part and the principle of biased locking, let’s take a look at the implementation of biased locking through an example.

    import org.openjdk.jol.info.ClassLayout; // JOL (Java Object Layout) library

    public class BiasedLockExample {
        public static void main(String[] args) {
            BiasedLockExample example = new BiasedLockExample();
            // Print the object header before the lock is taken
            System.out.println("Before locking");
            System.out.println(ClassLayout.parseInstance(example).toPrintable());
            synchronized (example) {
                // Print the object header while the lock is held
                System.out.println("After locking");
                System.out.println(ClassLayout.parseInstance(example).toPrintable());
            }
        }
    }

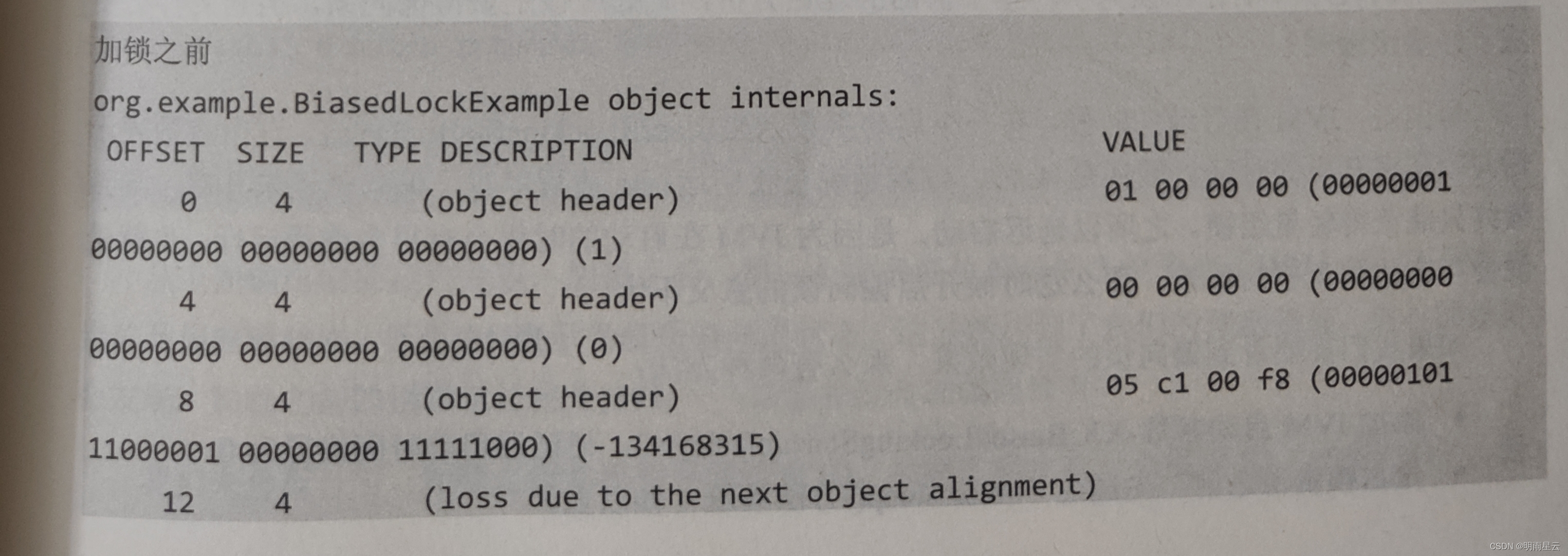

In the above code, BiasedLockExample demonstrates the process of printing the memory layout of the object before and after locking the lock object example. Let’s take a look at the output results.

From the above output results we find:

- Before locking, the first byte of the object header is 00000001. Its last three bits are [001], and the low two bits, the lock flag, are [01], which indicates the lock-free state.

- After locking, the first byte of the object header is 01111000. Its last three bits are [000], and the low two bits are [00]. According to the Mark Word layout introduced earlier, this indicates the lightweight-lock state.
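The reading of these bits can be written down as a small helper. This is only a sketch: the class `MarkWordBits` and the method `lockState` are hypothetical names, and the mapping assumes the HotSpot Mark Word layout discussed in this article (flag 01 with bias bit 0 is lock-free, 01 with bias bit 1 is biased or biasable, 00 is lightweight, 10 is heavyweight, 11 is the GC mark).

```java
// Hypothetical helper that interprets the low three bits of the first header byte.
class MarkWordBits {
    /** Maps the low three bits of the first header byte to a lock state name. */
    static String lockState(int firstByte) {
        int lockFlag = firstByte & 0b11;             // low two bits: lock flag
        boolean biasBit = (firstByte & 0b100) != 0;  // third bit: biased-lock bit
        switch (lockFlag) {
            case 0b01: return biasBit ? "biased or biasable" : "lock-free";
            case 0b00: return "lightweight";
            case 0b10: return "heavyweight";
            default:   return "GC mark";
        }
    }

    public static void main(String[] args) {
        System.out.println(lockState(0b00000001)); // lock-free
        System.out.println(lockState(0b01111000)); // lightweight
        System.out.println(lockState(0b00000101)); // biased or biasable
    }
}
```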

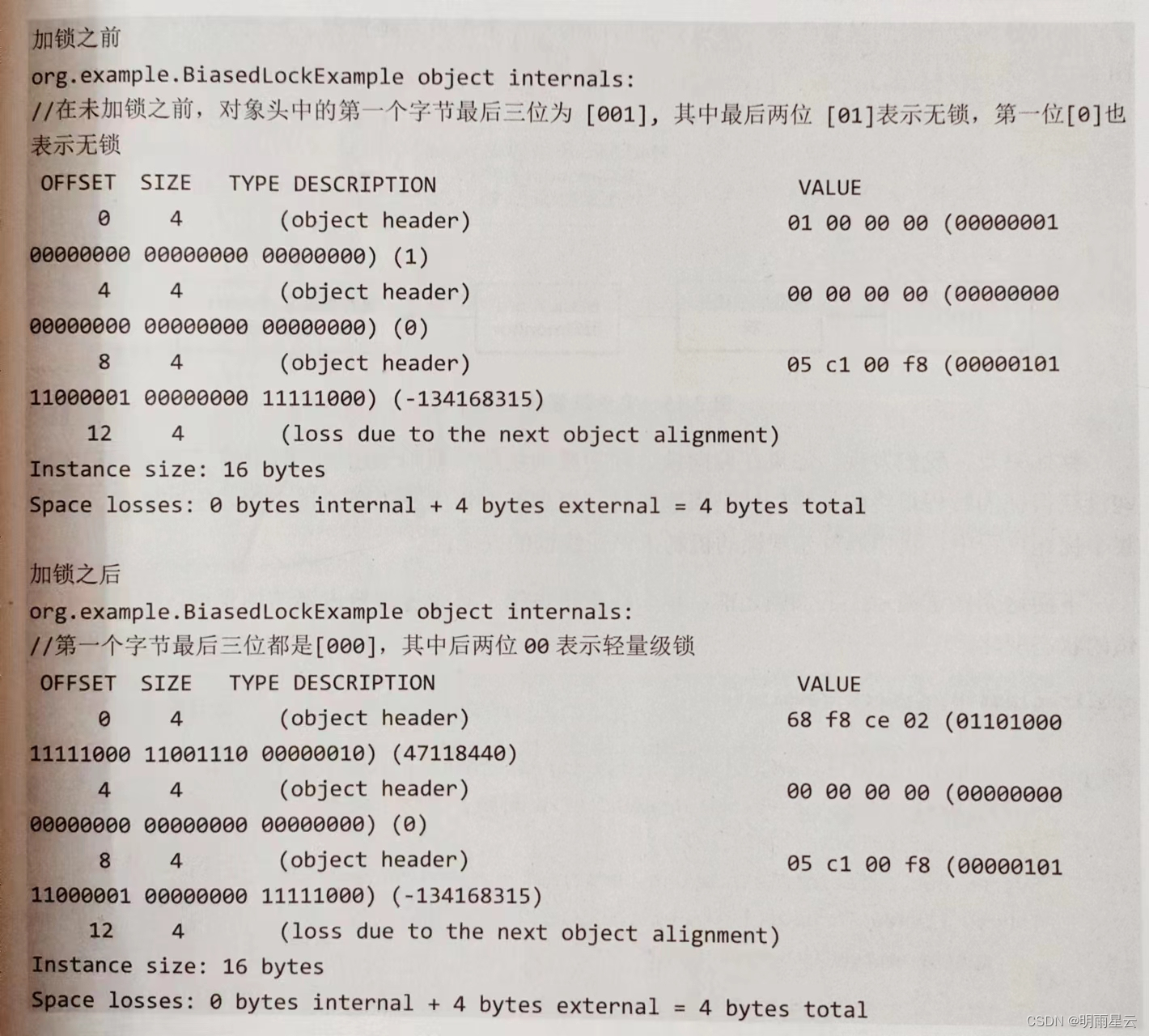

There is no lock competition in the current program. Based on the previous theoretical analysis, a biased lock should be obtained here, but why does it become a lightweight lock?

The reason is the JVM startup parameter -XX:BiasedLockingStartupDelay, which sets how long the JVM waits before enabling biased locking. The default is 4 seconds, so when the program above runs, biased locking has not yet been enabled and only a lightweight lock can be obtained. The delay exists because many threads run while the JVM starts up, which means there will be thread competition, so enabling biased locks at that point makes little sense.

If we need to see the implementation effect of biased locking, there are two methods:

- Add the JVM startup parameter -XX:BiasedLockingStartupDelay=0 and set the delayed startup time to 0.

- Before acquiring the lock, sleep for more than 4 seconds with the Thread.sleep() method.

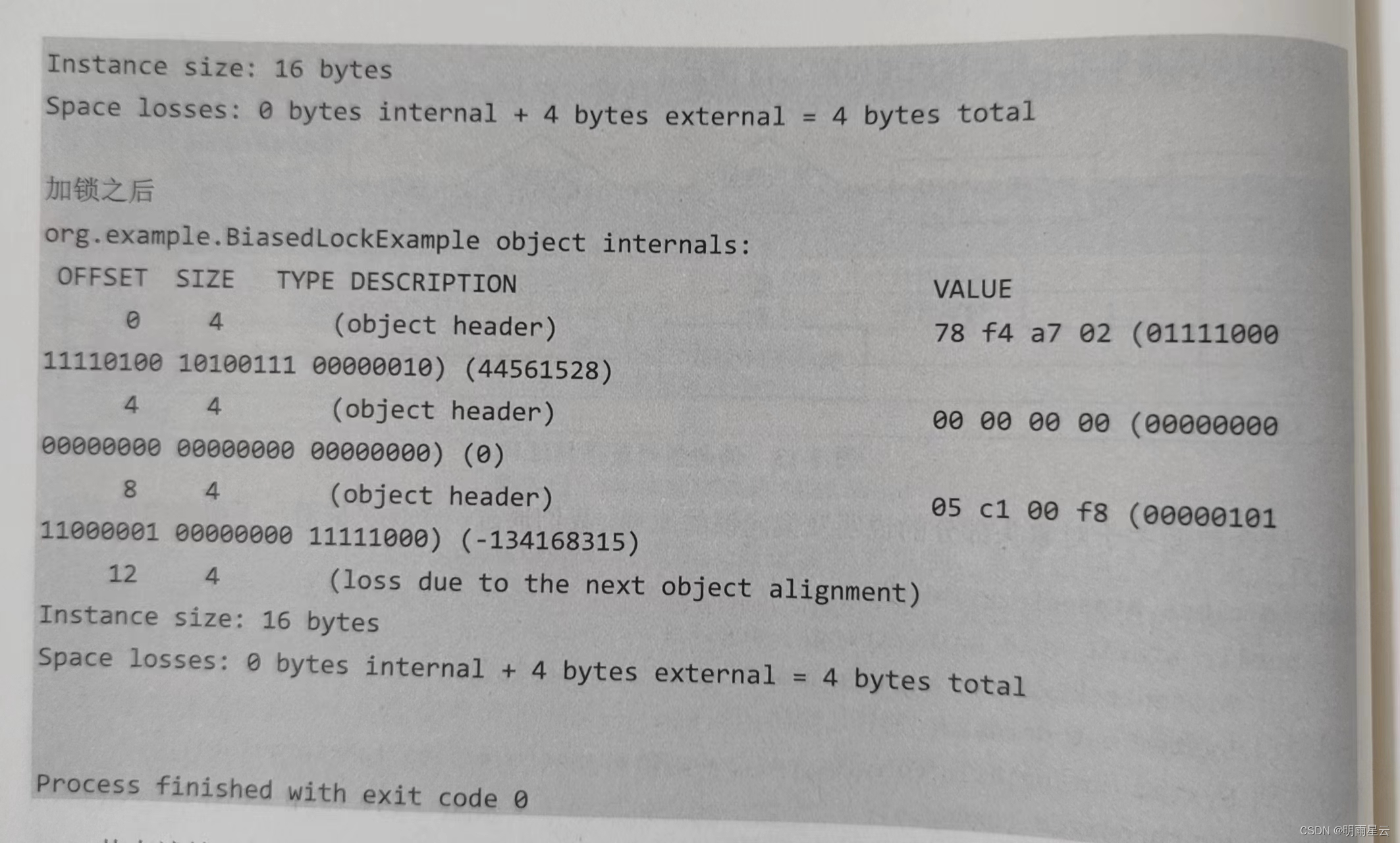

Finally, the following output results are obtained.

From the output above, we find that after locking, the low three bits of the first byte become [101]. The high bit [1] is the biased-lock bit, and the low two bits [01] are the lock flag; together they indicate the biased-lock state, which is the effect we wanted. Careful readers will notice that the lock mark before locking is also [101], even though no biased lock has been taken at that point. Why?

Let's analyze it. Before locking, no thread ID is stored in the header; after locking, one is present (the printed value becomes 45889541). So before a biased lock is acquired, the [101] mark means that the object is biasable, not that it is already biased.

05 00 00 00 (00000101 000000000000000000000000) (5)

05 38 bc 02 (00000101 001110001011110000000010) (45889541)
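To see how the hex bytes relate to the decimal value in parentheses, the four bytes can be combined lowest-order byte first. This is a minimal sketch that assumes a little-endian platform; `HeaderBytes` and `littleEndianInt` are hypothetical names, not part of JOL.

```java
// Hypothetical helper: rebuilds the printed decimal value from the four header bytes.
class HeaderBytes {
    /** Combines four bytes, lowest-order byte first, into one int. */
    static int littleEndianInt(int b0, int b1, int b2, int b3) {
        return (b0 & 0xFF) | ((b1 & 0xFF) << 8) | ((b2 & 0xFF) << 16) | ((b3 & 0xFF) << 24);
    }

    public static void main(String[] args) {
        int before = littleEndianInt(0x05, 0x00, 0x00, 0x00);
        int after = littleEndianInt(0x05, 0x38, 0xbc, 0x02);
        System.out.println(before);                               // 5
        System.out.println(after);                                // 45889541
        // The low three bits are identical in both cases: 101
        System.out.println(Integer.toBinaryString(after & 0b111)); // 101
    }
}
```

Both values share the low bits [101]; only the remaining bits differ once a thread has been recorded.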

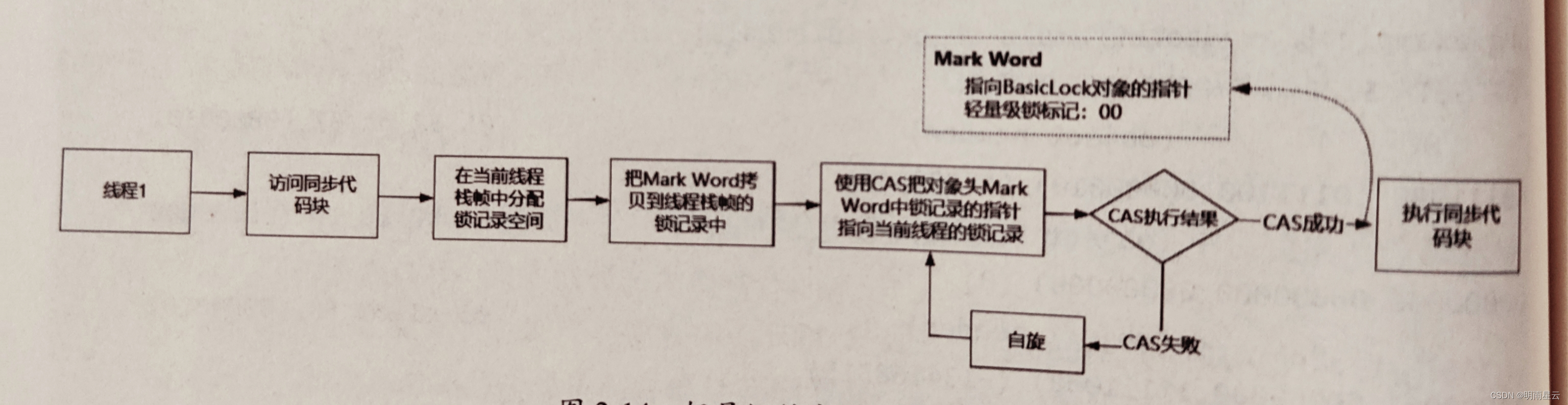

Principle analysis of lightweight locks

When there is no competition among threads, a biased lock lets a thread obtain the lock without hurting performance. However, only one thread can hold the lock at a time. If multiple threads suddenly access the synchronized method, some of them will fail to acquire the lock. What should those threads do? Biased locking clearly cannot solve this problem.

Normally, a thread that fails to acquire the lock must block and wait to be woken up, which is the heavyweight-lock logic. But is there a better-balanced solution to try before that? This is where the lightweight lock design comes in.

With a lightweight lock, a thread that fails to acquire the lock retries (spins) a certain number of times. If the thread does not get the lock on its first attempt, it tries again several times, and if it gets the lock during one of those retries, it never needs to block. This approach is called a spin lock. The process is shown in the figure.

Of course, there is a cost for threads to seize locks by retrying, because if the thread keeps spinning and retrying, the CPU will always be running. If the thread holding the lock holds the lock for a short time, the performance improvement brought by the implementation of spin waiting will be obvious. On the contrary, if the thread holding the lock occupies the lock resource for a long time, the spinning thread will waste CPU resources, so there must be a limit on the number of times a thread can retry to seize the lock.

The default number of spins in JDK 1.6 is 10, and it can be adjusted through the -XX:PreBlockSpin parameter. JDK 1.6 also optimized the spin lock by introducing adaptive spinning. With adaptive spinning the number of spins is not fixed; it is determined by the previous spin attempts on the same lock and by the state of the lock holder. If a spin wait on a lock object recently succeeded and the thread holding the lock is running, the JVM assumes that spinning is likely to succeed again and allows the thread to spin for relatively longer. Conversely, if spinning on a lock object rarely succeeds, the JVM reduces the number of spins.
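The idea of a bounded retry can be sketched at user level with a CAS loop. This is an illustration only, not the JVM's internal spin logic: `BoundedSpinLock`, `tryLock`, and `maxSpins` are hypothetical names, the lock is not reentrant, and the spin count is a fixed argument rather than an adaptive one.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical, non-reentrant spin lock with a fixed retry budget.
class BoundedSpinLock {
    private final AtomicBoolean locked = new AtomicBoolean(false);

    /** Tries to take the lock, retrying the CAS at most maxSpins times. */
    boolean tryLock(int maxSpins) {
        for (int i = 0; i < maxSpins; i++) {
            if (locked.compareAndSet(false, true)) {
                return true;       // acquired without blocking
            }
            Thread.onSpinWait();   // busy-wait hint to the CPU (Java 9+)
        }
        return false;              // spin budget exhausted; the caller must fall back
    }

    void unlock() {
        locked.set(false);
    }

    public static void main(String[] args) {
        BoundedSpinLock lock = new BoundedSpinLock();
        System.out.println(lock.tryLock(10)); // true
        System.out.println(lock.tryLock(10)); // false: still held after 10 attempts
        lock.unlock();
        System.out.println(lock.tryLock(10)); // true
    }
}
```

Returning false after the budget is used up corresponds to the point where a real lock would stop spinning and block instead.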

The lightweight lock was already demonstrated above: when the biased-lock startup delay is left at its default, the lock state obtained by that example is a lightweight lock.

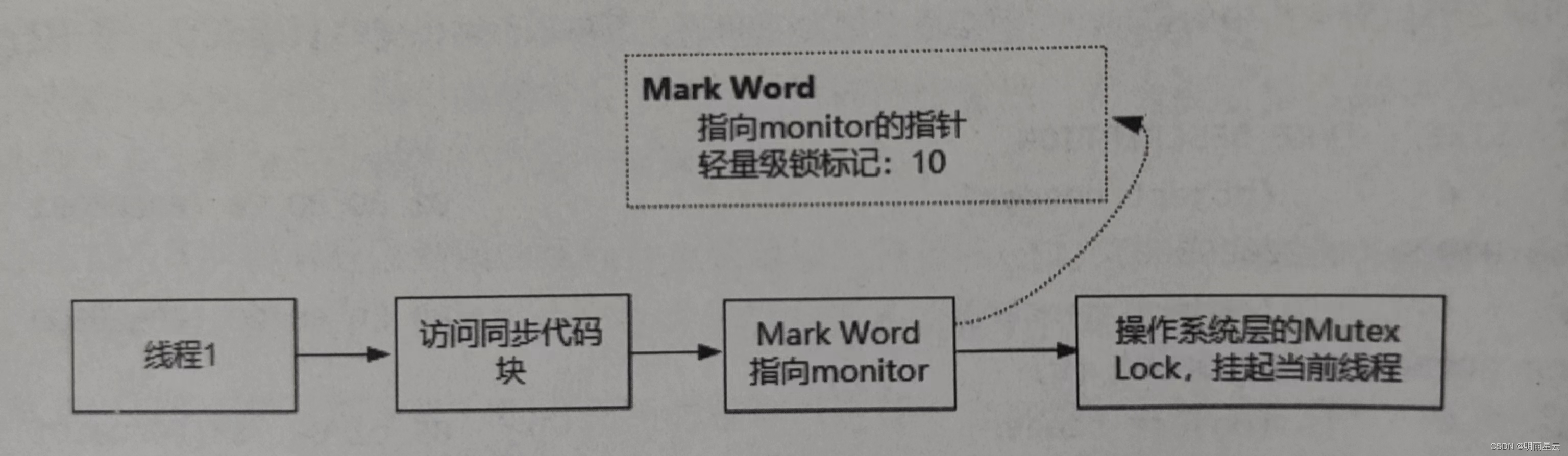

Principle analysis of heavyweight locks

Lightweight locks give threads that failed to obtain the lock a chance to acquire it through a limited number of retries, but they only improve synchronization performance when the lock is held for a short time. If the thread holding the lock keeps it for a long time, threads that failed to acquire it cannot be allowed to keep spinning; they would consume too much CPU, and the loss would outweigh the gain.

If the thread that has not preempted the lock resource finds that it still has not obtained the lock after a certain number of spins, it can only block and wait, so it will eventually be upgraded to a heavyweight lock and preempt the lock resource through a system-level mutex. The implementation principle of heavyweight lock is shown in the figure.
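The block-and-wake behavior can be sketched with `LockSupport.park` and `LockSupport.unpark`, following the first-in-first-out mutex pattern shown in the `LockSupport` documentation. This is a user-level illustration, not the JVM's monitor implementation; `ParkingLock` and `countWithTwoThreads` are hypothetical names, and interrupt handling is omitted for brevity.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

// Hypothetical blocking lock: waiters queue up and park until the holder wakes them.
class ParkingLock {
    private final AtomicBoolean locked = new AtomicBoolean(false);
    private final Queue<Thread> waiters = new ConcurrentLinkedQueue<>();

    void lock() {
        Thread current = Thread.currentThread();
        waiters.add(current);
        // Block while this thread is not first in line or the CAS fails.
        while (waiters.peek() != current || !locked.compareAndSet(false, true)) {
            LockSupport.park(this); // may return spuriously, hence the loop
        }
        waiters.remove();           // this thread is the head and now owns the lock
    }

    void unlock() {
        locked.set(false);
        LockSupport.unpark(waiters.peek()); // wake the next waiter; no-op if none
    }

    /** Two threads each increment a shared counter perThread times under the lock. */
    static int countWithTwoThreads(int perThread) {
        ParkingLock lock = new ParkingLock();
        int[] counter = new int[1];
        Runnable work = () -> {
            for (int i = 0; i < perThread; i++) {
                lock.lock();
                try {
                    counter[0]++;
                } finally {
                    lock.unlock();
                }
            }
        };
        Thread a = new Thread(work);
        Thread b = new Thread(work);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            throw new IllegalStateException(e);
        }
        return counter[0];
    }

    public static void main(String[] args) {
        System.out.println(countWithTwoThreads(10000)); // 20000
    }
}
```

A parked thread consumes no CPU while it waits, which is the trade-off against spinning: no wasted cycles, at the price of suspending and resuming the thread.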

Overall, we find that if threads cannot obtain the lock through biased or lightweight locking, they still end up blocking and waiting until the thread holding the lock releases it and wakes them up. Throughout this optimization process, thread safety is first attempted through an optimistic locking mechanism.

The following example demonstrates how the lock state in the object header changes in three situations: before locking, when a single thread acquires the lock, and when multiple threads compete for it.

    import org.openjdk.jol.info.ClassLayout; // JOL (Java Object Layout) library
    import java.util.concurrent.TimeUnit;

    public class HeavyLockExample {
        public static void main(String[] args) throws InterruptedException {
            HeavyLockExample heavy = new HeavyLockExample();
            System.out.println("before locking");
            System.out.println(ClassLayout.parseInstance(heavy).toPrintable());
            Thread t1 = new Thread(() -> {
                synchronized (heavy) {
                    try {
                        // Hold the lock long enough for the main thread to contend for it
                        TimeUnit.SECONDS.sleep(2);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                }
            });
            t1.start();
            // Make sure that the t1 thread is already running
            TimeUnit.MILLISECONDS.sleep(500);
            System.out.println("t1 thread has seized the lock");
            System.out.println(ClassLayout.parseInstance(heavy).toPrintable());
            synchronized (heavy) {
                System.out.println("main thread to seize the lock");
                System.out.println(ClassLayout.parseInstance(heavy).toPrintable());
            }
        }
    }

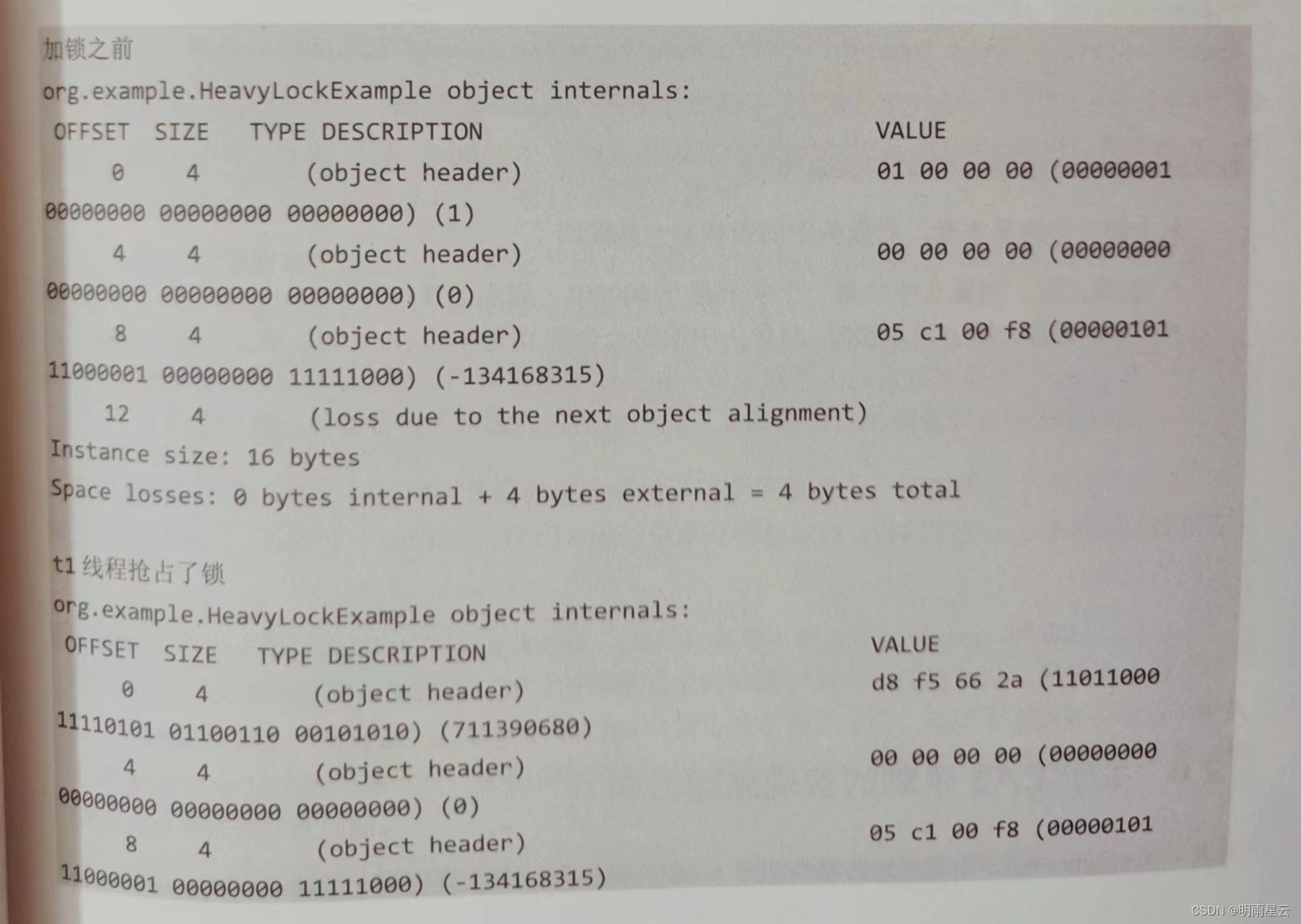

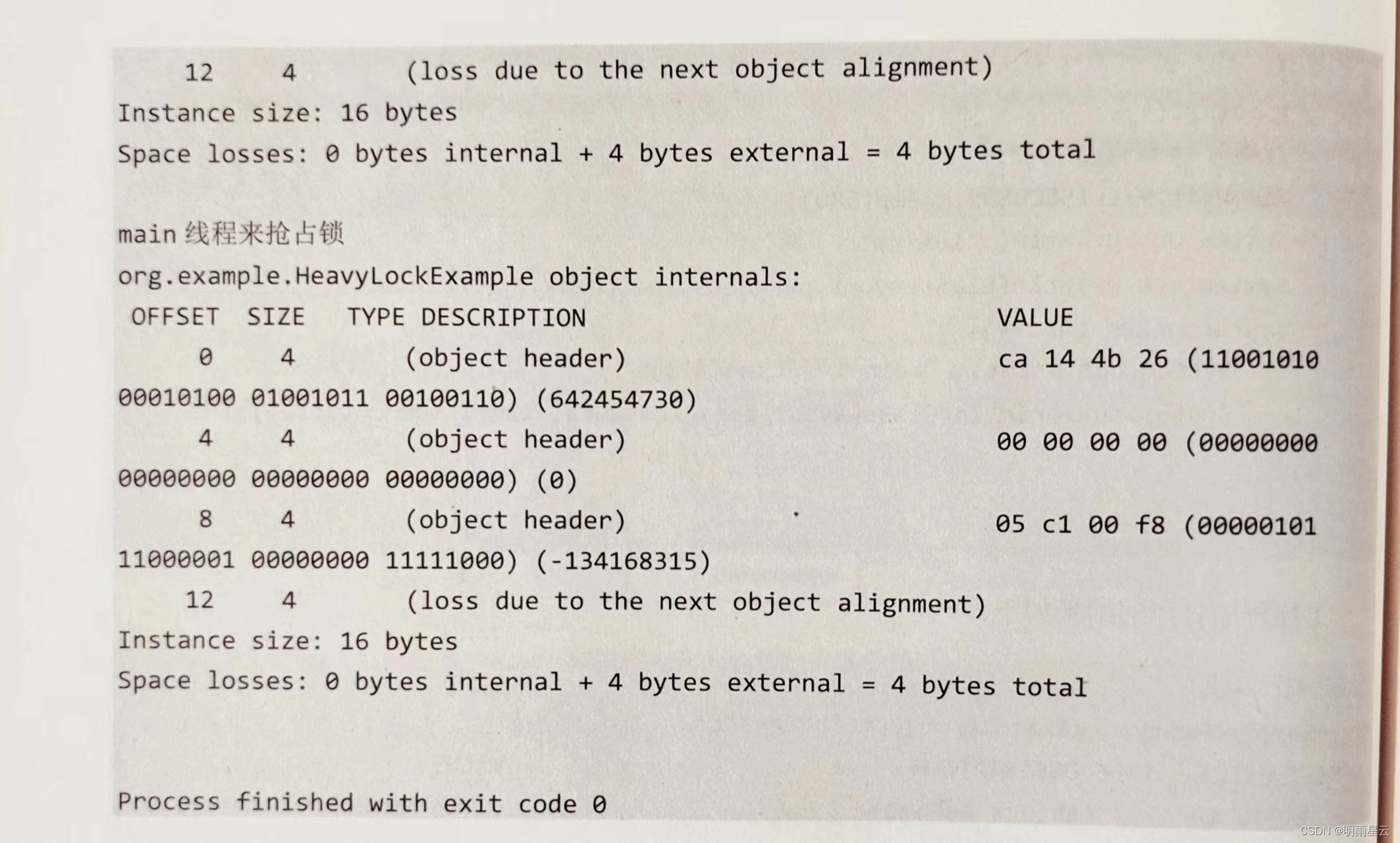

The results printed by the above program are as follows:

Judging from the printed results, the lock state in the object header goes through three states.

- Before locking, the first byte of the object header is 00000001, indicating the lock-free state.

- When the t1 thread acquires the synchronization lock, the first byte of the object header becomes 11011000, indicating the lightweight-lock state.

- Then the main thread tries to acquire the same object lock. Because t1 sleeps for 2 seconds and has not yet released the lock, the main thread cannot obtain it by spinning on the lightweight lock, so the lock becomes a heavyweight lock, with lock flag 10.

Note that biased locking was not enabled in this demonstration. If it were enabled, the state after the first lock acquisition would be a biased lock, which would then go directly to a heavyweight lock (because the t1 thread sleeps while holding the lock, a lightweight lock cannot be obtained).

As this shows, the underlying locking mechanism of synchronized is escalated step by step at the JVM level according to how much the threads compete, balancing the performance and the safety of the synchronization lock. This process requires no intervention from the developer.
