deadlock

It refers to the phenomenon that two or more processes are in a waiting state because they hold each other’s required resources, which causes the program to stop running. Simply put, in a system, if a circular dependency is formed between processes, then a deadlock will occur.

All the codes in this article are implemented in Go. Readers of other languages can use the idea of reading pseudocode to read the code. The main purpose is to clarify the main logic without getting too caught up in the details.

Four necessary conditions

-

Mutual exclusion: Only one process can occupy a resource at a time, and other processes must wait if they want to access the resource

-

Possession wait: The process has held at least one resource and is waiting for another resource. This means that when a process is blocked, it is still holding at least one resource

-

Non-preemptible: The resource cannot be forcibly released, only actively released by the process that occupies it

-

Circular wait: There is a wait circular queue in which each process is waiting for a resource held by the next process

Example code

Deadlock should be the most common “lock exception” scenario encountered in daily development, such as reading and writing to the same channel within a goroutine at the same time:

package main

func main() {

ch := make(chan bool)

ch <- true

<-ch

close(ch)

}

For more scenarios that may cause deadlock, please refer to the previous article [1].

Deadlock handling method

Mainly divided into passive strategy and active strategy.

Passive strategy

Ostrich Strategy

Like an ostrich, bury your head in the sand and pretend nothing happened.

Because the cost of solving the deadlock problem is very high, the ostrich strategy, which does not take task measures, will actually achieve higher performance. When a deadlock does not cause much impact on users, or the probability of a deadlock is very low, the ostrich strategy can be used. Most operating systems, including Unix, Linux, and Windows, deal with the deadlock problem simply by ignoring it.

Active strategy

Deadlock prevention

For the four necessary conditions for deadlock, we can reverse the operation, as long as the four necessary conditions do not occur at the same time, the occurrence of deadlock can be prevented.

1. Destruction of mutual exclusion conditions

Multiple threads are allowed to occupy certain resources at the same time, but in scenarios with mutual exclusion restrictions, resources often need to be monopolized, such as printers and global counters. Problem and solution form a paradox, so the solution is not practical.

2. Destruction Possession Waiting

Implement the resource pre-allocation strategy, and the thread applies to the system for all the resources it needs before running. If all the resources required by a thread cannot be met, no resources are allocated and the thread does not run. When the system can satisfy all the resource requests of the thread, it will allocate all the requested resources to the thread at one time. At this time, the thread has all the resources it needs to run, so there will be no phenomenon of occupying resources and applying for waiting resources at the same time, avoiding the occurrence of deadlock.

3. Destruction cannot be preempted

Threads are allowed to preempt resources. When a thread applies for a new resource but cannot be satisfied immediately, it must release all the resources it occupies, and then re-apply. The resources released by it can be allocated to other threads, which is equivalent to the implicit preemption of the resources occupied by the thread. This method is difficult to implement and will reduce system performance.

4. Breaking the circular wait

Implement orderly allocation of resources, classify, number, and allocate all resources first, so that threads will not form a circular wait when applying for resources. All threads’ requests for resources must be allocated strictly in the order of resource numbers, so that there will be no circular waiting for resources between multiple threads (because each thread can obtain all the resources required for execution), thus preventing deadlocks.

Avoid deadlock

When the program is executed, determine whether there is a deadlock by converting various operations into safe serialized operations, and take preventive measures when it is judged that a deadlock may occur to avoid the occurrence of a deadlock.

Security Status

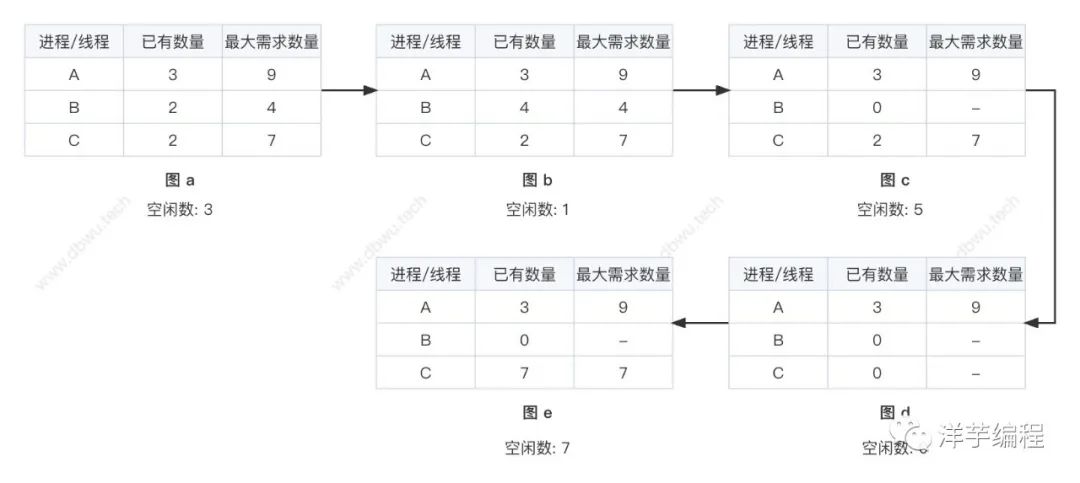

As shown in the figure, the second column of each table indicates the number of resources owned by the thread, the third column indicates the number of all resources required by the thread, and “idle number” indicates the number of resources currently available.

The specific resource allocation process is as follows:

-

In the initial state there are 3 allocatable resources;

-

Assign 2 resources to B, so that B has all the resources needed for execution, and the remaining resources are 3 – 2 = 1;

-

After the execution of B, all its resources are released, and the remaining resources at this time are 1 + 4 = 5;

-

Allocate 5 resources to C, so that C has all the resources needed for execution, and the remaining resources are 5 – 5 = 0;

-

After the execution of C, all its resources are released, and the remaining resources are 0 + 7 = 7;

-

Assign 7 resources to A, let A execute, and the program ends;

In step 4 of the above execution process, if resources are not allocated to C but to A, then the safe state cannot be guaranteed and a deadlock will occur. Interested readers can change the table data to verify the result.

Banker’s Algorithm

A banker in a small town promises a certain loan amount to a group of customers. What the algorithm needs to do is:

Judging whether the loan request will enter an unsafe state after the loan request is passed, if it will not enter an unsafe state, agree to the loan request, otherwise reject the loan request.

The specific resource allocation process is as follows:

-

In the initial state there are 10 allocatable resources;

-

After allocating resources to A, B, C, D, the remaining resources are 10 – 8 = 2;

-

The only option not to go into an unsafe state at this point is to allocate the remaining 2 resources to C ;

-

If assigned to other processes/threads, it will enter an unsafe state and cause deadlock;

-

The banker’s algorithm must be able to correctly detect whether continuing allocation will cause a deadlock, and reject the allocation in advance (the process from Figure b to Figure c);

In the real world, few operating systems use the banker’s algorithm to avoid deadlocks, because few processes know the maximum amount of resources they need to run before they run, and the number of processes is not fixed and is constantly changing. In addition, resources may also become unavailable (for example, printers are offline, disks are damaged, etc.).

Detect deadlock

When the program is executed, the occurrence of deadlock is detected by periodically scanning the resource allocation, and corresponding measures are taken to eliminate the deadlock.

Do not try to prevent deadlocks, but should take steps to recover from deadlocks when they are detected.

1. Deadlock detection for one resource of each type

In the resource allocation shown in the figure, the box represents the resource, the circle represents the process, resource pointing to the process means that the resource has been allocated to the process, and the process pointing to the resource means the process requests to obtain the resource.

Graph b is a cycle graph stripped from graph a, which satisfies the cycle in the deadlock condition, so a deadlock will occur.

Overview of detection algorithm: It is realized by detecting whether there is a directed loop. Starting from a node, DFS (depth-first search) is performed, and the visited nodes are marked. If the marked nodes are visited, it means that there is a loop in the directed graph, that is, a deadlock is detected.

2. Deadlock detection for multiple resources of each type

## A B C D

## Tape Drive Plotter Scanner CD-ROM

E = ( 4 2 3 1 )

A = ( 2 1 0 0 )

_ _

| 0 0 1 0 |

C = | 2 0 0 1 |

| 0 1 2 0 |

- -

_ _

| 2 0 0 1 |

R = | 1 0 1 0 |

| 2 1 0 0 |

- -

In the above code example, there are 3 processes and 4 resources:

-

E vector: Total resources

-

A vector: resource remaining

-

C matrix: the number of resources owned by each process, each row represents the number of resources owned by a process

-

R matrix: number of resources requested by each process

Through the statistics of processes and resources, the current system status can be obtained:

-

The resources requested by processes P1 and P2 cannot be satisfied (scanner and CD-ROM have no remaining resources);

-

The resources requested by process P3 can be fully satisfied;

-

Let the process P3 execute first, and release all its resources after completion;

-

At this time, resource A = (2 2 2 0), process P2 can execute, and release all its resources after completion;

-

At this time resource A = (4 2 2 1), process P1 can execute;

-

All processes are executed and completed without deadlocks;

Overview of the detection algorithm:

Each process is not marked initially, and may be marked during execution. When the algorithm ends, any process that is not marked indicates that a deadlock has occurred internally.

-

Find an unmarked process Pi whose requested resource is less than or equal to A;

-

If the corresponding process is found, add the i-th row vector of matrix C to A, mark the process, and return to the first step to continue execution;

-

If no corresponding process is found, the algorithm execution ends;

Release deadlock

When a deadlock occurs, methods such as killing the process, preempting resources, and rolling back can be used to remove the deadlock.

livelock

Refers to the phenomenon that the thread is unable to obtain the required resources and keeps retrying. Unlike deadlocks, threads in livelocks will not be blocked. They will keep trying to acquire resources, but they will always fail, eventually causing the program to fail to execute normally.

To give a small example in life, if two people cross the road towards each other, if they both yield to one side at the same time, then both of them will be unable to pass, and then they will both move to the other side at the same time, and they will still be unable to pass. If the two people have been moving synchronously without interference from other factors, then the end result is that neither of them has moved forward, resulting in a livelock (the word is very vivid, both of them are locked alive by the object).

How to deal with it

1. Introduce randomness

Introducing a certain degree of randomness in the code can avoid livelocks. For example, in the process of retrying, introduce random sleep time to break the infinite loop, so that threads have the opportunity to release resources and reacquire resources.

2. Introduce system timestamp

By comparing the system time stamps to determine whether the thread needs to continue waiting, because the time stamps (system clocks) obtained by multiple threads cannot be completely consistent, priority can be sorted on the basis of the time stamps, and finally the thread order is scheduled through the sorting.

Example code

The small example just now is converted into code as follows:

package main

import (

"fmt"

"sync"

"sync/atomic"

"time"

)

var (

// Use a mutex semaphore to synchronize

cond = sync. NewCond( & sync. Mutex{})

// Counters representing the left and right directions respectively (the default value is 0)

// That is to say, when two people meet, they will move left or right in order to make way for each other

leftCnt, rightCnt int32

)

// Semaphore lock operation

// Two people must lock when moving direction

func takeStep() {

cond.L.Lock()

cond. Wait()

cond. L. Unlock()

}

// direction to move

func move(name, dir string, cnt *int32) bool {

fmt.Printf("%s went to %v\

", name, dir)

// current direction counter + 1

atomic. AddInt32(cnt, 1)

takeStep()

// If the current counter has only been modified by one person

// Indicates that the person has moved the direction, but the other party has not moved. At this time, you can let the other party go first, and the program can return directly

if atomic. LoadInt32(cnt) == 1 {

// because of livelock

// so the code never gets executed here

fmt.Printf("%s gave way to the opponent successfully \

", name)

return true

}

takeStep()

// current direction counter - 1

atomic. AddInt32(cnt, -1)

return false

}

func giveWay(name string) {

fmt.Printf("%s is trying to give way to the opponent... \

", name)

// Simulate three times the two sides give way to each other

for i := 0; i < 3; i ++ {

if move(name, "left", & amp;leftCnt) || move(name, "right", & amp;rightCnt) {

return

}

}

fmt.Printf("%v said helplessly: We can stop giving way to each other, you pass first!\

", name)

}

func main() {

go func() {

// Notify after 1 millisecond, release the lock

for range time.Tick(1 * time.Millisecond) {

cond. Broadcast()

}

}()

var wg sync.WaitGroup

// Simulate two people

// Xiaoming and Xiaohong

wg. Add(2)

go func() {

defer wg. Done()

giveWay("Xiao Ming")

}()

go func() {

defer wg. Done()

giveWay("Xiaohong")

}()

wg. Wait()

}

Run the above code, you can see that the output result is consistent with the described livelock generation process (the author added some dividing lines to assist reading):

# go run main.go # Your output may not be exactly the same as here, but the logic is consistent # Both sides give way for the first time Xiaohong, try to make way for the other party... Xiaohong went to the left Xiao Ming, try to make way for the other party... Xiao Ming walked to the left -------------------------------- Xiaohong went to the right Xiao Ming walked to the right -------------------------------- # Both sides give way for the second time Xiaohong went to the left Xiao Ming walked to the left Xiaohong went to the right Xiao Ming walked to the right -------------------------------- # Both sides give way for the third time Xiaohong went to the left Xiao Ming walked to the left Xiaohong went to the right Xiao Ming walked to the right -------------------------------- # Both sides completed the third give way Xiaohong said helplessly: We can stop giving way to each other, you go first! Xiao Ming said helplessly: We can stop giving way to each other, you go first!

spin lock

It is an implementation of mutual exclusion lock. When a thread tries to acquire a lock, if it finds that the lock is already occupied by other threads, it will repeatedly try to acquire the lock instead of giving up control of the CPU. This process is called spinning, which can effectively reduce the overhead of thread switching and improve the performance of locks. The spin lock also avoids the scheduling overhead of the process context, so it is effective for thread blocking scenarios in a short period of time.

Example code

The simplest implementation is to continuously try to acquire the lock directly in the loop, the code is as follows:

package main

import "sync"

func main() {

mu := sync.Mutex{}

for !mu. TryLock() {

// After acquiring the lock

// do something

mu. Unlock()

}

}

Of course, the performance of this simple code is really worrying, we can optimize it:

package main

import (

"fmt"

"sync"

"sync/atomic"

)

type spinLock uint32

// Acquire the spin lock

func (sl *spinLock) lock() {

for !atomic.CompareAndSwapUint32((*uint32)(sl), 0, 1) {

// Get the spin lock

}

}

// Release the spin lock

func (sl *spinLock) unlock() {

atomic.StoreUint32((*uint32)(sl), 0)

}

func main() {

var sl spinLock

var wg sync.WaitGroup

for i := 0; i < 10; i ++ {

wg. Add(1)

go func(index int) {

defer wg. Done()

sl. lock()

fmt.Printf("index %d got spin lock\

", index)

sl. unlock()

}(i)

}

wg. Wait()

}

In addition, you can also learn about the practice in the standard library. For example, the spin lock implementation code in the standard library excerpted from [2] in the article “sync.Mutex Design and Implementation”:

// $GOROOT/src/runtime/proc.go

func sync_runtime_canSpin(i int) bool {

if i >= active_spin || ncpu <= 1 || gomaxprocs <= int32(sched.npidle + sched.nmspinning) + 1 {

return false

}

if p := getg().m.p.ptr(); !runqempty(p) {

return false

}

return true

}

Starvation

Starvation, also known as starvation, refers to a situation in which a process cannot execute because it cannot obtain the required resources and is always in a waiting state.

The difference between starvation and deadlock is that deadlock is caused by multiple processes/threads competing for resources, while starvation is caused by a process/thread being unable to obtain resources.

How to deal with it

1. Fair scheduling

Using a fair scheduling algorithm can ensure that each process/thread has the opportunity to obtain the required resources. Especially for CPU scheduling, first-come-first-served or time slice rotation can be used to avoid the situation that a certain process/thread has been occupying resources while other processes/threads cannot be executed.

2. Priority inversion

When a low-priority process/thread occupies the resources required by a high-priority process/thread, the high-priority process/thread will be forced to wait, which may cause it to starve to death. You can use the “priority inheritance” or “priority inversion” method to avoid this from happening.

-

Priority inheritance refers to raising the priority of low-priority processes/threads to the same level as high-priority processes/threads to ensure that they can normally obtain resources;

-

Priority inversion refers to setting the priority of low-priority processes/threads in the middle layer to be higher than the priority of the lowest-priority process/thread to prevent them from being blocked by the lowest-priority process/thread;

The role of priority inversion is to avoid problems that may arise from priority inheritance.

Here is a small example to illustrate this situation:

-

There are currently three processes T1, T2, and T3, T1 is a high-priority process, T2 is an intermediate process, and T3 is a low-priority process;

-

Both T1 and T3 need to access the same resource;

-

At the beginning, T1 obtained the resource, but during this period, T3 entered the running queue, and T2 was also scheduled for execution;

-

Since T1 has been occupying the resource, T2 cannot obtain the required resource and enters the waiting state;

The workaround for priority inversion is:

-

Before T2 is executed, raise the priority of T2 to the current priority of T3 (because T3 has entered the run queue at this time);

-

T2 can get executed even if T1 occupies resources needed by T2;

-

At the end of T2, its priority is restored to the original state to continue waiting for the resources it depends on;

3. Limit waiting time

When the waiting time of a process/thread reaches a certain threshold, the system can forcibly release the resources occupied by the process/thread to ensure that other processes/threads also have the opportunity to obtain these resources.

Example code

We can use two goroutine to simulate the starvation phenomenon:

-

The first goroutine takes up a lot of resources, so the code can be executed more times;

-

The second goroutine takes up very few resources, so the code is executed only a few times or not at all;

package main

import (

"fmt"

"sync"

"time"

)

const (

// The total execution time of a single goroutine

runtime = 1 * time. Second

)

func main() {

var wg sync.WaitGroup

wg. Add(2)

// goroutines that take up too many resources

go func() {

defer wg. Done()

count := 0

for begin := time.Now(); time.Since(begin) <= runtime; {

count ++

// The sleep time is 1 millisecond, the simulation takes up a lot of resources

time.Sleep(1 * time.Millisecond)

}

fmt.Printf("The goroutine that occupies too many resources has executed %d times\

", count)

}()

// goroutines that take up very little resources

go func() {

defer wg. Done()

count := 0

for begin := time.Now(); time.Since(begin) <= runtime; {

count ++

// The sleep time is 10 microseconds, the simulation takes up less resources

time. Sleep(1 * time. Nanosecond)

}

fmt.Printf("The goroutine occupying few resources has been executed %d times\

", count)

}()

wg. Wait()

}

Running the above code, we can see that the goroutine that takes up few resources gets resources (the number of execution times) is much lower than the goroutine that takes up too many resources.

# go run main.go 3,951,200 executions of light goroutines Excessive goroutines executed 997 times

Reference

-

Modern Operating Systems [3]

-

Concurrency in Go[4]

-

Deadlock[5]

-

Spinlock[6]

-

How to understand mutexes, conditional locks, read-write locks and spin locks? [7]

Link

[1]

This previous article: https://dbwu.tech/posts/golang_goroutine_leak/

[2]

sync.Mutex Design and Implementation: https://dbwu.tech/posts/golang_sync_mutex/

[3]

Modern OS: https://book.douban.com/subject/27096665/

[4]

Concurrency in Go: https://book.douban.com/subject/26994591/

[5]

Deadlock: https://en.wikipedia.org/wiki/Deadlock

[6]

Spinlock: https://en.wikipedia.org/wiki/Spinlock

[7]

How to understand mutexes, conditional locks, read-write locks and spin locks? : https://www.zhihu.com/question/66733477

If you want to learn more about Go, please scan the followingFollow the official account,Reply to the keyword [actual combat group] , you will have the opportunity to communicate with us in the group

Share, watch and likeGo