About the author: A MATLAB simulation developer who loves scientific research and works on self-cultivation and technical skill side by side. For cooperation on MATLAB projects, please send a private message.

Personal homepage: Matlab Research Studio

Personal credo: Investigate things to gain knowledge.


Content introduction

In today’s data-driven world, data classification is a crucial task. By classifying data, we can derive valuable information and insights from it to make more informed decisions. In the field of machine learning, there are many different algorithms and techniques used for data classification, including neural networks.

A neural network is a computational model loosely inspired by the brain's nervous system: it consists of many neurons that pass information to one another through weighted connections. Neural networks have achieved remarkable success in various fields, especially in image and speech recognition. However, traditional networks that are trained iteratively (for example, by backpropagation) need a large amount of computing resources and time, and they are prone to getting stuck in local optima.

To overcome these problems, the Extreme Learning Machine (ELM) was proposed. ELM is a single-hidden-layer feedforward neural network in which the weights and biases of the hidden layer are randomly initialized and then left unchanged, while the weights of the output layer are calculated in one step by the least squares method. Because no iterative tuning is involved, ELM trains much faster than traditional neural networks, and it is often reported to generalize well.
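To make the description concrete, here is a minimal sketch of ELM training on synthetic two-class data. It is not the blogger's code: the data, the variable names, the sigmoid activation, and the ranges used for the random weights and biases are all assumptions chosen for illustration.

```matlab
% Minimal ELM sketch (illustrative assumptions throughout).
% Synthetic data: two well-separated Gaussian blobs, 200 samples each.
N = 200;
X = [randn(N,2) + 3; randn(N,2) - 3];   % features, 400 x 2
y = [ones(N,1); 2*ones(N,1)];           % class labels 1 and 2
T = double(y == (1:2));                 % one-hot targets, 400 x 2

% Hidden layer: random weights and biases, never updated afterwards.
L = 20;                                 % number of hidden neurons (assumed)
W = 2*rand(size(X,2), L) - 1;           % input weights drawn from [-1, 1]
b = rand(1, L);                         % hidden biases drawn from [0, 1]
H = 1 ./ (1 + exp(-(X*W + b)));         % sigmoid hidden-layer output, 400 x L

% Output layer: least-squares solution via the pseudoinverse.
betaOut = pinv(H) * T;                  % output weights, L x 2

% Predict the class with the largest output and measure training accuracy.
[~, pred] = max(H * betaOut, [], 2);
acc = mean(pred == y);
fprintf('Training accuracy: %.3f\n', acc);
```

The only "training" step is the single `pinv` call, which is where the speed advantage comes from. Since `W` and `b` are random, the accuracy changes slightly from run to run.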

However, ELM still faces challenges on complex data classification problems: because the hidden-layer weights and biases are drawn at random, some draws give a poor hidden representation, and accuracy can vary from run to run. To further improve the performance of ELM, we can optimize it with a genetic algorithm. A genetic algorithm is an optimization algorithm that simulates natural evolution and searches for good solutions through genetic operations (selection, crossover, and mutation). By combining a genetic algorithm with ELM, we can search for better configurations of weights and biases, thereby improving ELM's classification accuracy.
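The three genetic operations are easiest to see on a toy problem. The sketch below runs a small binary GA on the "OneMax" task (maximize the number of 1-bits in a chromosome). The population size, the rates, binary tournament selection, single-point crossover, and elitism are assumptions made for this illustration and are not taken from the blogger's code.

```matlab
% Toy GA on OneMax (illustrative settings, not the article's configuration).
nInd = 30; nBits = 20; nGen = 50;       % population size, chromosome length, generations
pc = 0.8;  pm = 0.02;                   % assumed crossover and mutation probabilities
fitnessFcn = @(P) sum(P, 2);            % fitness = number of 1-bits per individual

Pop = double(rand(nInd, nBits) > 0.5);  % random initial population
bestInit = max(fitnessFcn(Pop));

for g = 1:nGen
    fit = fitnessFcn(Pop);
    [~, eliteIdx] = max(fit);
    elite = Pop(eliteIdx, :);           % remember the best individual

    % Selection: binary tournament, the fitter of two random individuals wins.
    a = randi(nInd, nInd, 1);
    c = randi(nInd, nInd, 1);
    takeC = fit(c) > fit(a);
    a(takeC) = c(takeC);
    Pop = Pop(a, :);

    % Crossover: single-point, applied to consecutive pairs.
    for k = 1:2:nInd-1
        if rand < pc
            cut = randi(nBits - 1);
            tail = Pop(k, cut+1:end);
            Pop(k,   cut+1:end) = Pop(k+1, cut+1:end);
            Pop(k+1, cut+1:end) = tail;
        end
    end

    % Mutation: flip each bit independently with probability pm.
    flip = rand(nInd, nBits) < pm;
    Pop(flip) = 1 - Pop(flip);

    % Elitism: put the previous best back so the best fitness never drops.
    Pop(1, :) = elite;
end

bestFinal = max(fitnessFcn(Pop));
fprintf('Best fitness: %d -> %d (maximum %d)\n', bestInit, bestFinal, nBits);
```

When the GA is applied to ELM, the fitness function is replaced by a measure of classification quality, and the chromosome encodes the hidden-layer weights and biases.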

The process of optimizing the ELM neural network can be divided into the following steps:

- Data preparation: First, we need to prepare the data sets for training and testing. The data set should contain labeled samples and should be preprocessed to remove noise and unnecessary features.

- ELM model construction: Next, we build the ELM neural network model. ELM consists of an input layer, a hidden layer, and an output layer. The number of neurons in the hidden layer can be adjusted according to the complexity of the problem.

- Genetic algorithm optimization: Then, we use the genetic algorithm to optimize the ELM. The parameter settings and the choice of genetic operators have an important impact on the optimization results. We can choose them with techniques such as cross-validation.

- Training and testing: Finally, we train the optimized ELM model on the training data and evaluate it on the test data. By comparing the predicted results with the actual labels, we can calculate the classification accuracy and other evaluation metrics.
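The data preparation and evaluation steps can be sketched as follows. The synthetic data, the 70/30 split, and min-max scaling are assumptions made for illustration (the article does not say which preprocessing it uses), and a nearest-centroid rule stands in for the trained ELM so that the block runs on its own.

```matlab
% Data preparation and evaluation sketch (all choices are illustrative).
% Synthetic labelled data standing in for a real data set.
X = [randn(100,3) + 2; randn(100,3) - 2];
y = [ones(100,1); 2*ones(100,1)];

% Shuffle, then hold out 30% of the samples for testing.
n = size(X, 1);
idx = randperm(n);
nTrain = round(0.7 * n);
trainIdx = idx(1:nTrain);
testIdx  = idx(nTrain+1:end);

% Min-max scaling using statistics of the training set only.
xmin = min(X(trainIdx,:), [], 1);
xmax = max(X(trainIdx,:), [], 1);
scaleFcn = @(A) (A - xmin) ./ (xmax - xmin);
Xtr = scaleFcn(X(trainIdx,:));  ytr = y(trainIdx);
Xte = scaleFcn(X(testIdx,:));   yte = y(testIdx);

% Placeholder classifier (nearest class centroid) standing in for the ELM.
mu1 = mean(Xtr(ytr == 1, :), 1);
mu2 = mean(Xtr(ytr == 2, :), 1);
d1 = sum((Xte - mu1).^2, 2);
d2 = sum((Xte - mu2).^2, 2);
pred = 1 + (d2 < d1);                   % class 2 where centroid 2 is closer

% Evaluation: accuracy and confusion matrix (rows = true, columns = predicted).
acc = mean(pred == yte);
C = accumarray([yte pred], 1, [2 2]);
fprintf('Test accuracy: %.3f\n', acc);
disp(C);
```

Fitting the scaling on the training set alone keeps information about the test samples out of the model, which makes the reported accuracy more trustworthy.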

Through the above steps, we can implement data classification based on an ELM neural network optimized by a genetic algorithm. On complex data sets this method can improve on a plain, randomly initialized ELM, although the size of the gain depends on the data and on the genetic algorithm settings.
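Putting the pieces together, one possible shape of the whole procedure is sketched below. This is an assumption-laden illustration, not the blogger's implementation: it uses a real-coded chromosome instead of binary strings, validation accuracy as fitness, uniform crossover, Gaussian mutation, and elitism, and all names and settings are hypothetical.

```matlab
% GA-optimized ELM sketch (real-coded GA; every setting here is an assumption).
% Synthetic training and validation data: two Gaussian blobs.
Xtr = [randn(80,2) + 2; randn(80,2) - 2];  ytr = [ones(80,1); 2*ones(80,1)];
Xva = [randn(40,2) + 2; randn(40,2) - 2];  yva = [ones(40,1); 2*ones(40,1)];
Ttr = double(ytr == (1:2));                % one-hot training targets

d = size(Xtr, 2);  L = 10;                 % input dimension, hidden neurons
nGenes = d*L + L;                          % chromosome = [input weights, biases]
nInd = 20;  nGen = 15;  pm = 0.1;          % GA settings (assumed)

% Decode a chromosome into W (d x L) and b (1 x L), then compute hidden outputs.
sigm   = @(Z) 1 ./ (1 + exp(-Z));
hidden = @(X, chrom) sigm(X * reshape(chrom(1:d*L), d, L) + chrom(d*L+1:nGenes));

Pop = 2*rand(nInd, nGenes) - 1;            % genes in [-1, 1]
fit = zeros(nInd, 1);
bestFit = -Inf;  bestChrom = Pop(1, :);
history = zeros(nGen, 1);

for g = 1:nGen
    % Fitness: solve the output weights by least squares, score on validation data.
    for i = 1:nInd
        betaOut = pinv(hidden(Xtr, Pop(i,:))) * Ttr;
        [~, pred] = max(hidden(Xva, Pop(i,:)) * betaOut, [], 2);
        fit(i) = mean(pred == yva);
    end
    [genBest, iBest] = max(fit);
    if genBest > bestFit
        bestFit = genBest;  bestChrom = Pop(iBest, :);
    end
    history(g) = bestFit;

    % Selection: binary tournament.
    a = randi(nInd, nInd, 1);  c = randi(nInd, nInd, 1);
    takeC = fit(c) > fit(a);
    a(takeC) = c(takeC);
    Pop = Pop(a, :);

    % Crossover: uniform gene exchange between consecutive pairs.
    for k = 1:2:nInd-1
        mask = rand(1, nGenes) < 0.5;
        tmp = Pop(k, mask);
        Pop(k, mask)   = Pop(k+1, mask);
        Pop(k+1, mask) = tmp;
    end

    % Mutation: small Gaussian perturbation, clipped back to [-1, 1].
    mut = rand(nInd, nGenes) < pm;
    Pop = Pop + mut .* (0.2 * randn(nInd, nGenes));
    Pop = max(min(Pop, 1), -1);

    Pop(1, :) = bestChrom;                 % elitism
end
fprintf('Best validation accuracy: %.3f\n', bestFit);
```

Because the GA selects on validation accuracy, a separate test set that the GA never sees is still needed for an honest final estimate.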

To sum up, ELM is an effective neural network algorithm that randomly initializes the hidden-layer weights and biases and calculates the output-layer weights by the least squares method. To further improve its performance, we can combine it with a genetic algorithm, which searches for better weight and bias configurations and thereby improves the classification accuracy of ELM. This genetic-algorithm-optimized ELM has broad application prospects in data classification and can help us obtain more accurate and useful information from data.

Part of the code

```matlab
% BS2RV.m - Binary string to real vector
%
% This function decodes binary chromosomes into vectors of reals. The
% chromosomes are seen as the concatenation of binary strings of given
% length, and decoded into real numbers in a specified interval using
% either standard binary or Gray decoding.
%
% Syntax:       Phen = bs2rv(Chrom,FieldD)
%
% Input parameters:
%
%   Chrom    - Matrix containing the chromosomes of the current
%              population. Each line corresponds to one
%              individual's concatenated binary string
%              representation. Leftmost bits are MSb and
%              rightmost are LSb.
%
%   FieldD   - Matrix describing the length and how to decode
%              each substring in the chromosome. It has the
%              following structure:
%
%                [len;      (num)
%                 lb;       (num)
%                 ub;       (num)
%                 code;     (0=binary     | 1=gray)
%                 scale;    (0=arithmetic | 1=logarithmic)
%                 lbin;     (0=excluded   | 1=included)
%                 ubin];    (0=excluded   | 1=included)
%
%              where
%                len   - row vector containing the length of
%                        each substring in Chrom. sum(len)
%                        should equal the individual length.
%                lb,
%                ub    - Lower and upper bounds for each
%                        variable.
%                code  - binary row vector indicating how each
%                        substring is to be decoded.
%                scale - binary row vector indicating where to
%                        use arithmetic and/or logarithmic
%                        scaling.
%                lbin,
%                ubin  - binary row vectors indicating whether
%                        or not to include each bound in the
%                        representation range
%
% Output parameter:
%
%   Phen     - Real matrix containing the population phenotypes.

%
% Author: Carlos Fonseca,   Updated: Andrew Chipperfield
% Date: 08/06/93,           Date: 26-Jan-94

function Phen = bs2rv(Chrom,FieldD)

% Identify the population size (Nind)
% and the chromosome length (Lind)
[Nind,Lind] = size(Chrom);

% Identify the number of decision variables (Nvar)
[seven,Nvar] = size(FieldD);

if seven ~= 7
    error('FieldD must have 7 rows.');
end

% Get substring properties
len = FieldD(1,:);
lb = FieldD(2,:);
ub = FieldD(3,:);
code = ~(~FieldD(4,:));
scale = ~(~FieldD(5,:));
lin = ~(~FieldD(6,:));
uin = ~(~FieldD(7,:));

% Check substring properties for consistency
if sum(len) ~= Lind
    error('Data in FieldD must agree with chromosome length');
end

if ~all(lb(scale).*ub(scale)>0)
    error('Log-scaled variables must not include 0 in their range');
end

% Decode chromosomes
Phen = zeros(Nind,Nvar);

lf = cumsum(len);
li = cumsum([1 len]);

logsgn = sign(lb(scale));
lb(scale) = log( abs(lb(scale)) );
ub(scale) = log( abs(ub(scale)) );
delta = ub - lb;

Prec = .5 .^ len;
num = (~lin) .* Prec;
den = (lin + uin - 1) .* Prec;

for i = 1:Nvar
    idx = li(i):lf(i);
    if code(i) % Gray decoding
        Chrom(:,idx) = rem(cumsum(Chrom(:,idx)')',2);
    end
    Phen(:,i) = Chrom(:,idx) * [ (.5).^(1:len(i))' ];
    Phen(:,i) = lb(i) + delta(i) * (Phen(:,i) + num(i)) ./ (1 - den(i));
end

expand = ones(Nind,1);
if any(scale)
    Phen(:,scale) = logsgn(expand,:) .* exp(Phen(:,scale));
end
```
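To see what the decoding in `bs2rv` does, the arithmetic for a single variable can be worked through by hand. The snippet below does not call `bs2rv`; it repeats the same formulas for one 4-bit substring mapped onto the interval [0, 1] with both bounds included and arithmetic scaling. The bit pattern and the bounds are example values chosen for illustration.

```matlab
% Worked decoding example for one variable (values chosen for illustration).
bits = [1 0 1 0];                       % MSb first; standard binary for 10
lb = 0;  ub = 1;                        % bounds of the decoded variable
len = numel(bits);

% Weighted sum of bits with weights 1/2, 1/4, 1/8, 1/16.
frac = bits * (0.5 .^ (1:len))';        % 0.5 + 0.125 = 0.625

% With both bounds included (lin = uin = 1): num = 0 and den = Prec.
Prec = 0.5 ^ len;                       % 1/16
num = 0;
den = Prec;
x = lb + (ub - lb) * (frac + num) / (1 - den);   % 0.625 / 0.9375 = 10/15

% Gray decoding: a cumulative XOR along the string recovers standard binary.
gray = [1 1 1 1];                       % Gray code of binary 1 0 1 0
bin = rem(cumsum(gray), 2);             % gives [1 0 1 0]

fprintf('Decoded value: %.4f\n', x);
```

The division by `1 - den` stretches the largest bit pattern (all ones) exactly onto the upper bound, so a 4-bit substring covers [0, 1] in 15 equal steps.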

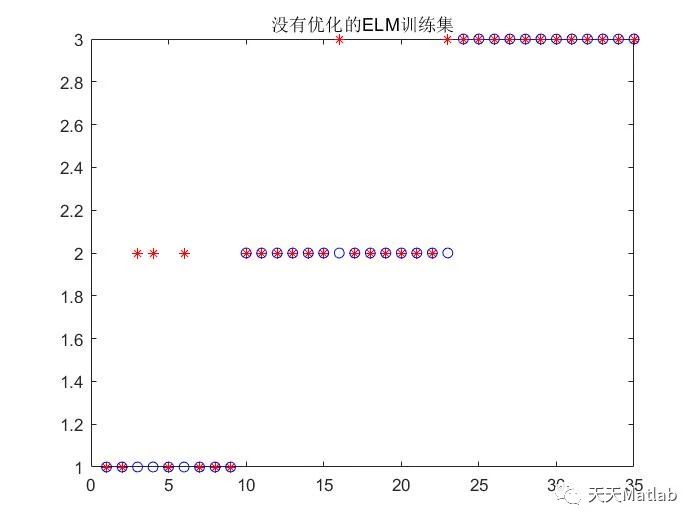

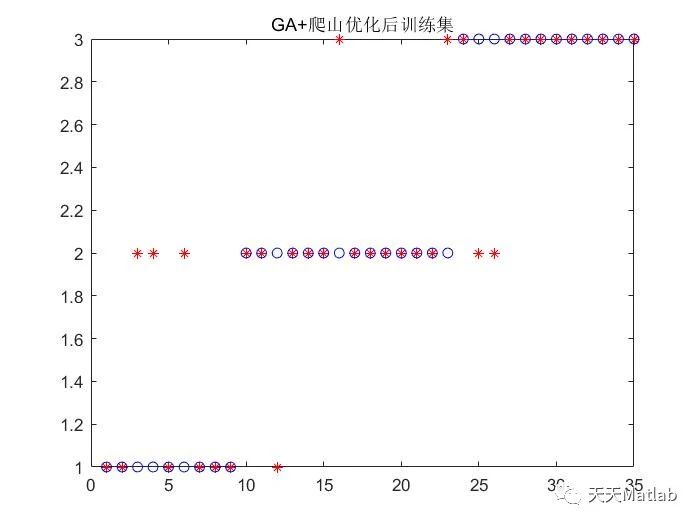

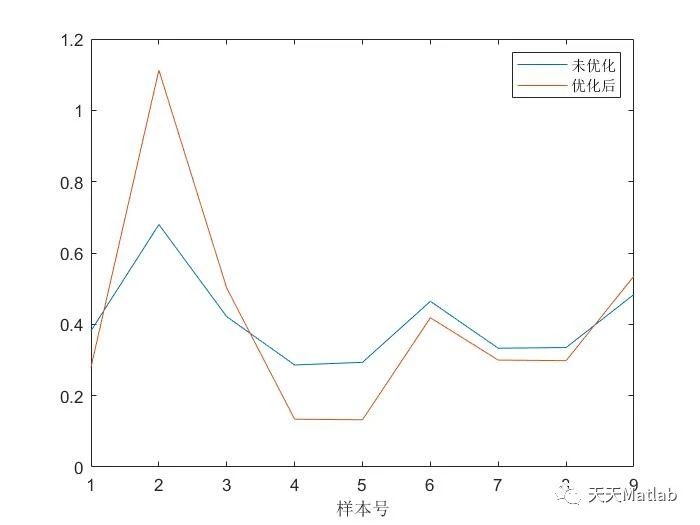

Run results

