What is the thundering herd effect?

When I first heard this term, I found it intriguing but had no idea what it meant. I assumed the odd name was just a quirk of the Chinese translation.

The long version (from the Internet), TL;DR

The thundering herd effect occurs when multiple processes (or threads) are blocked in a sleep state, all waiting for the same event. When that event occurs, every waiting process (or thread) is woken up. In the end, however, only one process (or thread) can obtain “control” and handle the event; the others fail to obtain “control” and can only go back to sleep. This waste of performance is called the thundering herd effect.

The short version (in my own words)

A clap of thunder woke up many people, but only one of them went out to bring in the laundry. In other words: a request arrives and wakes up many processes, but only one of them ends up handling it.

Cause & Problem

It’s actually simple. To increase an application’s request-handling capacity, we usually have multiple processes (or threads) listen for requests. When a request arrives, all of them are woken up, because any of them is able to handle it.

The problem is that in the end only one process can handle it. When there are many requests, the processes keep waking up and going back to sleep, over and over, doing a lot of useless work, and all that context switching is expensive. So how do we solve this problem? That is the focus of today’s article. Let’s see how nginx solves it.
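The wake-up-then-go-back-to-sleep cycle described above can be reproduced in a few lines of C. The following is only a minimal sketch, not how nginx works: it assumes a pipe as the shared event source and `poll()` as the waiting primitive, and the names `run_herd`, `wait_and_claim` and `struct herd_result` are made up for this illustration.

```c
#include <fcntl.h>
#include <poll.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Exit codes the workers use to report what happened to them. */
enum { HANDLED = 0, WOKEN_FOR_NOTHING = 1, NOT_WOKEN = 2 };

struct herd_result {
    int handled;    /* workers that actually got the event          */
    int wasted;     /* workers woken up only to find nothing left   */
    int not_woken;  /* workers that timed out without being woken   */
};

/* Worker: sleep until the shared fd is readable, then try to claim the event. */
static int wait_and_claim(int fd)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    char byte;

    if (poll(&pfd, 1, 1000) <= 0)          /* timeout or error */
        return NOT_WOKEN;
    /* Non-blocking read: only one process can take the single byte. */
    return read(fd, &byte, 1) == 1 ? HANDLED : WOKEN_FOR_NOTHING;
}

/* Fork nworkers workers, deliver exactly one event and count the outcomes.
 * Returns 0 on success, -1 if setup, a fork or the write failed. */
int run_herd(int nworkers, struct herd_result *out)
{
    int pipefd[2], status, i, rc = 0;
    pid_t pid;

    out->handled = out->wasted = out->not_woken = 0;
    if (pipe(pipefd) == -1)
        return -1;
    /* Non-blocking read end, so the losers get EAGAIN instead of blocking. */
    if (fcntl(pipefd[0], F_SETFL, O_NONBLOCK) == -1)
        rc = -1;

    for (i = 0; rc == 0 && i < nworkers; i++) {
        pid = fork();
        if (pid == 0)
            _exit(wait_and_claim(pipefd[0]));
        if (pid == -1)
            rc = -1;
    }

    poll(NULL, 0, 100);                    /* 100 ms pause so workers fall asleep */
    if (write(pipefd[1], "x", 1) != 1)     /* the single event */
        rc = -1;

    while (wait(&status) > 0) {            /* reap every worker */
        if (!WIFEXITED(status))
            continue;
        switch (WEXITSTATUS(status)) {
        case HANDLED:           out->handled++;   break;
        case WOKEN_FOR_NOTHING: out->wasted++;    break;
        default:                out->not_woken++; break;
        }
    }
    close(pipefd[0]);
    close(pipefd[1]);
    return rc;
}
```

Calling `run_herd(4, &r)` should always leave `r.handled` at 1, since there is only one byte to read. How the remaining workers split between `wasted` and `not_woken` depends on scheduling, but every wasted wakeup is exactly the useless work the thundering herd effect refers to.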

Nginx architecture

First, we need to understand the general architecture of nginx. nginx divides its processes into two categories: master and worker. This is the very common master-slave (M-S) strategy: the master is responsible for the overall management of the workers. It is also responsible for starting up, reading configuration files, and listening for and handling various signals.

This raises the first point to pay attention to. The master has these tasks and only these tasks; it does not deal with requests. As shown in the figure, requests are handled directly by the workers. So which worker should handle a given request? And how does a worker handle requests internally?

Nginx uses epoll

Next we need to know how nginx uses epoll to process requests. What follows involves some source code, but don’t worry: you don’t need to understand all of it, only what each piece does. Along the way, I will briefly describe how I located each piece of source code.

Master’s job

In fact, the master is not completely idle where requests are concerned: at the very least, it occupies the port. https://github.com/nginx/nginx/blob/b489ba83e9be446923facfe1a2fe392be3095d1f/src/core/ngx_connection.c#L407C13-L407C13

    ngx_open_listening_sockets(ngx_cycle_t *cycle)
    {
        .....
        for (i = 0; i < cycle->listening.nelts; i++) {
            .....
            if (bind(s, ls[i].sockaddr, ls[i].socklen) == -1) {
                .....
            }
            .....
            if (listen(s, ls[i].backlog) == -1) {
                .....
            }
            .....
        }
        .....
    }

Based on the nginx.conf configuration file, the master knows which ports need to be listened on, so it binds them. Nothing surprising here.

[How I found the source] I simply searched the code for bind, because whatever else a server does, it always has to bind a port.

Next comes the creation of the workers, which is inconspicuous but crucial. https://github.com/nginx/nginx/blob/b489ba83e9be446923facfe1a2fe392be3095d1f/src/os/unix/ngx_process.c#L186

    ngx_spawn_process(ngx_cycle_t *cycle, ngx_spawn_proc_pt proc, void *data,
        char *name, ngx_int_t respawn)
    {
        ....
        pid = fork();
        ....
    }

[How I found the source] Here I simply searched for fork. The whole project calls fork in only two places, so I quickly found the one that creates the workers.

Because a worker is created by fork, the kernel copies the master’s task_struct for it. The worker therefore has almost everything the master has, including the listening sockets the master has already bound.
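To make the inheritance concrete, here is a minimal sketch of a “master” that opens a listening socket and then forks a “worker” which finds the same socket behind the inherited file descriptor. It is an illustration under simplifying assumptions (IPv4 loopback only, one worker), and `open_listener` and `worker_inherits_listener` are hypothetical helper names, not nginx functions.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Master side: create a TCP socket, bind it to 127.0.0.1:port and listen.
 * Passing port 0 lets the kernel pick a free port. Returns the fd or -1. */
int open_listener(unsigned short port)
{
    struct sockaddr_in addr;
    int fd = socket(AF_INET, SOCK_STREAM, 0);

    if (fd == -1)
        return -1;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(port);
    if (bind(fd, (struct sockaddr *)&addr, sizeof(addr)) == -1
        || listen(fd, 128) == -1) {
        close(fd);
        return -1;
    }
    return fd;
}

/* Worker side: report whether the inherited fd is a listening socket. */
static int is_listening(int fd)
{
    int val = 0;
    socklen_t len = sizeof(val);

    return getsockopt(fd, SOL_SOCKET, SO_ACCEPTCONN, &val, &len) == 0 && val;
}

/* Fork one worker. Returns 1 if the worker sees the master's listening
 * socket through the inherited descriptor, 0 if not, -1 on error. */
int worker_inherits_listener(int listen_fd)
{
    int status;
    pid_t pid = fork();

    if (pid == -1)
        return -1;
    if (pid == 0)                                  /* child = worker */
        _exit(is_listening(listen_fd) ? 0 : 1);
    if (waitpid(pid, &status, 0) == -1 || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status) == 0;
}
```

The worker never calls bind or listen itself, yet the descriptor it inherited refers to the very socket the master set up. That is why every worker is able to accept connections on the port the master occupied.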

Worker’s job

Nginx is designed around modules, with different functions split into different modules; epoll naturally lives in ngx_epoll_module.c

https://github.com/nginx/nginx/blob/b489ba83e9be446923facfe1a2fe392be3095d1f/src/event/modules/ngx_epoll_module.c#L330C23-L330C23

    ngx_epoll_init(ngx_cycle_t *cycle, ngx_msec_t timer)
    {
        ngx_epoll_conf_t  *epcf;

        epcf = ngx_event_get_conf(cycle->conf_ctx, ngx_epoll_module);

        if (ep == -1) {
            ep = epoll_create(cycle->connection_n / 2);
            ....
        }
        ....
    }

Nothing else here matters for now, not even epoll_ctl and epoll_wait. What you need to know is that, judging from the call chain, the epoll object is created by the worker. In other words, each worker has its own epoll object, but they all watch the same listening socket!

[How I found the source] This one is even more direct: search for epoll_create and you are sure to find it.

The key to the problem

At this point you can probably see the key to the problem. Every worker is able to handle requests, so which one should be woken up when a request arrives? If they are all treated equally, won’t every epoll instance report the event and every worker try to accept the request? Obviously that won’t do. So how does nginx solve it?
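The situation can be sketched in C. For simplicity the sketch below simulates two workers inside a single process rather than forking: each “worker” gets its own epoll instance, both watching one non-blocking listening socket, and a single client connects. The function names `worker_epoll` and `two_workers_one_connection` are assumptions made for this example only.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

/* Per-worker setup: a private epoll instance watching the shared listener. */
int worker_epoll(int listen_fd)
{
    struct epoll_event ev;
    int ep = epoll_create1(0);

    if (ep == -1)
        return -1;
    memset(&ev, 0, sizeof(ev));
    ev.events = EPOLLIN;
    ev.data.fd = listen_fd;
    if (epoll_ctl(ep, EPOLL_CTL_ADD, listen_fd, &ev) == -1) {
        close(ep);
        return -1;
    }
    return ep;
}

/* Two simulated workers, one incoming connection. Stores how many epoll
 * instances reported the event in *woken and how many accept() calls
 * succeeded in *accepted. Returns 0 on success, -1 on setup failure. */
int two_workers_one_connection(int *woken, int *accepted)
{
    struct sockaddr_in addr;
    socklen_t len = sizeof(addr);
    struct epoll_event ev;
    int ep[2] = { -1, -1 }, lfd, cfd = -1, conn, i, rc = -1;

    *woken = *accepted = 0;

    /* Non-blocking listener on a kernel-chosen loopback port. */
    lfd = socket(AF_INET, SOCK_STREAM | SOCK_NONBLOCK, 0);
    if (lfd == -1)
        return -1;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    if (bind(lfd, (struct sockaddr *)&addr, len) == -1
        || listen(lfd, 16) == -1
        || getsockname(lfd, (struct sockaddr *)&addr, &len) == -1)
        goto out;

    ep[0] = worker_epoll(lfd);
    ep[1] = worker_epoll(lfd);
    if (ep[0] == -1 || ep[1] == -1)
        goto out;

    /* One client connects: this is the single "request". */
    cfd = socket(AF_INET, SOCK_STREAM, 0);
    if (cfd == -1
        || connect(cfd, (struct sockaddr *)&addr, sizeof(addr)) == -1)
        goto out;

    for (i = 0; i < 2; i++)            /* every worker's epoll sees the event */
        if (epoll_wait(ep[i], &ev, 1, 1000) == 1)
            (*woken)++;

    for (i = 0; i < 2; i++) {          /* but only one accept() can succeed */
        conn = accept(lfd, NULL, NULL);
        if (conn != -1) {
            (*accepted)++;
            close(conn);
        }
    }
    rc = 0;
out:
    if (cfd != -1)
        close(cfd);
    if (ep[0] != -1)
        close(ep[0]);
    if (ep[1] != -1)
        close(ep[1]);
    close(lfd);
    return rc;
}
```

With plain level-triggered EPOLLIN, both epoll instances report the listener as readable, so `*woken` ends up as 2 while `*accepted` is only 1. The second “worker” was woken for nothing, which is the problem the solutions in the next section address.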

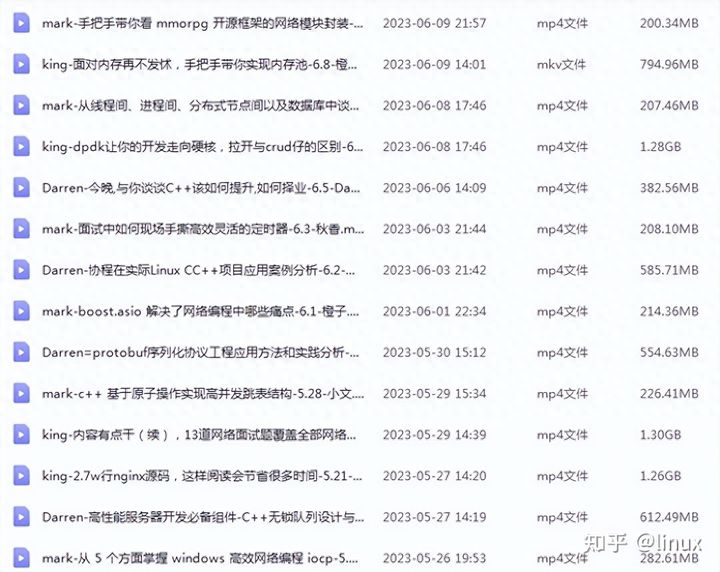

How to solve

There are three solutions. Let’s look at them one by one:

- accept_mutex (application layer solution)

- EPOLLEXCLUSIVE (kernel-level solution)

- SO_REUSEPORT (kernel-level solution)

accept_mutex

When you see the word mutex, you probably already know: it’s a lock! This is also a “basic operation” in high-concurrency programming: when something goes wrong, add a lock. And yes, locking can definitely solve the problem. https://github.com/nginx/nginx/blob/b489ba83e9be446923facfe1a2fe392be3095d1f/src/event/ngx_event_accept.c#L328

I won’t show the specific code. There are many details, but the essence is easy to understand: when a request arrives, whichever worker holds the lock handles it, and the workers that fail to get the lock leave it alone. Locking is a straightforward approach. Its only drawback is that it is slow, but at least it is fair.

EPOLLEXCLUSIVE

EPOLLEXCLUSIVE is an epoll flag added in the 4.5 kernel in 2016. It reduces the likelihood of a thundering herd when multiple processes/threads add a shared fd through epoll_ctl: when an event occurs, the kernel wakes up only one of the processes blocked in epoll_wait (the epoll_ctl man page says “one or more”) instead of waking all of them.

The key point: each time, the kernel wakes up only one sleeping process to handle the event.

However, this is not a perfect solution; it only reduces the probability. Why? Compared with waking everyone up, it is certainly much better and reduces contention. But since the socket is still shared and it is uncertain when the current process will finish its work, a process that is woken up later may find that the pending connection on the socket has already been taken by a process that was woken up earlier.
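In code, the difference is a single flag at registration time, plus a worker that tolerates finding nothing to accept. The sketch below assumes Linux 4.5 or later with a libc that defines EPOLLEXCLUSIVE, and a non-blocking listening socket; `add_listener_exclusive` and `accept_or_skip` are hypothetical helper names.

```c
#include <errno.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

/* Register a shared listening socket with EPOLLEXCLUSIVE so the kernel
 * does not wake every epoll instance that watches it.
 * Returns 0 on success, -1 on error (see errno). */
int add_listener_exclusive(int epfd, int listen_fd)
{
    struct epoll_event ev;

    memset(&ev, 0, sizeof(ev));
    ev.events = EPOLLIN | EPOLLEXCLUSIVE;   /* only valid with EPOLL_CTL_ADD */
    ev.data.fd = listen_fd;
    return epoll_ctl(epfd, EPOLL_CTL_ADD, listen_fd, &ev);
}

/* A woken worker must still cope with an empty queue, because another
 * worker may have accepted the connection first.
 * Returns 1 and stores the fd in *conn if a connection was accepted,
 * 0 if nothing was pending, -1 on a real error. */
int accept_or_skip(int listen_fd, int *conn)
{
    int fd = accept(listen_fd, NULL, NULL);

    if (fd != -1) {
        *conn = fd;
        return 1;
    }
    if (errno == EAGAIN || errno == EWOULDBLOCK)
        return 0;                  /* someone else got there first */
    return -1;
}
```

Each worker would call `add_listener_exclusive` once on its own epoll instance and then use `accept_or_skip` whenever epoll_wait reports the listener, treating a return value of 0 as a harmless lost race.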

SO_REUSEPORT

Nginx added this feature in version 1.9.1 https://www.nginx.com/blog/socket-sharding-nginx-release-1-9-1/

In essence, it takes advantage of the Linux reuseport feature. With reuseport, the kernel allows multiple processes to have listening sockets on the same port and does the load balancing at the kernel level, waking up just one of the processes each time.

In Nginx, this means each worker process creates its own independent listening socket on the same port, and when a connection arrives only one process gets it. The effect is as shown in the picture below.

The usage method is:

    http {
        server {
            listen 80 reuseport;
            server_name localhost;
            #...
        }
    }

Judging from the official test results, it is indeed powerful.

Of course, as the saying goes, nothing is absolute, and there is no silver bullet in technology. The problem with this solution is that the kernel does not know whether a worker is busy; it hands the connection over regardless. Compare this with the lock-grabbing approach: a process that wins the lock cannot be very busy, because a process swamped with work never gets the chance to grab the lock. With reuseport, even if the current process is very busy, a connection is still sent to it as soon as reuseport’s load-balancing rules pick it, so some requests end up stuck behind an earlier slow request.

Summary

This article started with what the “thundering herd effect” is, moved on to the nginx architecture and how it uses epoll, and finally analyzed three different solutions to the “thundering herd effect”. After this analysis, you can see that nginx’s multi-queue service model does have some problems, but it is entirely sufficient for most scenarios.