Technical background

While developing audio and video modules for Android, we ran into the following requirement. Customers wanted to pull RTSP streams from Hikvision, Dahua, and other IP cameras, receive the decoded YUV or RGB data through a callback so they could analyze or process the video, and then deliver the processed data to the lightweight RTSP service module or the RTMP push module for re-encoding and forwarding. This article uses one case as an example (pulling an RTSP stream, obtaining the decoded data, and injecting it into the lightweight RTSP service) to outline the general implementation.

Technical implementation

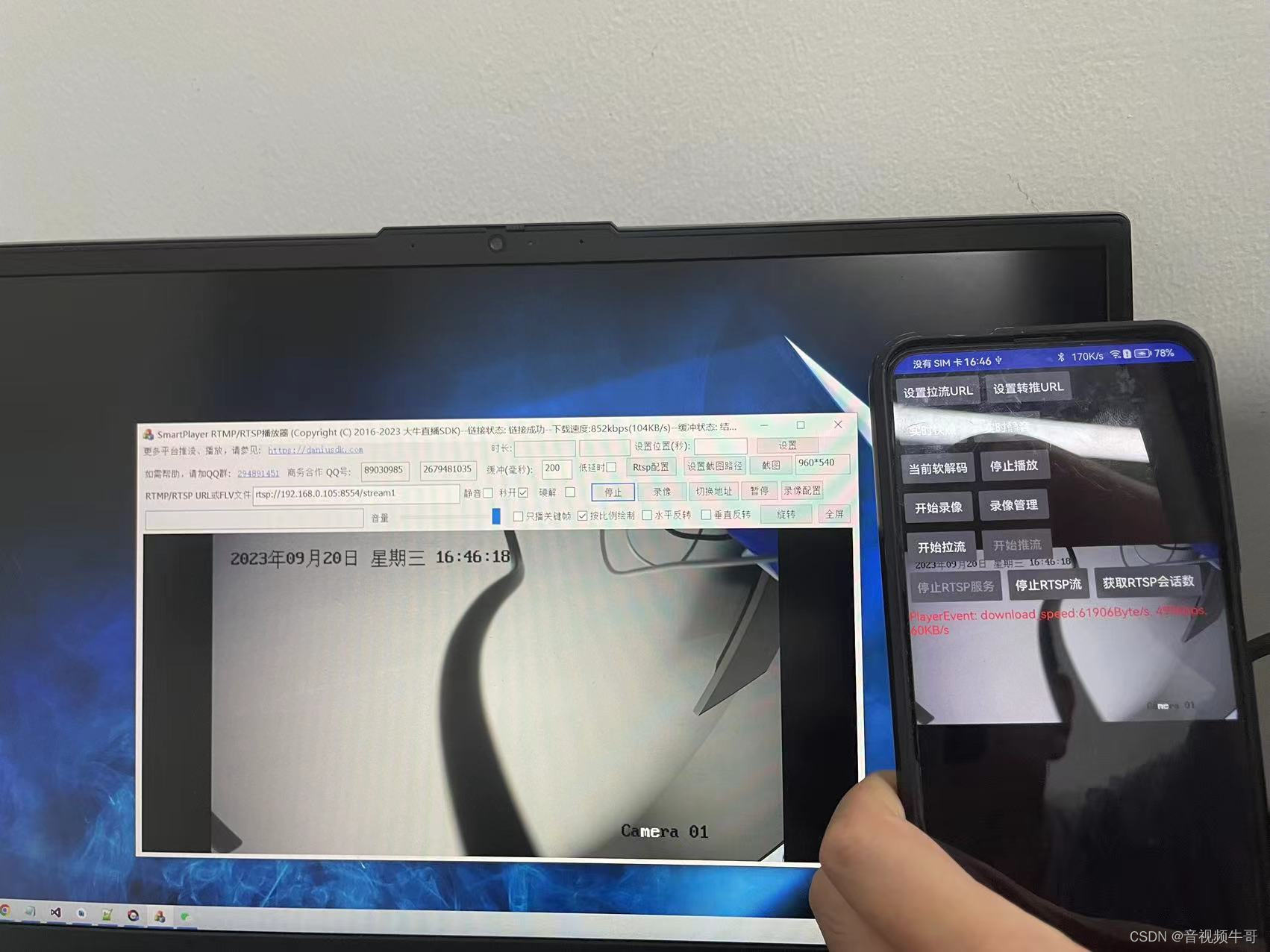

Without further ado, there is no truth without pictures. The picture below is during testing. The Android terminal pulls the RTSP stream, then calls back the YUV data, and injects it into the lightweight RTSP service through the push interface, and then the Windows platform pulls the light RTSP URL, overall, millisecond delay:

Let's start with pulling the RTSP stream. If you don't need to render the video, pass null as the second parameter of SmartPlayerSetSurface(). If you don't need audio, set SmartPlayerSetMute() to 1. Because we want YUV callbacks, register an I420 external render; if you need RGB instead, register the RGBA external render.

```java
private boolean StartPlay()
{
    if (!OpenPullHandle())
        return false;

    // If the second parameter is set to null, only audio is played (no video rendering)
    libPlayer.SmartPlayerSetSurface(playerHandle, sSurfaceView);
    libPlayer.SmartPlayerSetRenderScaleMode(playerHandle, 1);

    // For RGBA callbacks instead of I420:
    // libPlayer.SmartPlayerSetExternalRender(playerHandle, new RGBAExternalRender());
    libPlayer.SmartPlayerSetExternalRender(playerHandle, new I420ExternalRender());

    libPlayer.SmartPlayerSetFastStartup(playerHandle, isFastStartup ? 1 : 0);
    libPlayer.SmartPlayerSetAudioOutputType(playerHandle, 1);

    if (isMute) {
        libPlayer.SmartPlayerSetMute(playerHandle, isMute ? 1 : 0);
    }

    if (isHardwareDecoder)
    {
        int isSupportH264HwDecoder = libPlayer
                .SetSmartPlayerVideoHWDecoder(playerHandle, 1);
        int isSupportHevcHwDecoder = libPlayer.SetSmartPlayerVideoHevcHWDecoder(playerHandle, 1);
        Log.i(TAG, "isSupportH264HwDecoder: " + isSupportH264HwDecoder + ", isSupportHevcHwDecoder: " + isSupportHevcHwDecoder);
    }

    libPlayer.SmartPlayerSetLowLatencyMode(playerHandle, isLowLatency ? 1 : 0);
    libPlayer.SmartPlayerSetRotation(playerHandle, rotate_degrees);

    int iPlaybackRet = libPlayer.SmartPlayerStartPlay(playerHandle);
    if (iPlaybackRet != 0) {
        Log.e(TAG, "StartPlay failed!");
        // Release the player handle only if nothing else is still using it
        if (!isPulling && !isRecording && !isPushing && !isRTSPPublisherRunning)
        {
            releasePlayerHandle();
        }
        return false;
    }

    isPlaying = true;
    return true;
}
```

The corresponding implementation of OpenPullHandle() is as follows:

```java
/*
 * SmartRelayDemo.java
 * Created: daniusdk.com
 */
private boolean OpenPullHandle()
{
    //if (playerHandle != 0) {
    //    return true;
    //}

    // If pulling, playback or recording is already active, the handle is already open
    if (isPulling || isPlaying || isRecording)
        return true;

    //playbackUrl = "rtsp://xxxx";
    if (playbackUrl == null) {
        Log.e(TAG, "playback URL is null...");
        return false;
    }

    playerHandle = libPlayer.SmartPlayerOpen(myContext);
    if (playerHandle == 0) {
        Log.e(TAG, "playerHandle is nil..");
        return false;
    }

    libPlayer.SetSmartPlayerEventCallbackV2(playerHandle, new EventHandlePlayerV2());
    libPlayer.SmartPlayerSetBuffer(playerHandle, playBuffer);

    // Report the download speed periodically (see the DOWNLOAD_SPEED event)
    libPlayer.SmartPlayerSetReportDownloadSpeed(playerHandle, 1, 5);

    // Set the RTSP timeout
    int rtsp_timeout = 12;
    libPlayer.SmartPlayerSetRTSPTimeout(playerHandle, rtsp_timeout);

    // Switch automatically between RTSP over TCP and UDP
    int is_auto_switch_tcp_udp = 1;
    libPlayer.SmartPlayerSetRTSPAutoSwitchTcpUdp(playerHandle, is_auto_switch_tcp_udp);

    libPlayer.SmartPlayerSaveImageFlag(playerHandle, 1);

    // Only used when playing an RTSP stream
    //libPlayer.SmartPlayerSetRTSPTcpMode(playerHandle, 1);

    libPlayer.SmartPlayerSetUrl(playerHandle, playbackUrl);
    return true;
}
```
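OpenPullHandle() only checks that playbackUrl is not null. A stricter check before SmartPlayerSetUrl() can reject malformed URLs up front instead of waiting for the SDK to report a problem later (for example through the NO_MEDIADATA_RECEIVED event). The sketch below is a hypothetical helper, not part of the SDK: `RtspUrlCheck` uses `java.net.URI` to require the `rtsp` scheme and a host, and falls back to 554, the standard RTSP port, when the URL omits a port.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper (not part of the SDK): sanity-checks a playback URL
// before it is passed to SmartPlayerSetUrl().
final class RtspUrlCheck {
    /** Default RTSP port. */
    static final int DEFAULT_RTSP_PORT = 554;

    private RtspUrlCheck() {}

    /** True if url parses as an rtsp:// URL that names a host. */
    static boolean isValidRtspUrl(String url) {
        if (url == null || url.isEmpty()) {
            return false;
        }
        try {
            URI uri = new URI(url);
            return "rtsp".equalsIgnoreCase(uri.getScheme()) && uri.getHost() != null;
        } catch (URISyntaxException e) {
            return false;
        }
    }

    /** Explicit port from a non-null URL, 554 if none is given, or -1 if it does not parse. */
    static int portOrDefault(String url) {
        try {
            int port = new URI(url).getPort();
            return port == -1 ? DEFAULT_RTSP_PORT : port;
        } catch (URISyntaxException e) {
            return -1;
        }
    }
}
```

For example, `RtspUrlCheck.isValidRtspUrl("rtsp://admin:12345@192.168.1.64:554/Streaming/Channels/101")` returns true, while an `http://` URL or `null` returns false. The address, credentials and path are placeholders; the real path depends on the camera vendor and model. Passwords containing reserved characters such as `@` must be percent-encoded, or this check rejects the URL.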

The player's event callback is shown below. On the pull side, the main things to watch are the connection status and the real-time download speed:

```java
class EventHandlePlayerV2 implements NTSmartEventCallbackV2 {
    @Override
    public void onNTSmartEventCallbackV2(long handle, int id, long param1,
                                         long param2, String param3, String param4, Object param5) {
        //Log.i(TAG, "EventHandleV2: handle=" + handle + " id:" + id);
        String player_event = "";

        switch (id) {
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_STARTED:
                player_event = "Start..";
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_CONNECTING:
                player_event = "Connecting..";
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_CONNECTION_FAILED:
                player_event = "Connection failed..";
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_CONNECTED:
                player_event = "Connection successful..";
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_DISCONNECTED:
                player_event = "Connection disconnected..";
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_STOP:
                player_event = "Stop playing..";
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_RESOLUTION_INFO:
                player_event = "Resolution information: width: " + param1 + ", height: " + param2;
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_NO_MEDIADATA_RECEIVED:
                player_event = "Cannot receive media data, maybe the URL is wrong..";
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_SWITCH_URL:
                player_event = "Switch playback URL..";
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_CAPTURE_IMAGE:
                // param1: result code (0 = success), param3: snapshot file path
                player_event = "Snapshot: " + param1 + " Path: " + param3;
                if (param1 == 0) {
                    player_event = player_event + ", Snapshot taken successfully";
                } else {
                    player_event = player_event + ", Failed to take snapshot";
                }
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_RECORDER_START_NEW_FILE:
                player_event = "[record] Started a new recording file: " + param3;
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_ONE_RECORDER_FILE_FINISHED:
                player_event = "[record] Finished a recording file: " + param3;
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_START_BUFFERING:
                Log.i(TAG, "Start Buffering");
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_BUFFERING:
                Log.i(TAG, "Buffering:" + param1 + "%");
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_STOP_BUFFERING:
                Log.i(TAG, "Stop Buffering");
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_DOWNLOAD_SPEED:
                player_event = "download_speed:" + param1 + "Byte/s" + ", "
                        + (param1 * 8 / 1000) + "kbps" + ", " + (param1 / 1024)
                        + "KB/s";
                break;
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PLAYER_RTSP_STATUS_CODE:
                Log.e(TAG, "RTSP error code received, please make sure username/password is correct, error code:" + param1);
                player_event = "RTSP error code:" + param1;
                break;
        }
        // player_event now holds a readable description of the event;
        // displaying it (e.g. on the UI thread) is not part of this excerpt.
    }
}
```
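The DOWNLOAD_SPEED branch mixes two unit conventions: kbps uses a decimal prefix (bits / 1000) while KB/s uses a binary prefix (bytes / 1024), so 250000 Byte/s is reported as 2000 kbps but 244 KB/s rather than 250. The hypothetical `DownloadSpeed` helper below isolates that arithmetic, assuming (as the log label suggests) that `param1` is a rate in bytes per second.

```java
// Hypothetical helper reproducing the DOWNLOAD_SPEED branch of
// EventHandlePlayerV2; param1 is assumed to be in bytes per second.
final class DownloadSpeed {
    private DownloadSpeed() {}

    /** Kilobits per second, decimal prefix (1 kbps = 1000 bit/s), truncated. */
    static long toKbps(long bytesPerSecond) {
        return bytesPerSecond * 8 / 1000;
    }

    /** Kilobytes per second, binary prefix (1 KB = 1024 bytes), truncated. */
    static long toKBps(long bytesPerSecond) {
        return bytesPerSecond / 1024;
    }

    /** Same text format as the player_event string in the callback. */
    static String describe(long bytesPerSecond) {
        return "download_speed:" + bytesPerSecond + "Byte/s, "
                + toKbps(bytesPerSecond) + "kbps, "
                + toKBps(bytesPerSecond) + "KB/s";
    }
}
```

`DownloadSpeed.describe(250000)` produces `download_speed:250000Byte/s, 2000kbps, 244KB/s`, matching the string built in the callback.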

The next step is to start the RTSP service:

```java
// Start/stop the RTSP service
class ButtonRtspServiceListener implements OnClickListener {
    @Override
    public void onClick(View v) {
        if (isRTSPServiceRunning) {
            stopRtspService();
            btnRtspService.setText("Start RTSP service");
            btnRtspPublisher.setEnabled(false);
            isRTSPServiceRunning = false;
            return;
        }

        if (!OpenPushHandle())
        {
            return;
        }

        Log.i(TAG, "onClick start rtsp service..");

        rtsp_handle_ = libPublisher.OpenRtspServer(0);
        if (rtsp_handle_ == 0) {
            Log.e(TAG, "Failed to create rtsp server instance! Please check the validity of the SDK");
            return;
        }

        int port = 8554;
        if (libPublisher.SetRtspServerPort(rtsp_handle_, port) != 0) {
            libPublisher.CloseRtspServer(rtsp_handle_);
            rtsp_handle_ = 0;
            Log.e(TAG, "Failed to set rtsp server port! Please check whether the port is already in use or out of range!");
            return;
        }

        //String user_name = "admin";
        //String password = "12345";
        //libPublisher.SetRtspServerUserNamePassword(rtsp_handle_, user_name, password);

        if (libPublisher.StartRtspServer(rtsp_handle_, 0) != 0) {
            libPublisher.CloseRtspServer(rtsp_handle_);
            rtsp_handle_ = 0;
            Log.e(TAG, "Failed to start rtsp server! Please check whether the set port is occupied!");
            return;
        }

        // Only mark the service as running once every step has succeeded
        Log.i(TAG, "Start rtsp server successfully!");
        btnRtspService.setText("Stop RTSP service");
        btnRtspPublisher.setEnabled(true);
        isRTSPServiceRunning = true;
    }
}
```
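The log messages above mention two port problems: a port that is already in use and a port outside the valid range. If you want to catch these before calling SetRtspServerPort(), a plain-Java probe like the sketch below can help. `PortCheck` is a hypothetical helper, not part of the SDK, and it assumes the RTSP service accepts its control connections over TCP. The check is inherently racy (another process can take the port between the probe and the SDK's own bind), so the SDK return values remain the authoritative signal.

```java
import java.io.IOException;
import java.net.ServerSocket;

// Hypothetical pre-check (not part of the SDK) for the port passed to
// SetRtspServerPort(). Assumes the RTSP service listens on TCP.
final class PortCheck {
    private PortCheck() {}

    /** TCP port numbers run from 1 to 65535 (0 means "any free port"). */
    static boolean inRange(int port) {
        return port >= 1 && port <= 65535;
    }

    /**
     * True if a TCP listener could be bound to the port at the moment of the call.
     * Racy by nature: another process may take the port right afterwards.
     */
    static boolean isTcpPortFree(int port) {
        if (!inRange(port)) {
            return false;
        }
        try (ServerSocket probe = new ServerSocket(port)) {
            return true;   // bind succeeded; try-with-resources closes the probe again
        } catch (IOException e) {
            return false;  // typically "Address already in use" or a permission error
        }
    }
}
```

`PortCheck.isTcpPortFree(8554)` returns false while another app on the device is already listening on 8554, which is the "port is occupied" case the log messages describe.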

If you need to stop the service, the corresponding implementation is as follows:

```java
// Stop the RTSP service
private void stopRtspService() {
    if (!isRTSPServiceRunning)
        return;

    if (libPublisher != null && rtsp_handle_ != 0) {
        libPublisher.StopRtspServer(rtsp_handle_);
        libPublisher.CloseRtspServer(rtsp_handle_);
        rtsp_handle_ = 0;
    }

    if (!isPushing)
    {
        releasePublisherHandle();
    }

    isRTSPServiceRunning = false;
}
```

Publishing the RTSP stream, and stopping publication:

```java
private boolean StartRtspStream()
{
    if (isRTSPPublisherRunning)
        return false;

    String rtsp_stream_name = "stream1";
    libPublisher.SetRtspStreamName(publisherHandle, rtsp_stream_name);
    libPublisher.ClearRtspStreamServer(publisherHandle);
    libPublisher.AddRtspStreamServer(publisherHandle, rtsp_handle_, 0);

    if (libPublisher.StartRtspStream(publisherHandle, 0) != 0)
    {
        Log.e(TAG, "Call to publish rtsp stream interface failed!");
        // The publisher handle is shared with RTMP push and the RTSP service,
        // so close it only when neither is running (same rule as stopRtspPublisher())
        if (!isPushing && !isRTSPServiceRunning)
        {
            libPublisher.SmartPublisherClose(publisherHandle);
            publisherHandle = 0;
        }
        return false;
    }

    isRTSPPublisherRunning = true;
    return true;
}

// Stop publishing the RTSP stream
private void stopRtspPublisher()
{
    if (!isRTSPPublisherRunning)
        return;

    isRTSPPublisherRunning = false;

    if (null == libPublisher || 0 == publisherHandle)
        return;

    libPublisher.StopRtspStream(publisherHandle);

    if (!isPushing && !isRTSPServiceRunning)
    {
        releasePublisherHandle();
    }
}
```
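Several functions above repeat the same pattern: a shared handle is released only when no feature still uses it (stopRtspPublisher(), for instance, releases the publisher handle only if neither RTMP push nor the RTSP service is running). The sketch below is a hypothetical, SDK-free model of that rule for the publisher handle. Each feature flag is changed through one method, and the handle is "released" (reset to 0 here, where the demo would call releasePublisherHandle()) only when all flags are off. Centralizing the check this way is a design suggestion, not how the demo is structured.

```java
// Hypothetical model (no SDK calls): tracks which features use the
// publisher handle and releases it only when none of them does.
final class PublisherHandleOwner {
    private long handle;             // 0 means "not open", as in the demo
    private boolean pushing;         // RTMP push (isPushing)
    private boolean rtspService;     // lightweight RTSP service (isRTSPServiceRunning)
    private boolean rtspPublishing;  // stream published to it (isRTSPPublisherRunning)

    /** Like OpenPushHandle(): reuse the handle if it is already open. */
    long open(long newHandle) {
        if (handle == 0) {
            handle = newHandle;
        }
        return handle;
    }

    void setPushing(boolean on)        { pushing = on;        releaseIfUnused(); }
    void setRtspService(boolean on)    { rtspService = on;    releaseIfUnused(); }
    void setRtspPublishing(boolean on) { rtspPublishing = on; releaseIfUnused(); }

    long handle() {
        return handle;
    }

    private void releaseIfUnused() {
        if (handle != 0 && !pushing && !rtspService && !rtspPublishing) {
            // The demo would call releasePublisherHandle() here.
            handle = 0;
        }
    }
}
```

For example, stopping the RTSP publication while the RTSP service is still running keeps the handle open; stopping the service afterwards releases it.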

Because the processed YUV or RGB data has to be re-encoded, the encoding parameters are configured on the publisher when its handle is opened:

```java
private boolean OpenPushHandle() {
    if (publisherHandle != 0)
    {
        return true;
    }

    publisherHandle = libPublisher.SmartPublisherOpen(myContext, audio_opt, video_opt,
            videoWidth, videoHeight);
    if (publisherHandle == 0) {
        Log.e(TAG, "sdk open failed!");
        return false;
    }

    Log.i(TAG, "publisherHandle=" + publisherHandle);

    int fps = 20;
    int gop = fps * 1;

    int videoEncodeType = 1; // 1: H.264 hardware encoding, 2: H.265 (HEVC) hardware encoding

    if (videoEncodeType == 1) {
        int h264HWKbps = setHardwareEncoderKbps(true, videoWidth, videoHeight);
        h264HWKbps = h264HWKbps * fps / 25; // scale the bitrate to the actual frame rate
        Log.i(TAG, "h264HWKbps: " + h264HWKbps);

        int isSupportH264HWEncoder = libPublisher
                .SetSmartPublisherVideoHWEncoder(publisherHandle, h264HWKbps);
        if (isSupportH264HWEncoder == 0) {
            libPublisher.SetNativeMediaNDK(publisherHandle, 0);
            libPublisher.SetVideoHWEncoderBitrateMode(publisherHandle, 1); // 0:CQ, 1:VBR, 2:CBR
            libPublisher.SetVideoHWEncoderQuality(publisherHandle, 39);
            libPublisher.SetAVCHWEncoderProfile(publisherHandle, 0x08); // 0x01: Baseline, 0x02: Main, 0x08: High
            // libPublisher.SetAVCHWEncoderLevel(publisherHandle, 0x200); // Level 3.1
            // libPublisher.SetAVCHWEncoderLevel(publisherHandle, 0x400); // Level 3.2
            // libPublisher.SetAVCHWEncoderLevel(publisherHandle, 0x800); // Level 4
            libPublisher.SetAVCHWEncoderLevel(publisherHandle, 0x1000); // Level 4.1, enough in most cases
            // libPublisher.SetAVCHWEncoderLevel(publisherHandle, 0x2000); // Level 4.2
            // libPublisher.SetVideoHWEncoderMaxBitrate(publisherHandle, ((long)h264HWKbps)*1300);
            Log.i(TAG, "Great, it supports h.264 hardware encoder!");
        }
    }
    else if (videoEncodeType == 2) {
        int hevcHWKbps = setHardwareEncoderKbps(false, videoWidth, videoHeight);
        hevcHWKbps = hevcHWKbps * fps / 25; // scale the bitrate to the actual frame rate
        Log.i(TAG, "hevcHWKbps: " + hevcHWKbps);

        int isSupportHevcHWEncoder = libPublisher
                .SetSmartPublisherVideoHevcHWEncoder(publisherHandle, hevcHWKbps);
        if (isSupportHevcHWEncoder == 0) {
            libPublisher.SetNativeMediaNDK(publisherHandle, 0);
            libPublisher.SetVideoHWEncoderBitrateMode(publisherHandle, 0); // 0:CQ, 1:VBR, 2:CBR
            libPublisher.SetVideoHWEncoderQuality(publisherHandle, 39);
            // libPublisher.SetVideoHWEncoderMaxBitrate(publisherHandle, ((long)hevcHWKbps)*1200);
            Log.i(TAG, "Great, it supports hevc hardware encoder!");
        }
    }

    libPublisher.SetSmartPublisherEventCallbackV2(publisherHandle, new EventHandlePublisherV2());
    libPublisher.SmartPublisherSetGopInterval(publisherHandle, gop);
    libPublisher.SmartPublisherSetFPS(publisherHandle, fps);

    return true;
}
```
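The two numeric settings in OpenPushHandle() are easier to follow as plain arithmetic. `h264HWKbps * fps / 25` reads as scaling a bitrate chosen for 25 fps to the configured frame rate, and `gop = fps * 1` asks for a GOP of one second's worth of frames. In the hypothetical `EncoderMath` helper below, the 2000 kbps input in the usage note is an illustrative value only; the demo's real base bitrate comes from setHardwareEncoderKbps(), which is not shown.

```java
// Worked arithmetic for the encoder settings in OpenPushHandle().
// The 25 fps reference comes from the demo's "kbps * fps / 25" line;
// the base bitrate itself comes from setHardwareEncoderKbps() (not shown).
final class EncoderMath {
    private EncoderMath() {}

    /** Scales a bitrate chosen for 25 fps to another frame rate (integer division, as in the demo). */
    static int scaleKbpsForFps(int kbpsAt25Fps, int fps) {
        return kbpsAt25Fps * fps / 25;
    }

    /** GOP length in frames for one keyframe every `seconds` seconds. */
    static int gopFrames(int fps, int seconds) {
        return fps * seconds;
    }
}
```

With fps = 20, a 2000 kbps base becomes 1600 kbps and the GOP is 20 frames. A short GOP suits a relay like this one: a player that joins the RTSP stream generally has to wait for the next keyframe before it can start decoding, so a one-second GOP keeps that wait to roughly one second.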

The I420ExternalRender implementation is shown below. Its callbacks receive the decoded YUV data of the RTSP stream; after processing, call the publisher's PostLayerImageI420ByteBuffer() to hand each frame to the lightweight RTSP service or the RTMP push module, which encodes and sends it.

```java
class I420ExternalRender implements NTExternalRender {
    // public static final int NT_FRAME_FORMAT_RGBA = 1;
    // public static final int NT_FRAME_FORMAT_ABGR = 2;
    // public static final int NT_FRAME_FORMAT_I420 = 3;

    private int width_ = 0;
    private int height_ = 0;

    private int y_row_bytes_ = 0;
    private int u_row_bytes_ = 0;
    private int v_row_bytes_ = 0;

    private ByteBuffer y_buffer_ = null;
    private ByteBuffer u_buffer_ = null;
    private ByteBuffer v_buffer_ = null;

    @Override
    public int getNTFrameFormat() {
        Log.i(TAG, "I420ExternalRender::getNTFrameFormat return " + NT_FRAME_FORMAT_I420);
        return NT_FRAME_FORMAT_I420;
    }

    @Override
    public void onNTFrameSizeChanged(int width, int height) {
        width_ = width;
        height_ = height;

        // Row strides are rounded up to a multiple of 16 bytes;
        // the U and V planes are half the width and height (rounded up)
        y_row_bytes_ = (width_ + 15) & (~15);
        u_row_bytes_ = ((width_ + 1) / 2 + 15) & (~15);
        v_row_bytes_ = ((width_ + 1) / 2 + 15) & (~15);

        y_buffer_ = ByteBuffer.allocateDirect(y_row_bytes_ * height_);
        u_buffer_ = ByteBuffer.allocateDirect(u_row_bytes_ * ((height_ + 1) / 2));
        v_buffer_ = ByteBuffer.allocateDirect(v_row_bytes_ * ((height_ + 1) / 2));

        Log.i(TAG, "I420ExternalRender::onNTFrameSizeChanged width_="
                + width_ + " height_=" + height_ + " y_row_bytes_="
                + y_row_bytes_ + " u_row_bytes_=" + u_row_bytes_
                + " v_row_bytes_=" + v_row_bytes_);
    }

    @Override
    public ByteBuffer getNTPlaneByteBuffer(int index) {
        if (index == 0) {
            return y_buffer_;
        } else if (index == 1) {
            return u_buffer_;
        } else if (index == 2) {
            return v_buffer_;
        } else {
            Log.e(TAG, "I420ExternalRender::getNTPlaneByteBuffer index error:" + index);
            return null;
        }
    }

    @Override
    public int getNTPlanePerRowBytes(int index) {
        if (index == 0) {
            return y_row_bytes_;
        } else if (index == 1) {
            return u_row_bytes_;
        } else if (index == 2) {
            return v_row_bytes_;
        } else {
            Log.e(TAG, "I420ExternalRender::getNTPlanePerRowBytes index error:" + index);
            return 0;
        }
    }

    @Override
    public void onNTRenderFrame(int width, int height, long timestamp)
    {
        if (y_buffer_ == null || u_buffer_ == null || v_buffer_ == null)
            return;

        y_buffer_.rewind();
        u_buffer_.rewind();
        v_buffer_.rewind();

        // Process the Y/U/V planes here (analysis, overlays, etc.) before posting them

        if (isPushing || isRTSPPublisherRunning)
        {
            // Hand the frame to the publisher, which encodes it for RTMP push and/or the RTSP service
            libPublisher.PostLayerImageI420ByteBuffer(publisherHandle, 0, 0, 0,
                    y_buffer_, 0, y_row_bytes_,
                    u_buffer_, 0, u_row_bytes_,
                    v_buffer_, 0, v_row_bytes_,
                    width_, height_, 0, 0,
                    960, 540, 0, 0);
        }
    }
}
```
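onNTFrameSizeChanged() pads each plane row to a multiple of 16 bytes, so the plane buffers contain padding at the end of every row. Many analysis routines expect a tightly packed I420 frame instead (width × height luma bytes followed by the two half-resolution chroma planes). The hypothetical `I420Planes` helper below, which is not part of the SDK, reproduces the stride computation and copies the padded planes into one packed array. It is one way to prepare data for the processing step and assumes each ByteBuffer starts at the first pixel of its plane, as the buffers in I420ExternalRender do.

```java
import java.nio.ByteBuffer;

// Hypothetical helper (not part of the SDK). Reproduces the stride rules of
// I420ExternalRender.onNTFrameSizeChanged() and copies the padded planes into
// one tightly packed I420 array (Y, then U, then V, no row padding).
final class I420Planes {
    private I420Planes() {}

    /** Rounds n up to a multiple of 16, same as (n + 15) & ~15. */
    static int align16(int n) {
        return (n + 15) & ~15;
    }

    static int lumaStride(int width) {
        return align16(width);
    }

    static int chromaStride(int width) {
        return align16((width + 1) / 2);
    }

    /**
     * Packs the planes into width*height + 2*ceil(w/2)*ceil(h/2) bytes.
     * Assumes each buffer starts at the first pixel of its plane (index 0).
     */
    static byte[] pack(int width, int height,
                       ByteBuffer y, int yStride,
                       ByteBuffer u, int uStride,
                       ByteBuffer v, int vStride) {
        int chromaWidth = (width + 1) / 2;
        int chromaHeight = (height + 1) / 2;
        byte[] out = new byte[width * height + 2 * chromaWidth * chromaHeight];
        int pos = copyPlane(y, yStride, width, height, out, 0);
        pos = copyPlane(u, uStride, chromaWidth, chromaHeight, out, pos);
        copyPlane(v, vStride, chromaWidth, chromaHeight, out, pos);
        return out;
    }

    /** Copies `rows` rows of `rowBytes` bytes, skipping the padding at the end of each row. */
    private static int copyPlane(ByteBuffer src, int stride, int rowBytes, int rows,
                                 byte[] dst, int pos) {
        ByteBuffer view = src.duplicate(); // independent position; src is left untouched
        for (int row = 0; row < rows; row++) {
            view.position(row * stride);
            view.get(dst, pos, rowBytes);
            pos += rowBytes;
        }
        return pos;
    }
}
```

For a 1366-pixel-wide frame the luma stride is 1376 and the chroma stride 688, so each row carries padding; for 1920 the strides are exactly 1920 and 960. Copying costs one extra pass per frame; for high resolutions, processing the planes in place using the strides avoids that copy.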

If the lightweight RTSP service starts successfully, its RTSP URL is reported through the publisher's event callback:

```java
class EventHandlePublisherV2 implements NTSmartEventCallbackV2 {
    @Override
    public void onNTSmartEventCallbackV2(long handle, int id, long param1, long param2, String param3, String param4, Object param5) {
        Log.i(TAG, "EventHandlePublisherV2: handle=" + handle + " id:" + id);
        String publisher_event = "";

        switch (id) {
            // ... (other event IDs omitted)
            case NTSmartEventID.EVENT_DANIULIVE_ERC_PUBLISHER_RTSP_URL:
                publisher_event = "RTSP service URL: " + param3;
                break;
        }
    }
}
```
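The reported URL combines the device address with the port given to SetRtspServerPort() (8554 above) and the stream name given to SetRtspStreamName() ("stream1"). If you want to display or log the expected URL yourself, for example to compare it with the callback value, the hypothetical `RtspServiceUrl` helper below builds it with `java.net.URI`. The form `rtsp://<device IP>:<port>/<stream name>` is an assumption based on those two settings, not something the SDK documents here.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper: assumes the service URL has the form
// rtsp://<device IP>:<port>/<stream name>, e.g. port 8554 from
// SetRtspServerPort() and "stream1" from SetRtspStreamName().
final class RtspServiceUrl {
    private RtspServiceUrl() {}

    /** Builds the URL; characters not allowed in a URI path are percent-encoded. */
    static String build(String host, int port, String streamName) {
        try {
            return new URI("rtsp", null, host, port, "/" + streamName, null, null).toString();
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("invalid host or stream name", e);
        }
    }
}
```

`RtspServiceUrl.build("192.168.0.100", 8554, "stream1")` yields `rtsp://192.168.0.100:8554/stream1`, the kind of URL the Windows player in the test setup would open; the IP address is a placeholder for the Android device's LAN address.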

Technical summary

The above is the general flow: pull the RTSP stream, process the decoded data, deliver it to the lightweight RTSP service, and let a player pull the stream from that service. As long as the YUV or RGB processing itself adds little delay, the end-to-end latency can stay at the millisecond level, which meets the requirements of most scenarios.