Preface

Large-file upload, resumable upload (resuming from a breakpoint) and instant upload come up again and again as interview topics, yet most people know the names without knowing how they work. Let's explore them together from both the front-end and the back-end perspective.

I believe that if you spend a little time studying this seriously, you will discover... that you have indeed spent some time.

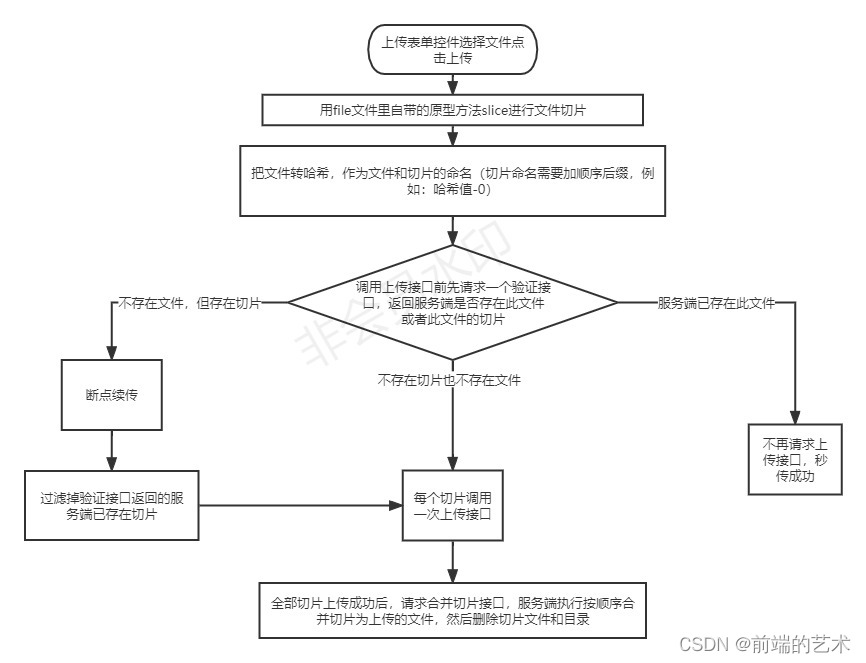

Mind map
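All three ideas from the preface come down to one decision the client makes after asking the server what it already has. The sketch below is a hypothetical pure function (`planUpload` does not appear in the code later in this post). It assumes the server answers in the same `{ shouldUpload, uploadedList }` shape that the `/verify` endpoint in the server code below uses. The chunk names are made up for the example.

```javascript
// Hypothetical helper: choose between instant upload, resumable upload and a
// full upload, based on a /verify-style response { shouldUpload, uploadedList }.
function planUpload(verifyResponse, chunkNames) {
  const { shouldUpload, uploadedList = [] } = verifyResponse;
  // The merged file already exists on the server: nothing to send (instant upload)
  if (!shouldUpload) {
    return { mode: "instant", toSend: [] };
  }
  // Skip every slice the server already stored (resume from the breakpoint)
  const done = new Set(uploadedList);
  const toSend = chunkNames.filter((name) => !done.has(name));
  const mode = toSend.length < chunkNames.length ? "resume" : "full";
  return { mode, toSend };
}

const names = ["abc.mp4-0", "abc.mp4-1", "abc.mp4-2"];
console.log(planUpload({ shouldUpload: false }, names));
console.log(planUpload({ shouldUpload: true, uploadedList: ["abc.mp4-0"] }, names));
console.log(planUpload({ shouldUpload: true, uploadedList: [] }, names));
```

If every slice is already on the server, `toSend` is empty and the client only has to ask for the merge.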

Front-end core code snippets

Slice the file into chunks of a specified size

createChunk(file, size = 512 * 1024) {
  const chunkList = [];
  let cur = 0;
  while (cur < file.size) {
    // Use the slice method to cut the file
    chunkList.push({ file: file.slice(cur, cur + size) });
    cur += size;
  }
  return chunkList;
}
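With the default slice size of 512 * 1024 bytes, a file of n bytes produces ceil(n / 524288) slices, and only the last one can be shorter. You can check this outside the browser with Node.js 18+, whose global `Blob` has the same `slice()` method that a browser `File` inherits. The zero-filled fake file below is an assumption that stands in for a real file input.

```javascript
// Same slicing logic as createChunk above, written with a for loop.
// Runs in Node.js 18+, where Blob is a global.
function createChunk(file, size = 512 * 1024) {
  const chunkList = [];
  for (let cur = 0; cur < file.size; cur += size) {
    // slice() clamps the end to file.size, so the last chunk may be shorter
    chunkList.push({ file: file.slice(cur, cur + size) });
  }
  return chunkList;
}

// Fake 1 MiB + 200 KiB "file" filled with zeros
const fakeFile = new Blob([new Uint8Array(1024 * 1024 + 200 * 1024)]);
const chunks = createChunk(fakeFile);
console.log(chunks.length, chunks.map(({ file }) => file.size));
```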

Hash the file with js-md5 and use the hash (plus the original extension) as the file name

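handleFileChange(e) {
  const file = e.target.files[0];
  const fileReader = new FileReader();
  fileReader.readAsDataURL(file);
  // Arrow function so the callback can use the surrounding variables
  fileReader.onload = (e2) => {
    // md5 of the data URL, plus the original extension, e.g. "<hash>.mp4"
    const hexHash = md5(e2.target.result) + '.' + file.name.split('.').pop();
  };
}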
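Reading the whole file with `readAsDataURL` keeps a base64 copy of it in memory, about a third larger than the file itself, which hurts with large files. MD5 can also be computed incrementally: feeding the slices in order gives the same digest as hashing everything at once. The sketch below uses Node's built-in `crypto` module as a stand-in for js-md5 so it runs outside the browser. That substitution is an assumption. Note also that it hashes raw bytes, while the snippet above hashes the data URL string, so the two approaches produce different names for the same file. Pick one scheme and use it consistently.

```javascript
const crypto = require("crypto");

// Hash slices one after another with a single incremental MD5 context
function md5OfSlices(slices) {
  const hash = crypto.createHash("md5");
  for (const slice of slices) {
    hash.update(slice); // in a browser, each slice would come from its own FileReader read
  }
  return hash.digest("hex");
}

// Fake 1.3 MB "file" cut into 512 KB slices
const data = Buffer.alloc(1300 * 1024, 7);
const sliceSize = 512 * 1024;
const slices = [];
for (let cur = 0; cur < data.length; cur += sliceSize) {
  slices.push(data.subarray(cur, cur + sliceSize));
}

const whole = crypto.createHash("md5").update(data).digest("hex");
console.log(md5OfSlices(slices) === whole); // true
```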

Wrap each slice in a FormData object and build the list of upload requests.

const requestList = noUploadChunks
  .map(({ file, fileName, index, chunkName }) => {
    const formData = new FormData();
    formData.append("file", file);
    formData.append("fileName", fileName);
    formData.append("chunkName", chunkName);
    return { formData, index };
  })
  .map(({ formData, index }) =>
    axiosRequest({
      url: "http://localhost:3000/upload",
      data: formData
    })
  );
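Each slice is sent as a multipart/form-data body with three fields: the binary slice, the hash-based file name and the slice name. The sketch below runs the same mapping in Node.js 18+, which has global `FormData` and `Blob`. `buildRequestList` and `send` are hypothetical names. `send` stands in for `axiosRequest`, so you can inspect what each request would carry without a server.

```javascript
// Hypothetical version of the request-list mapping with an injectable transport
function buildRequestList(chunks, send) {
  return chunks.map(({ file, fileName, chunkName, index }) => {
    const formData = new FormData();
    formData.append("file", file); // the binary slice
    formData.append("fileName", fileName); // hash-based name of the whole file
    formData.append("chunkName", chunkName); // `${fileName}-${index}`
    return send({ url: "http://localhost:3000/upload", data: formData, index });
  });
}

// Fake transport: resolves with a summary of what would have been sent
const fakeSend = async ({ data, index }) => ({
  index,
  chunkName: data.get("chunkName"),
  bytes: data.get("file").size,
});

const chunks = [0, 1].map((index) => ({
  file: new Blob(["x".repeat(10 + index)]),
  fileName: "abc.mp4",
  chunkName: `abc.mp4-${index}`,
  index,
}));

Promise.all(buildRequestList(chunks, fakeSend)).then((results) => console.log(results));
```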

Full front-end code

<template>
  <div>
    <input type="file" @change="handleFileChange" />
    <el-button @click="uploadChunks">Upload</el-button>
    <!-- <el-button @click="pauseUpload">Pause</el-button> -->
    <div style="width: 300px">
      Total progress:
      <el-progress :percentage="tempPercent"></el-progress>
      Slice progress:
      <div v-for="(item, index) in fileObj.chunkList" :key="index">
        <span>{{ item.chunkName }}:</span>
        <el-progress :percentage="item.percent"></el-progress>
      </div>
    </div>
  </div>
</template>

<script>
import axios from "axios";
import md5 from "js-md5";

const CancelToken = axios.CancelToken;
let source = CancelToken.source();

function axiosRequest({
  url,
  method = "post",
  data,
  headers = {},
  onUploadProgress = (e) => e, // progress callback
}) {
  // axios already returns a promise, so it can be returned directly
  return axios[method](url, data, {
    headers,
    onUploadProgress, // incoming monitoring progress callback
    cancelToken: source.token,
  });
}

export default {
  data() {
    return {
      fileObj: {
        file: null,
        name: "", // hash-based file name, set once hashing finishes
        chunkList: [],
      },
      tempPercent: 0,
    };
  },

  methods: {
    handleFileChange(e) {
      const [file] = e.target.files;
      if (!file) return;
      this.fileObj.file = file;
      const chunkList = this.createChunk(file);
      console.log(chunkList); // see what chunkList looks like
      // Get the hash of this file to use as its name
      const fileReader = new FileReader();
      fileReader.readAsDataURL(file);
      // Arrow function keeps `this` pointing at the component (no need for `that`)
      fileReader.onload = (e2) => {
        const hexHash = md5(e2.target.result) + "." + file.name.split(".").pop();
        this.fileObj.name = hexHash;
        this.fileObj.chunkList = chunkList.map(({ file: chunk }, index) => ({
          file: chunk,
          size: chunk.size,
          percent: 0,
          chunkName: `${hexHash}-${index}`,
          fileName: hexHash,
          index,
        }));
      };
    },

    createChunk(file, size = 512 * 1024) {
      const chunkList = [];
      let cur = 0;
      while (cur < file.size) {
        // Use the slice method to cut the file
        chunkList.push({ file: file.slice(cur, cur + size) });
        cur += size;
      }
      return chunkList;
    },

    async uploadChunks() {
      const { uploadedList = [], shouldUpload } = await this.verifyUpload();
      // The merged file already exists on the server: instant upload
      if (!shouldUpload) {
        console.log("Instant upload succeeded");
        this.fileObj.chunkList.forEach((item) => {
          item.percent = 100;
        });
        return;
      }
      // Resume: only upload the slices the server does not have yet and mark the
      // others as done. If every slice is already there, nothing is re-sent and
      // the client goes straight to merging.
      const noUploadChunks = this.fileObj.chunkList.filter((item) => {
        if (uploadedList.includes(item.chunkName)) {
          item.percent = 100;
          return false;
        }
        return true;
      });
      const requestList = noUploadChunks
        .map(({ file, fileName, index, chunkName }) => {
          const formData = new FormData();
          formData.append("file", file);
          formData.append("fileName", fileName);
          formData.append("chunkName", chunkName);
          return { formData, index };
        })
        .map(({ formData, index }) =>
          axiosRequest({
            url: "http://localhost:3000/upload",
            data: formData,
            // incoming callback that tracks this slice's upload progress
            onUploadProgress: this.createProgressHandler(this.fileObj.chunkList[index]),
          })
        );
      await Promise.all(requestList); // send all slice requests concurrently
      await this.mergeChunks();
    },

    createProgressHandler(item) {
      return (e) => {
        // Set the progress percentage of each slice (Math.floor avoids
        // parseInt(String(x)) misreading tiny values such as "1e-7")
        item.percent = Math.floor((e.loaded / e.total) * 100);
      };
    },

    mergeChunks(size = 512 * 1024) {
      // size must match the slice size used by createChunk
      return axiosRequest({
        url: "http://localhost:3000/merge",
        headers: {
          "content-type": "application/json",
        },
        data: JSON.stringify({
          size,
          fileName: this.fileObj.name,
        }),
      });
    },

    pauseUpload() {
      source.cancel("Interrupt upload!");
      source = CancelToken.source(); // Reset the source so that later requests can still be sent
    },
    async verifyUpload() {
      const { data } = await axiosRequest({
        url: "http://localhost:3000/verify",
        headers: {
          "content-type": "application/json",
        },
        data: JSON.stringify({
          fileName: this.fileObj.name,
        }),
      });
      return data;
    },
  },
  computed: {
    totalPercent() {
      const fileObj = this.fileObj;
      if (fileObj.chunkList.length === 0) return 0;
      const loaded = fileObj.chunkList
        .map(({ size, percent }) => size * percent)
        .reduce((pre, next) => pre + next);
      return parseInt((loaded / fileObj.file.size).toFixed(2));
    },
  },
  watch: {
    totalPercent(newVal) {
      if (newVal > this.tempPercent) this.tempPercent = newVal;
    },
  },
};

</script>

<style lang="scss" scoped></style>
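The total progress bar is a size-weighted average of the slice percentages. Each slice contributes size * percent, and dividing the sum by the file size brings it back to a 0 to 100 scale. The watcher then only lets the displayed value grow, so the bar should not jump backwards when, for example, slices restart from 0 after a pause and resume. Here is a standalone sketch of both pieces. The function names are hypothetical, and the result is rounded down to a whole percent.

```javascript
// Hypothetical standalone version of the totalPercent computed property
function totalPercent(chunkList, fileSize) {
  if (chunkList.length === 0) return 0;
  // Each slice contributes size * percent; dividing by fileSize gives 0..100
  const loaded = chunkList
    .map(({ size, percent }) => size * percent)
    .reduce((pre, next) => pre + next, 0);
  return Math.floor(loaded / fileSize);
}

// Hypothetical version of the watcher: the displayed value only moves forward
function nextDisplayed(current, computed) {
  return computed > current ? computed : current;
}

const chunkList = [
  { size: 512 * 1024, percent: 100 },
  { size: 512 * 1024, percent: 50 },
  { size: 200 * 1024, percent: 0 },
];
const fileSize = 1224 * 1024; // sum of the slice sizes
console.log(totalPercent(chunkList, fileSize)); // 62
console.log(nextDisplayed(62, 40)); // 62
```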

Server-side receiving code (a simulated back end in Node.js)

const http = require("http");
const path = require("path");
const fse = require("fs-extra");
const multiparty = require("multiparty");

const server = http.createServer();
const UPLOAD_DIR = path.resolve(__dirname, ".", `qiepian`); // slice storage directory

server.on("request", async (req, res) => {
  res.setHeader("Access-Control-Allow-Origin", "*");
  res.setHeader("Access-Control-Allow-Headers", "*");
  // Every endpoint below answers with JSON
  res.setHeader("Content-Type", "application/json; charset=utf-8");
  // Answer the CORS preflight request
  if (req.method === "OPTIONS") {
    res.statusCode = 200;
    res.end();
    return;
  }

  console.log(req.url);
  if (req.url === "/upload") {
    const multipart = new multiparty.Form();
    multipart.parse(req, async (err, fields, files) => {
      if (err) {
        console.log("errrrr", err);
        // Always answer, otherwise the client request hangs
        res.statusCode = 500;
        res.end(JSON.stringify({ code: 1, message: "Slice upload failed" }));
        return;
      }
      const [file] = files.file;
      const [fileName] = fields.fileName;
      const [chunkName] = fields.chunkName;
      // Folder where the slices are saved, named after the file hash, e.g. <hash>.flac-chunks
      const chunkDir = path.resolve(UPLOAD_DIR, `${fileName}-chunks`);
      // Create the slice directory if it does not exist yet
      if (!fse.existsSync(chunkDir)) {
        await fse.mkdirs(chunkDir);
      }
      // Move the slice into the slice folder, replacing a copy left by an earlier attempt
      await fse.move(file.path, `${chunkDir}/${chunkName}`, { overwrite: true });
      res.end(
        JSON.stringify({
          code: 0,
          message: "Slice uploaded successfully",
        })
      );
    });
  }

  // Helpers for /merge and /verify
  // Read the request body and parse it as JSON
  const resolvePost = (req) =>
    new Promise((resolve) => {
      let chunk = "";
      req.on("data", (data) => {
        chunk += data;
      });
      req.on("end", () => {
        resolve(JSON.parse(chunk));
      });
    });

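  // Copy one slice into the target write stream
  const pipeStream = (chunkPath, writeStream) =>
    new Promise((resolve, reject) => {
      console.log("path", chunkPath);
      const readStream = fse.createReadStream(chunkPath);
      readStream.on("error", reject);
      writeStream.on("error", reject);
      // Resolve only once the slice has been flushed to the target file
      writeStream.on("finish", () => {
        // fse.unlinkSync(chunkPath); // delete slice file
        resolve();
      });
      readStream.pipe(writeStream);
    });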

  // Merge slices
  const mergeFileChunk = async (filePath, fileName, size) => {
    // filePath: where the merged file is written
    const chunkDir = path.resolve(UPLOAD_DIR, `${fileName}-chunks`);
    // Get all the slice names in the slice folder as an array
    const chunkPaths = await fse.readdir(chunkDir);
    // Sort numerically by the slice index after the last "-",
    // because readdir does not guarantee this order
    chunkPaths.sort((a, b) => a.split("-").pop() - b.split("-").pop());
    // Create (or empty) the target file once, then open every stream with "r+"
    // so that no stream truncates what another one has already written
    await fse.writeFile(filePath, "");
    const arr = chunkPaths.map((chunkPath, index) =>
      pipeStream(
        path.resolve(chunkDir, chunkPath),
        // Each slice starts at its own byte offset in the target file
        fse.createWriteStream(filePath, { flags: "r+", start: index * size })
      )
    );
    await Promise.all(arr);
  };

  if (req.url === "/merge") {
    const data = await resolvePost(req);
    const { fileName, size } = data;
    const filePath = path.resolve(UPLOAD_DIR, fileName);
    await mergeFileChunk(filePath, fileName, size);
    res.end(
      JSON.stringify({
        code: 0,
        message: "File merged successfully",
      })
    );
  }

  if (req.url === "/verify") {
    // Return the list of slice names that are already uploaded
    const createUploadedList = async (fileName) =>
      fse.existsSync(path.resolve(UPLOAD_DIR, fileName))
        ? await fse.readdir(path.resolve(UPLOAD_DIR, fileName))
        : [];
    const data = await resolvePost(req);
    const { fileName } = data;
    const filePath = path.resolve(UPLOAD_DIR, fileName);
    console.log(filePath);
    // The merged file already exists: the client can skip the upload (instant upload)
    if (fse.existsSync(filePath)) {
      res.end(
        JSON.stringify({
          shouldUpload: false,
        })
      );
    } else {
      res.end(
        JSON.stringify({
          shouldUpload: true,
          uploadedList: await createUploadedList(`${fileName}-chunks`),
        })
      );
    }
  }

});

server.listen(3000, () => console.log("Listening on port 3000"));
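The merge step relies on a simple invariant: slice i starts at byte i * size of the final file. If the index is parsed from the slice name, the slices can be written in any order. The sketch below shows this with `fs/promises` positional writes on temporary files. `mergeAtOffsets`, the demo text and the tiny slice size are assumptions for illustration and are not part of the server above.

```javascript
const fs = require("fs/promises");
const os = require("os");
const path = require("path");

// Hypothetical helper: write every slice at offset index * size of targetPath.
// The index is parsed from the slice name ("<name>-<index>"), so input order does not matter.
async function mergeAtOffsets(chunkPaths, targetPath, size) {
  await fs.writeFile(targetPath, ""); // create or empty the target once
  const target = await fs.open(targetPath, "r+"); // "r+" writes without truncating
  try {
    for (const chunkPath of chunkPaths) {
      const index = Number(path.basename(chunkPath).split("-").pop());
      const data = await fs.readFile(chunkPath);
      await target.write(data, 0, data.length, index * size);
    }
  } finally {
    await target.close();
  }
}

async function demo() {
  const dir = await fs.mkdtemp(path.join(os.tmpdir(), "merge-"));
  const original = Buffer.from("The quick brown fox jumps over the lazy dog");
  const size = 8; // tiny slice size so the demo produces several slices
  const chunkPaths = [];
  for (let i = 0; i * size < original.length; i++) {
    const chunkPath = path.join(dir, `abc.txt-${i}`);
    await fs.writeFile(chunkPath, original.subarray(i * size, (i + 1) * size));
    chunkPaths.push(chunkPath);
  }
  chunkPaths.reverse(); // simulate a directory listing in an unhelpful order
  const targetPath = path.join(dir, "abc.txt");
  try {
    await mergeAtOffsets(chunkPaths, targetPath, size);
    const merged = await fs.readFile(targetPath);
    return merged.equals(original);
  } finally {
    await fs.rm(dir, { recursive: true, force: true });
  }
}

demo().then((ok) => console.log(ok)); // true
```

Because each slice's offset comes from its own name, this variant does not need the sort step. The server above keeps the sort and uses the array position instead, which gives the same offsets as long as no slice is missing.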

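Demo test

- Install the missing dependencies with npm (for example axios and js-md5 for the front end, fs-extra and multiparty for the back end).

- Run the front-end code.

- Run the back-end code with node xxx.js.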