Table of Contents

1. Project Scenario

2. Problem Description

3. Cause Analysis

4. Solution

1. Project Scenario

After installing Spark and Anaconda, I wanted to write Spark programs in Python. When I ran my Python program with the `python` command, it failed with an error. Here is how I solved it.

2. Problem Description

The Spark program I wrote:

from pyspark import SparkContext

sc = SparkContext('local', 'test')

logFile = "file:///usr/local/spark/README.md"

logData = sc.textFile(logFile, 2).cache()

numAs = logData.filter(lambda line: 'a' in line).count()

numBs = logData.filter(lambda line: 'b' in line).count()

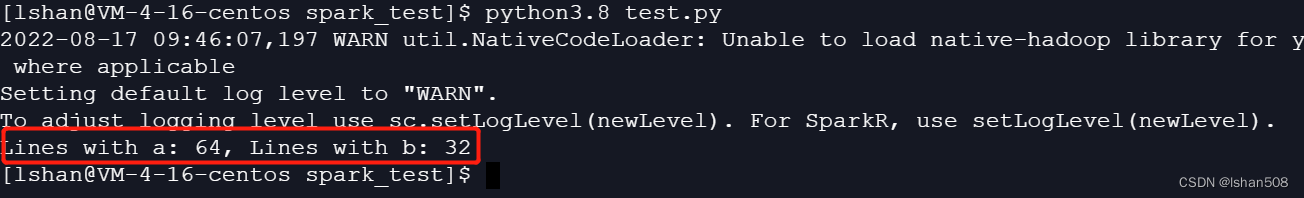

print('Lines with a: %s, Lines with b: %s' % (numAs, numBs))

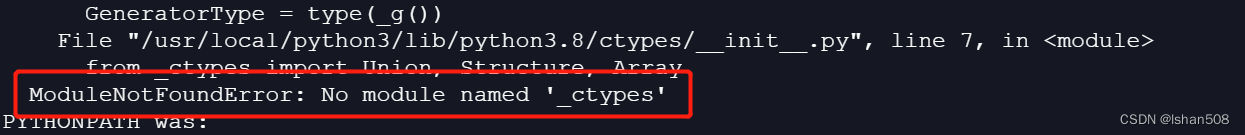

Running the program with the `python` command failed with an error.

3. Cause Analysis

After looking into it, I found that the CentOS 7 system was missing the development package of libffi (the foreign function interface library), `libffi-devel`. This library is needed when the Spark program runs, so its absence caused the error.

Reference article: link
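Python's standard `ctypes` module is built on top of libffi, so one way to check whether a given interpreter is affected is to test whether its `_ctypes` extension can be loaded. The sketch below is only a diagnostic under that assumption (the exact error text from the screenshot is not reproduced here), and `has_ctypes_support` is a hypothetical helper name. Run it with the same `python` you use for the Spark program.

```python
import importlib.util
import sys


def has_ctypes_support() -> bool:
    """Hypothetical helper: True if this interpreter's libffi-backed _ctypes loads."""
    # find_spec returns None when a top-level module cannot be located at all.
    if importlib.util.find_spec("_ctypes") is None:
        return False
    try:
        # The extension may exist on disk but still fail to load (e.g. missing libffi).
        import ctypes  # noqa: F401
    except ImportError:
        return False
    return True


if __name__ == "__main__":
    status = "ctypes OK" if has_ctypes_support() else "ctypes MISSING (check libffi)"
    print(sys.executable, "->", status)
```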

4. Solution

1. Install the libffi development package (`libffi-devel`):

```shell
yum install libffi-devel -y
```

2. Reinstall Anaconda. The environment variables you have already configured do not need to change; just delete the anaconda3 directory and install Anaconda again.

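2.1. Delete the old installation

```shell
rm -rf anaconda3    # run in the directory that contains anaconda3
```

2.2. Reinstall

```shell
bash ...    # replace ... with the file name of your own Anaconda installer
```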
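Before running the program again, both steps can be checked from Python. This is a minimal sketch under stated assumptions: it assumes an rpm-based system such as CentOS (it returns `None` where `rpm` is unavailable), and the Anaconda check is only a heuristic that looks for a directory named `anaconda3` in the path of the `python` found on `PATH`. `rpm_package_installed` and `is_anaconda_python` are hypothetical helper names.

```python
import os
import shutil
import subprocess
from typing import Optional


def rpm_package_installed(name: str) -> Optional[bool]:
    """Hypothetical helper: True/False if rpm can answer, None if rpm is unavailable."""
    rpm = shutil.which("rpm")
    if rpm is None:
        return None  # not an rpm-based system such as CentOS
    # `rpm -q NAME` exits with status 0 only when NAME is installed.
    result = subprocess.run([rpm, "-q", name], capture_output=True, text=True)
    return result.returncode == 0


def is_anaconda_python(path: Optional[str], marker: str = "anaconda3") -> bool:
    """Hypothetical heuristic: True if one directory in the path is named `marker`."""
    return path is not None and marker in path.split(os.sep)


if __name__ == "__main__":
    # Step 1: is libffi-devel present?
    print("libffi-devel installed:", rpm_package_installed("libffi-devel"))
    # Step 2: does the unchanged PATH still resolve `python` to the reinstalled Anaconda?
    python_path = shutil.which("python")
    print("python on PATH:", python_path, "| Anaconda:", is_anaconda_python(python_path))
```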

After that, running the Python file again succeeds.
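Once the program runs, its two counts can be sanity-checked without Spark. The hypothetical `count_a_b` helper below mirrors the two `filter(...).count()` calls in plain Python. It assumes the README path from the example, and that splitting the file with `str.splitlines` matches how Spark's `textFile` splits it into lines, which may not hold for unusual line endings.

```python
import os
from typing import Iterable, Tuple


def count_a_b(lines: Iterable[str]) -> Tuple[int, int]:
    """Hypothetical helper: count lines containing 'a' and lines containing 'b'."""
    num_as = num_bs = 0
    for line in lines:
        if "a" in line:
            num_as += 1
        if "b" in line:
            num_bs += 1
    return num_as, num_bs


if __name__ == "__main__":
    path = "/usr/local/spark/README.md"  # same file as the Spark example; adjust if needed
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            lines = f.read().splitlines()
        print("Lines with a: %s, Lines with b: %s" % count_a_b(lines))
```

If both programs print the same numbers, the PySpark setup is reading and filtering the file as expected.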