1. General meaning

The smaller the loss, the better

Popular science: Back propagation means trying to adjust the parameters in the network process so that the final loss will become smaller (because the parameters are derived from loss, which is in the opposite order to the network, so it is called back propagation), and The understanding of gradient can be directly regarded as “slope”

The meaning of the loss function:

2. Read the documentation

1, L1–loss

torch.nn.L1Loss(size_average=None, reduce=None, reduction='mean')

———————— ———————————————–

———————— ———————————————–

Look at input and output

Input:

1. N: batch size

2. , can be data of any dimension

Template target:

Same form as input

Output, if reduction is not specified, has the same form as input

Look at the code

(1) Create new data

(2) Use reshape to expand the dimensions to four dimensions

import torch.nn import torch from torch.nn import L1Loss inputs=torch.tensor([1,2,3]) targets=torch.tensor([1,2,5]) print(inputs.shape) inputs=torch.reshape(inputs,[1,1,1,3]) targets=torch.reshape(targets,[1,1,1,3]) print(inputs.shape) loss=L1Loss() result=loss(inputs,targets) print(result)

An error is reported, saying that our numerical type does not meet the requirements.

If you want floating point type, then we modify the type and use dtype

inputs=torch.tensor([1,2,3],dtype=torch.float32) targets=torch.tensor([1,2,5],dtype=torch.float32)

This is the complete code:

import torch.nn import torch from torch.nn import L1Loss inputs=torch.tensor([1,2,3],dtype=torch.float32) targets=torch.tensor([1,2,5],dtype=torch.float32) print(inputs.shape) inputs=torch.reshape(inputs,[1,1,1,3]) targets=torch.reshape(targets,[1,1,1,3]) print(inputs.shape) loss=L1Loss() result=loss(inputs,targets) print(result)

You can see that the result calculated through the loss function is exactly 2/3=0.6667

Now we specify reduction as summation

loss=L1Loss(reduction="sum") result=loss(inputs,targets) print(result)

The result is obviously 2

2. MSEloss

torch.nn.MSELoss(size_average=None, reduce=None, reduction=mean’)

Look at the code

import torch import torch.nn from torch.nn import MSELoss inputs=torch.tensor([1,2,3],dtype=torch.float32) targets=torch.tensor([1,2,5],dtype=torch.float32) print(inputs.shape) inputs=torch.reshape(inputs,[1,1,1,3]) targets=torch.reshape(targets,[1,1,1,3]) print(inputs.shape) loss=MSELoss() result=loss(inputs,targets) print(result)

The output result is 1.333, which is consistent with our calculation.

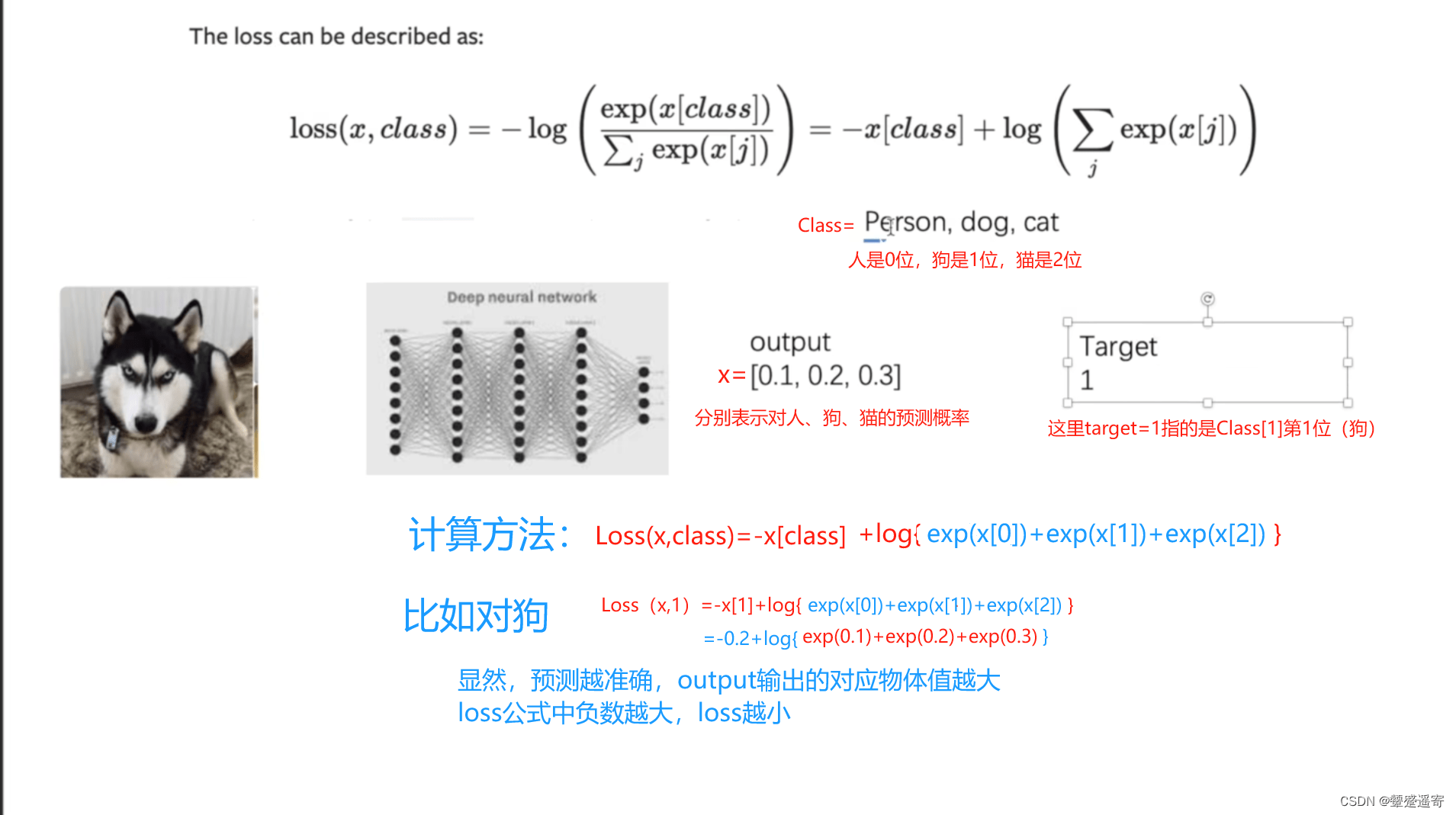

3. CrossEntropyLoss

Cross entropy: a loss function commonly used for classification

t is useful when training a classification problem with C classes. Commonly used for training classification problems (the number of categories is C)

Calculated as follows;

Give examples

Look at the code

First look at the shapes required in the document

import torch from torch.nn import CrossEntropyLoss x=torch.tensor([0.1,0.2,0.3]) where x is one-dimensional y=torch.tensor([1]) print(x.shape) x=torch.reshape(x,(1,3)) print(x.shape) //Because x is the input in CrossEntropyLoss, the form is two-dimensional (batchisize, number of categories) y is target, so we need to turn x into two dimensions // loss_cross=CrossEntropyLoss() result_loss=loss_cross(x,y) print(result_loss) Output: torch.Size([3]) torch.Size([1, 3]) tensor(1.1019)

**For dog calculation formula:

It should be noted here that the log in the document is actually the natural logarithm of ln**

=-0.2 + ln(exp(0.1) + exp(0.2) + exp(0.3))

Correct calculation

3.1 Loss function practice

1. First copy in the neural network (sequential) built in the last study

from torch.nn import Sequential

from torch import nn

from torch.nn import Conv2d,MaxPool2d,Flatten,Linear

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.model1=Sequential(

Conv2d(in_channels=3,out_channels=32,kernel_size=5,stride=1,padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, 1, 2),

MaxPool2d(2),

Conv2d(32, 64, 5, 1, 2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self,x):

x=self.model1(x)

return x

2. Import cifar data set

from torch.utils.data import DataLoader

dataset=torchvision.datasets.CIFAR10("./P23_dataset",train=False,transform=torchvision.transforms.ToTensor(),

download=True)

dataloader=DataLoader(dataset,batch_size=64)

3. The for loop takes out imgs and targets. Then operate through neural network

tudui=Tudui()

for data in dataloader:

imgs,targets=data

output=tudui(imgs)

print(output)

print(targets)

4. Final:

from torch.nn import Sequential

from torch import nn

from torch.nn import Conv2d,MaxPool2d,Flatten,Linear

import torchvision

from torch.utils.data import DataLoader

dataset=torchvision.datasets.CIFAR10("./P23_dataset",train=False,transform=torchvision.transforms.ToTensor(),

download=True)

dataloader=DataLoader(dataset,batch_size=64)

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.model1=Sequential(

Conv2d(in_channels=3,out_channels=32,kernel_size=5,stride=1,padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, 1, 2),

MaxPool2d(2),

Conv2d(32, 64, 5, 1, 2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self,x):

x=self.model1(x)

return x

tudui=Tudui()

for data in dataloader:

imgs,targets=data

output=tudui(imgs)

print(output)

print(targets)

Because the batchsize is set to have more data, we changed it to 1

Look at the output results again

tensor([[ 0.0835, -0.0427, -0.1136, 0.0751, -0.1241, 0.0557, 0.0384, 0.1432,

-0.0817, 0.0711]], grad_fn=<AddmmBackward0>)

tensor([1])

tensor([[ 0.0749, -0.0500, -0.1154, 0.0736, -0.1187, 0.0532, 0.0397, 0.1437,

-0.1071, 0.0638]], grad_fn=<AddmmBackward0>)

tensor([7])

//You can see that a picture will have 10 outputs after passing through the neural network. The output values represent the linear values of the neural network for 10 different types of things.

! ! The Danmaku master learned that because there is no softmax layer, no normalization, and no activation function, the output is not probability! ~! !

//tensor[1] and tensor[7] are the serial numbers in the class list

5. Use the cross entropy in the loss function we learned today

loss=nn.CrossEntropyLoss()

for data in dataloader:

imgs,targets=data

output=tudui(imgs)

result_loss=loss(output,targets)

print(output)

print(targets)

print(result_loss)

This calculates the error between the actual output and the target

finally:

from torch.nn import Sequential

from torch import nn

from torch.nn import Conv2d,MaxPool2d,Flatten,Linear

import torchvision

from torch.utils.data import DataLoader

dataset=torchvision.datasets.CIFAR10("./P23_dataset",train=False,transform=torchvision.transforms.ToTensor(),

download=True)

dataloader=DataLoader(dataset,batch_size=1)

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.model1=Sequential(

Conv2d(in_channels=3,out_channels=32,kernel_size=5,stride=1,padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, 1, 2),

MaxPool2d(2),

Conv2d(32, 64, 5, 1, 2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self,x):

x=self.model1(x)

return x

tudui=Tudui()

loss=nn.CrossEntropyLoss()

for data in dataloader:

imgs,targets=data

output=tudui(imgs)

result_loss=loss(output,targets)

print(output)

print(targets)

print(result_loss)

3.2 Backpropagation practice

Popular science: Back propagation means trying to adjust the parameters in the network process so that the final loss will become smaller (because the parameters are derived from loss, which is in the opposite order to the network, so it is called back propagation), and The understanding of gradient can be directly regarded as “slope”

1. Add backpropagation

Note that you add it to your own loss: result_loss.backward()

Instead of the inherited loss function

loss=nn.CrossEntropyLoss()

for data in dataloader:

imgs,targets=data

output=tudui(imgs)

result_loss=loss(output,targets)

result_loss.backward()

print("ok")

2. Add breakpoints to debug

in the following variables:

tudui–model1–protected attributes–our neural network can be found under modules

Where 0 is the first convolutional layer:

Under the weight, I found that there is a grad gradient, but there is no value yet

Then we press continue running;

The code executed is:

result_loss.backward()

We found that the gradient has data here

This allows you to calculate the gradient

In order to optimize the loss

Final code:

from torch.nn import Sequential

from torch import nn

from torch.nn import Conv2d,MaxPool2d,Flatten,Linear

import torchvision

from torch.utils.data import DataLoader

dataset=torchvision.datasets.CIFAR10("./P23_dataset",train=False,transform=torchvision.transforms.ToTensor(),

download=True)

dataloader=DataLoader(dataset,batch_size=1)

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.model1=Sequential(

Conv2d(in_channels=3,out_channels=32,kernel_size=5,stride=1,padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, 1, 2),

MaxPool2d(2),

Conv2d(32, 64, 5, 1, 2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self,x):

x=self.model1(x)

return x

tudui=Tudui()

loss=nn.CrossEntropyLoss()

for data in dataloader:

imgs,targets=data

output=tudui(imgs)

result_loss=loss(output,targets)

result_loss.backward()

print("ok")