Large language models (LLMs) have revolutionized natural language processing (NLP) tasks. They change the way we interact with and process text data. These powerful AI models, such as OpenAI’s GPT-4, have changed the way human-like text is understood and generated, leading to numerous breakthrough applications across a variety of industries.

LangChain is an open source framework for building applications based on large language models such as GPT. It enables applications to connect language models to other data sources and allows language models to interact with their environment.

In this blog, we will discuss the application of LangChain in LLM-based application development. With prompt LLM, it is now possible to develop AI applications faster than ever before. LLM-based applications require multiple prompts and output parsing, so we need to write a lot of code for this. LangChain makes this development process easier by leveraging fundamental abstractions found in NLP application development. The content of this blog is mainly based on the short course LangChain for LLM application development.

LangChain Framework OverviewLangChain is an open source framework for developing applications. It combines large language models (such as GPT-4) with external data. LangChain is available in Python or JavaScript (TypeScript) packages. LangChain focuses on combination and modularity. It has modular components where individual components can be used in combination with each other or separately. LangChain can be applied to multiple use cases and its modular components can be combined for more complete end-to-end applications.

Key components of LangChainLangChain emphasizes flexibility and modularity. It divides the natural language processing pipeline into independent modular components, allowing developers to customize the workflow according to their needs. The LangChain framework can be divided into six modules, each of which allows different aspects of interaction with LLM.

Model:

-

LLMs – 20+ integrations

-

Chat Models

-

Text Embedding Models – 10+ Integration Tips:

-

prompt template

-

Output parser – 5+ integrated

-

Example selector – 10+ integrations Index:

-

Document Loader: 50+ integrations

-

Text splitter: 10+ integrated

-

Vector spaces: 10+ integrated

-

Retrieval: 5 + Integration/Implementation Chain:

-

Prompt + LLM + Output parsing

-

Can be used as a building block for longer chains

-

More application-specific chains: 20+ types

-

Retrieval: 5 + Integration/Implementation Agent:

-

An agent is an end-to-end use case type that uses the model as an inference engine

-

Agent type: 5 + type

-

Agent Toolkit: 10+ implementations

Models Models are the core element of any language model application. Model refers to the language model that supports LLM. LangChain provides the building blocks to interface and integrate with any language model. LangChain provides interfaces and integrations for two types of models:

LLMs – Models that take as input a text string and return a text string Chat Models – Models that are powered by a language model but take as input a list of chat messages and return chat messages.

# This is langchain's abstraction for chatGPT API Endpoint</code><code>from langchain.chat_models import ChatOpenAI

# To control the randomness and creativity of the generated text by an LLM, </code><code># use temperature = 0.0</code><code>chat = ChatOpenAI(temperature=0.0)

Prompts are a new way of programming models. Hints refer to the style of creating inputs to be passed to the model. Prompts are usually made up of multiple components. Prompt templates and sample selectors provide key classes and functions to make it easy to build and use prompts.

We will define a template string and create a prompt template from LangChain using that template string and ChatPromptTemplate.

Prompt Template

# Define a template string

template_string = """Translate the text that is delimited by triple backticks \

into a style that is {style}. text: ```{text}```

"""

# Create a prompt template using above template string</code><code>from langchain.prompts import ChatPromptTemplate</code><code>prompt_template = ChatPromptTemplate.from_template(template_string

The above prompt_template has two fields, namely style and text. We can also extract the original template string from this prompt template. Now, if we want to translate text to some other style, we need to define our translation style and text.

customer_style = """American English in a calm and respectful tone """ customer_email = """ Arrr, I be fuming that me blender lid flew off and splattered me kitchen walls \ with smoothie! And to make matters worse, the warranty don't cover the cost of \ cleaning up me kitchen. I need yer help right now, matey! """

Here we set the style to American English, with a calm and respectful tone. We specify the prompt using an f-string directive that will translate the text enclosed in three backticks into a specific style, and then pass the above style (customer style) and text (customer email) to LLM for text translation.

# customer_message will generate the prompt and it will be passed into

# the llm to get a response.

customer_messages = prompt_template.format_messages(

style=customer_style,

text=customer_email)

# Call the LLM to translate to the style of the customer message.

customer_response = chat(customer_messages)

When we build complex applications, the tips can become quite long and detailed. Instead of using f-strings, we use hint templates because hint templates are useful abstractions that help us reuse good hints. We can create and reuse prompt templates and specify output styles and text for the model to work with.

LangChain provides tips for common operations, such as summarizing or answering questions, or connecting to a SQL database or connecting to different APIs. So by using some of LangChain’s built-in hints, we can quickly get a running application without having to design the hints ourselves.

Output Parser Another aspect of LangChain’s prompt library is that it also supports output parsing. Output parsers help obtain structured information from the output of language models. The output parser involves parsing the model’s output into a more structured format so that we can use the output to perform downstream tasks.

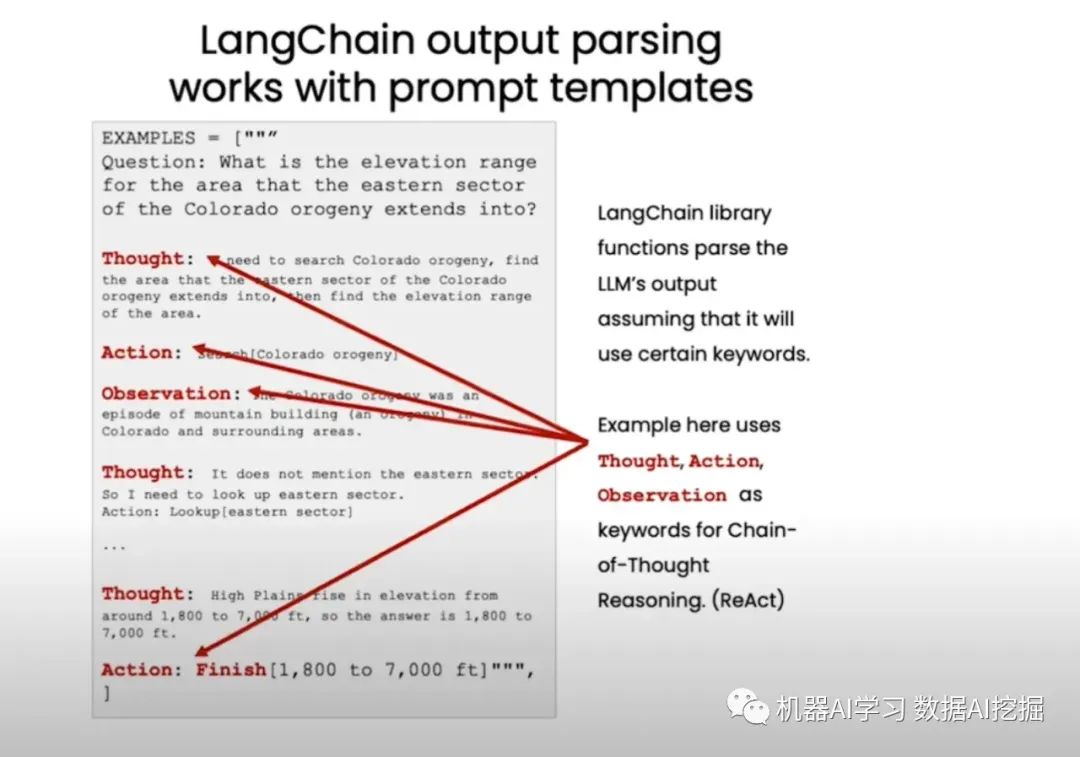

When we use LLMs to build complex applications, we often instruct the LLM to generate its output in a specific format, such as using specific keywords. LangChain’s library functions assume that LLM will use certain keywords to parse its output.

We can have an LLM output JSON and we will parse that output using LangChain like this:

We need to first define how we want the LLM output to be formatted. In this case, we define a Python dictionary with fields mentioning whether the product is a gift, the number of days required for delivery, and whether the price is affordable.

# Following is one example of the desired output.

{

"gift": False,

"delivery_days": 5,

"price_value": "pretty affordable!"

}

We can include customer reviews in the three backticks mentioned below. We can define the following comment template: ?

# This is an example of customer review and a template that try to get the desired output

customer_review = """\

Need to be actual review

"""

review_template = """\

For the following text, extract the following information:

gift: Was the item purchased as a gift for someone else? \

Answer True if yes, False if not or unknown.

delivery_days: How many days did it take for the product \

to arrive? If this information is not found, output -1.

price_value: Extract any sentences about the value or price,\

and output them as a comma separated Python list.

Format the output as JSON with the following keys:

gift

delivery_days

price_value

text: {text}

"""

# This is an example of customer review and a template that try to get the desired output

customer_review = """\

Need to be actual review

"""

review_template = """\

For the following text, extract the following information:

gift: Was the item purchased as a gift for someone else? \

Answer True if yes, False if not or unknown.

delivery_days: How many days did it take for the product \

to arrive? If this information is not found, output -1.

price_value: Extract any sentences about the value or price,\

and output them as a comma separated Python list.

Format the output as JSON with the following keys:

gift

delivery_days

price_value

text: {text}

"""

# We will wrap all review template, customer review in langchain to get output

# in desired format. We will have prompt template created from review template.

from langchain.prompts import ChatPromptTemplate

prompt_template = ChatPromptTemplate.from_template(review_template)

print(prompt_template)

# Create messages using prompt templates created earlier and customer review.

# Finally, we pass messgaes to OpenAI endpoint to get response.

messages = prompt_template.format_messages(text=customer_review)

chat = ChatOpenAI(temperature=0.0)

response = chat(messages)

print(response.content)

The above response is still not a dictionary, but a string. We need to use a Python dictionary to parse the LLM output string into a dictionary. We need to define a ResponseSchema for each field item in the Python dictionary. For the sake of brevity, I have not provided these code snippets. They can be found in my GitHub notebook. This is a very efficient way to parse LLM output into a Python dictionary, making it easier to use in downstream processing.

ReAct Framework

In the above example, LLM uses keywords such as “thought”, “action” and “observation” to perform a mental reasoning chain using a framework called ReAct. “Thoughts” are what LLM thinks. By giving LLM space to think, LLM can get more accurate conclusions. “Action” is a keyword to perform a specific action, while “observation” is a keyword to show what LLM learned from a specific action. If we have a hint that instructs LLM to use these specific keywords (such as thoughts, actions, and observations), then these keywords can be used in conjunction with the parser to extract text tagged with these keywords.

Memory Large language models cannot remember previous conversations.

When you interact with these models, they naturally won’t remember what you said before or all previous conversations, which is a problem when you build some applications (like chatbots) and want to have conversations with them.

With models, hints and parsers, we can reuse our own hint templates, share hint templates with others, or use LangChain’s built-in hint templates, which can be combined with output parsers so that we get output in a specific format, and Have the parser parse that output and store it in a specific dictionary or other data structure, making downstream processing easier.

I will discuss chains and proxies in my next blog. I will also discuss how to do question answering in our data in another of my blogs. Finally, we can see that by prompting LLMs or large language models, it is now more possible than ever to develop faster AI applications. But an application may need to prompt LLM multiple times and parse its output, so a lot of glue code needs to be written. Langchain helps simplify this process.