Foreword

- This article uses the OpenCV and Dlib libraries with C++ to implement face liveness detection, including blink detection, mouth-opening detection, and head-shaking detection, which can distinguish a static picture from a live person.

Effect display

Dlib library introduction

- dlib is an open source C++ machine learning library that provides algorithms and tools for image processing, face detection, face recognition, object detection, image annotation, and related tasks. It is written in modern C++ with template metaprogramming techniques and is highly portable and extensible.

- The main features of the dlib library include:

- Provides a series of efficient machine learning algorithms, such as support vector machines, maximum margin classifiers, random forests, etc.

- Provides algorithms for image processing, such as image filtering, image transformation, edge detection, etc.

- Provides algorithms for face detection and face recognition with high accuracy and performance.

- Provides algorithms for object detection and tracking, such as HOG features and cascade classifiers.

- Provides algorithms for image annotation and image segmentation, such as conditional random fields.

- Supports multi-threading and parallel computing, making full use of the performance of multi-core processors.

- With rich documentation and sample code, it is easy to learn and use.

- The dlib library is widely used in computer vision, pattern recognition, and machine learning. It is a powerful and easy-to-use machine learning library that provides both C++ and Python interfaces.

OpenCV library introduction

- OpenCV is a cross-platform, open source computer vision library released under the BSD license (Apache 2.0 since version 4.5.0). It offers a rich set of commonly used image processing functions, is written in C/C++, and runs on Linux, Windows, and macOS. It makes it quick to implement image processing and recognition tasks.

- This project mainly uses OpenCV to open the camera and read video frames.

Face recognition

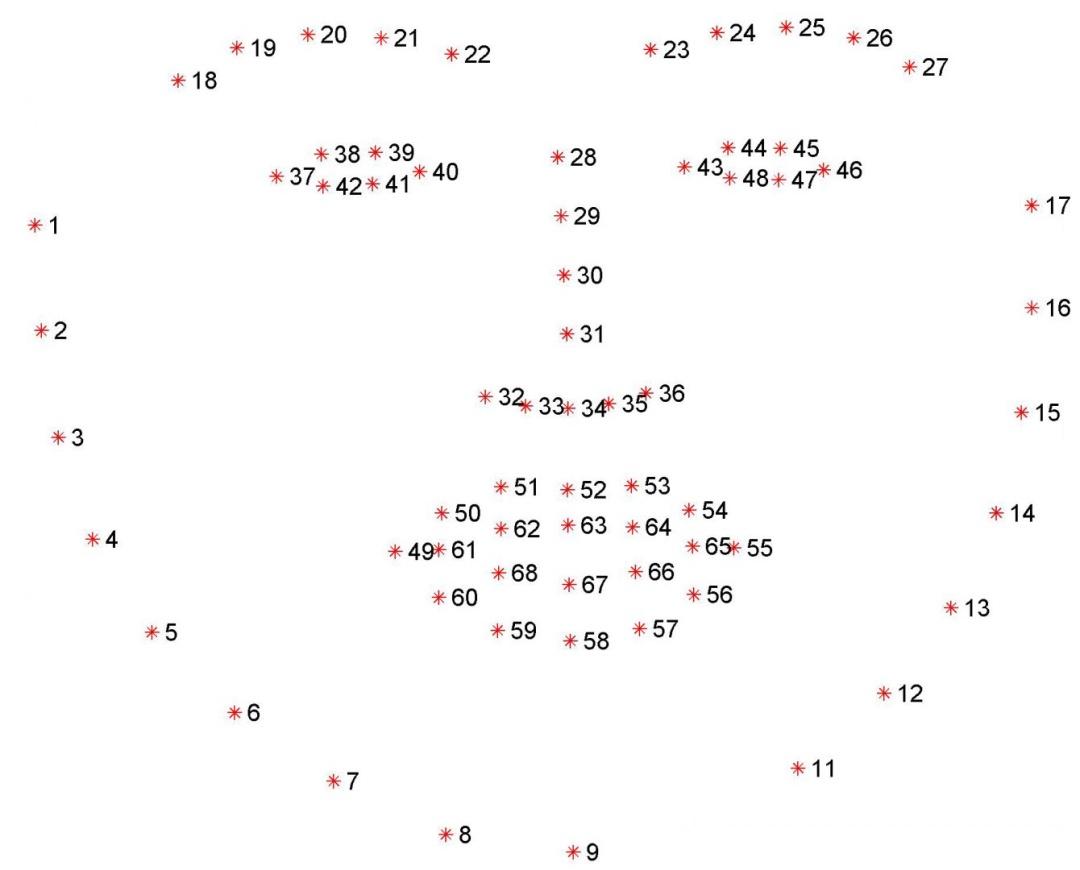

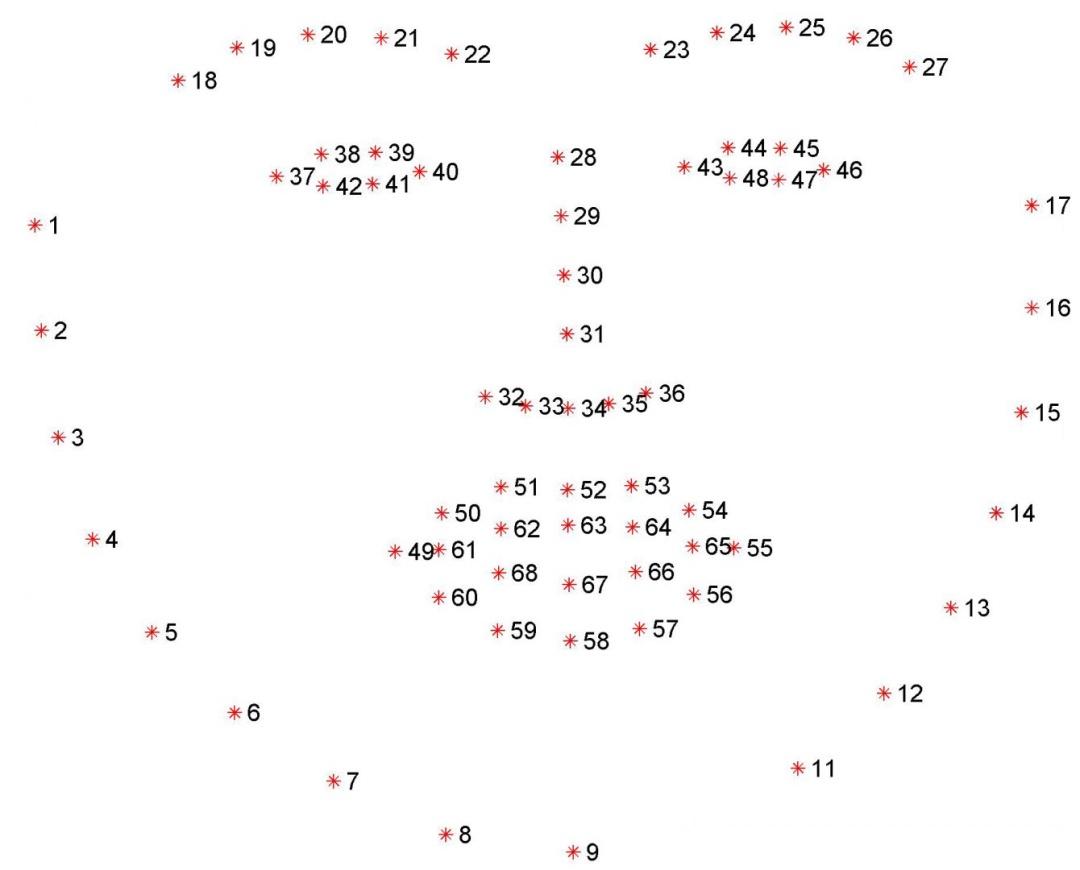

- Dlib has a facial landmark model, shape_predictor_68_face_landmarks.dat, which marks 68 feature points on a face. The model is used here to locate the facial features (eyes, nose, mouth, face outline) on each detected face.

- Facial feature points
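Landmark diagrams for the 68-point model are usually numbered from 1 to 68, whereas dlib's `full_object_detection::part()` takes a zero-based index (0 to 67). The sketch below shows one way to keep the two numberings apart. The helper names `partIndex` and `regionOf` are hypothetical, and the region boundaries follow the commonly used grouping of the 68-point markup rather than anything stated in this article.

```cpp
#include <stdexcept>
#include <string>

// Converts a one-based landmark number (1..68, as drawn in landmark diagrams)
// to the zero-based index used by a 68-element container such as
// dlib's full_object_detection::part(). Hypothetical helper.
inline int partIndex(int landmark) {
    if (landmark < 1 || landmark > 68) {
        throw std::out_of_range("landmark must be in 1..68");
    }
    return landmark - 1;
}

// Returns the facial region a one-based landmark number belongs to,
// using the common grouping of the 68-point markup.
inline std::string regionOf(int landmark) {
    partIndex(landmark);  // validates the range, throws if invalid
    if (landmark <= 17) return "jaw";
    if (landmark <= 27) return "eyebrows";
    if (landmark <= 36) return "nose";
    if (landmark <= 48) return "eyes";
    return "mouth";
}
```

With such a helper, landmark 37 in the diagram would be read as `shape.part(partIndex(37))`, that is, `shape.part(36)`.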

Blink detection principle

- The eye aspect ratio (EAR) indicates whether an eye is open or closed, and therefore whether a blink has occurred.

$$
EAR = \frac{\|p_2 - p_6\| + \|p_3 - p_5\|}{2\,\|p_1 - p_4\|}
$$

- The numerator sums the two vertical distances between the eyelid feature points, and the denominator is the horizontal distance between the eye corners. Because there are two vertical pairs but only one horizontal pair, the denominator is multiplied by 2 so that both directions carry the same weight.

- When the eyes are closed, the distances between p2 and p6, p3 and p5 will decrease, while the distance between p1 and p4 will remain basically unchanged. Therefore, by calculating the human eye aspect ratio EAR, the open and closed states of the eyes can be determined.
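The EAR formula can be sketched in a few lines of C++. This is a minimal, library-independent illustration: `Pt`, `dist`, and `eyeAspectRatio` are hypothetical names, and in the real program the six points would come from dlib's landmark output instead of hand-written coordinates.

```cpp
#include <array>
#include <cmath>

// Minimal 2-D point used only for this sketch.
struct Pt {
    double x;
    double y;
};

// Euclidean distance between two landmarks.
inline double dist(const Pt& a, const Pt& b) {
    return std::hypot(a.x - b.x, a.y - b.y);
}

// Eye aspect ratio for six landmarks stored in the order p1..p6:
// p1 and p4 are the eye corners, (p2, p6) and (p3, p5) are the
// upper/lower eyelid pairs.
inline double eyeAspectRatio(const std::array<Pt, 6>& p) {
    const double vertical = dist(p[1], p[5]) + dist(p[2], p[4]);
    const double horizontal = dist(p[0], p[3]);
    if (horizontal <= 0.0) {
        return 0.0;  // degenerate input: avoid dividing by zero
    }
    return vertical / (2.0 * horizontal);
}
```

For an eye whose corners are 3 units apart and whose lids are 2 units apart, the function returns 4/6 ≈ 0.67; narrowing the lid gap to 0.2 units gives about 0.07, which illustrates how the ratio drops as the eye closes.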

Principle of mouth opening detection

- Mouth-opening detection works on the same principle as blink detection. The mouth has many feature points, so any six suitable points can be chosen to calculate the mouth aspect ratio (MAR).

- The six feature points selected here are 49, 55, 51, 59, 53, and 57.

- Following the same method, calculate the human mouth aspect ratio MAR
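Once a per-frame ratio is available, open/close events still have to be counted. The sketch below mirrors the two-threshold logic that main.cpp applies to the mouth (closed below 0.62, open above 0.70, values in between ignored). The class name `OpenEventCounter` is hypothetical, and suitable thresholds depend on the landmarks and camera used.

```cpp
// Counts closed-to-open transitions of a per-frame ratio using two thresholds.
// Ratios between closedBelow and openAbove are treated as ambiguous and ignored,
// which keeps a ratio that hovers near one threshold from producing spurious events.
class OpenEventCounter {
public:
    OpenEventCounter(double closedBelow, double openAbove)
        : closedBelow_(closedBelow), openAbove_(openAbove) {}

    // Feeds one per-frame ratio and returns the number of events counted so far.
    int update(double ratio) {
        if (ratio < closedBelow_) {
            ++closedFrames_;  // still closed
        } else if (ratio > openAbove_) {
            if (closedFrames_ >= 1) {
                ++events_;  // opened after at least one closed frame
            }
            closedFrames_ = 0;
        }
        return events_;
    }

    int events() const { return events_; }

private:
    double closedBelow_;
    double openAbove_;
    int closedFrames_ = 0;
    int events_ = 0;
};
```

Constructed as `OpenEventCounter counter(0.62, 0.70);`, the counter would be fed the MAR of every frame through `counter.update(mar)`.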

Shaking head detection

- When shaking the head, the distance from the center point of the nose to the left and right cheeks will change. We can determine whether the head is shaking by calculating the distance change.

- For example, by calculating the distance change from the nose center point feature point 31 to the left cheek feature point 2 and the right cheek feature point 16, the shaking motion can be determined. Please refer to the source code for details.
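One possible way to turn the two nose-to-cheek distances into a shake count is sketched below. It is only an illustration under stated assumptions: the names `Turn`, `classifyTurn`, and `ShakeCounter` are hypothetical, the ratio threshold of 1.5 is an untuned placeholder, and the listing in this article computes the two distances without showing this decision step.

```cpp
// Which cheek landmark the nose is currently closer to.
enum class Turn { Center, NearLeft, NearRight };

// Classifies one frame from the nose-to-left-cheek and nose-to-right-cheek
// distances. ratioThreshold (> 1) is a hypothetical tuning value.
inline Turn classifyTurn(double noseToLeft, double noseToRight,
                         double ratioThreshold = 1.5) {
    if (noseToLeft <= 0.0 || noseToRight <= 0.0) {
        return Turn::Center;  // degenerate measurement, treat as no turn
    }
    if (noseToRight / noseToLeft > ratioThreshold) return Turn::NearLeft;
    if (noseToLeft / noseToRight > ratioThreshold) return Turn::NearRight;
    return Turn::Center;
}

// Counts one shake each time the head is seen turned to one side
// and later to the opposite side. Centered frames are ignored.
class ShakeCounter {
public:
    int update(Turn t) {
        if (t == Turn::Center) {
            return shakes_;
        }
        if (last_ != Turn::Center && t != last_) {
            ++shakes_;
        }
        last_ = t;
        return shakes_;
    }

    int shakes() const { return shakes_; }

private:
    Turn last_ = Turn::Center;
    int shakes_ = 0;
};
```

Using the ratio of the two distances instead of their absolute values keeps the check independent of how far the face is from the camera.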

Dlib and OpenCV library compilation

- Visual Studio 2015 and CMake need to be installed before compilation.

- Dlib library compilation

- Download the Dlib source code from the Dlib download address; the dlib-19.24.zip package can be used. The facial landmark model shape_predictor_68_face_landmarks.dat also needs to be downloaded.

- After downloading the source code, it needs to be compiled.

- First, add the following line at the end of the dlib/config.h file in the Dlib source tree:

```cpp
#define DLIB__CMAKE_GENERATED_A_CONFIG_H_FILE
```

- Then create the build_x86 folder under the Dlib source code project, enter the folder, and execute the command

```shell
cmake -G "Visual Studio 14 2015" ..
cmake --build ./ --config Release
```

- After compilation is completed, the static library file dlib19.24.0_release_32bit_msvc1900.lib will be generated under build_x86\dlib\Release.

- OpenCV library compilation

- Download the OpenCV source code from the OpenCV download address by selecting the source package (Sources) of a version. Version 4.5.0 is used here.

- After downloading the source code, it needs to be compiled. Under the OpenCV source code project, create the build_x86 folder, enter the folder, and execute the command

```shell
cmake -G "Visual Studio 14 2015" ..
cmake --build ./ --config Release
```

- After compilation is completed, the .lib files are generated under build_x86/lib/Release (with OpenCV's default shared build these are the import libraries for the DLLs), and the dynamic library files are generated under build_x86/bin/Release.

Create project

- First create a facec directory

- Create a dlib-19.24 directory in the facec directory, and copy the dlib directory in the dlib source code to this directory.

- Create an opencv-4.5.0 directory in the facec directory, and copy the include and modules directories in the OpenCV source code to this directory.

- Create a lib directory in the facec directory, and copy the compiled dlib static library and opencv static library to this directory.

- Create a src directory in the facec directory. The src directory contains the main.cpp source code.

- Create a CMakeLists.txt file in the facec directory.

- The directory structure is as follows

```
facec
├── dlib-19.24
│   └── dlib
├── opencv-4.5.0
│   ├── include
│   └── modules
├── lib
├── src
│   └── main.cpp
└── CMakeLists.txt
```

Compile project

- Create the build_x86 directory in the facec directory and enter the directory to execute

```shell
cmake -G "Visual Studio 14 2015" ..
cmake --build ./ --config Release
```

- The executable program is generated under bin\Release. Copy the OpenCV dynamic libraries and the facial landmark model shape_predictor_68_face_landmarks.dat into that directory, then double-click the executable to run it.

Related source code

- main.cpp source code

```cpp
#include <dlib/opencv.h>
#include <dlib/image_processing/frontal_face_detector.h>
#include <dlib/image_processing/render_face_detections.h>
#include <dlib/image_processing.h>
#include <dlib/gui_widgets.h>
#include <opencv2/opencv.hpp>
#include <cmath>
#include <cstdio>
#include <iostream>
#include <vector>

using namespace dlib;
using namespace std;

// Euclidean distance between two landmark points.
static float pointDistance(const dlib::point& a, const dlib::point& b)
{
    const double dx = static_cast<double>(a.x() - b.x());
    const double dy = static_cast<double>(a.y() - b.y());
    return static_cast<float>(std::sqrt(dx * dx + dy * dy));
}

// Aspect ratio (A + B) / (2 * C): C joins `left` and `right`,
// A and B join the two up/down pairs.
static float aspectRatio(const full_object_detection& s,
                         int left, int right,
                         int leftUp, int leftDown,
                         int rightUp, int rightDown)
{
    const float a = pointDistance(s.part(leftUp), s.part(leftDown));
    const float b = pointDistance(s.part(rightUp), s.part(rightDown));
    const float c = pointDistance(s.part(left), s.part(right));
    return (a + b) / (2 * c);
}

int main()
{
    try
    {
        // Open the camera
        cv::VideoCapture cap(0, cv::CAP_DSHOW);
        if (!cap.isOpened())
        {
            printf("open VideoCapture failed.\n");
            return 1;
        }
        printf("open VideoCapture success.\n");

        image_window win;

        // Load face detection and pose estimation models.
        frontal_face_detector detector = get_frontal_face_detector();
        shape_predictor pose_model;
        deserialize("shape_predictor_68_face_landmarks.dat") >> pose_model;

        int mouthIndex = 0;     // number of mouth openings
        int leyeIndex = 0;      // number of left-eye blinks
        int reyeIndex = 0;      // number of right-eye blinks
        int counter_mouth = 0;  // consecutive frames with the mouth closed
        int counter_leye = 0;   // consecutive frames with the left eye closed
        int counter_reye = 0;   // consecutive frames with the right eye closed

        // Grab and process frames until the main window is closed by the user.
        while (!win.is_closed())
        {
            // Grab a frame
            cv::Mat frame;
            if (!cap.read(frame))
            {
                break;
            }
            cv_image<bgr_pixel> cimg(frame);

            // Save the current frame as a picture
            cv::Mat imgPng = dlib::toMat(cimg);
            cv::imwrite("face.png", imgPng);

            // Detect faces
            std::vector<rectangle> faces = detector(cimg);

            // Find the pose of each face.
            std::vector<full_object_detection> shapes;
            printf("faces number is %d\n", static_cast<int>(faces.size()));
            for (unsigned long i = 0; i < faces.size(); ++i)
            {
                shapes.push_back(pose_model(cimg, faces[i]));
                const full_object_detection& shape = shapes.back();

                // Note: part() is zero-based (0..67), so part(37) is landmark 38
                // in a one-based diagram. The indices and thresholds below are kept
                // as in the original program, where they appear to have been tuned
                // together; if one is changed, re-tune the other.

                // Eye aspect ratios
                float leyeEVR = aspectRatio(shape, 37, 40, 38, 42, 39, 41);  // left eye
                float reyeEVR = aspectRatio(shape, 43, 46, 44, 48, 45, 47);  // right eye

                // Mouth aspect ratio
                float mouthEVR = aspectRatio(shape, 49, 55, 51, 59, 53, 57);

                // Head shaking: distances from the nose center to both face edges
                dlib::point face_left = shape.part(2);    // left face edge
                dlib::point face_right = shape.part(16);  // right face edge
                dlib::point face_nose = shape.part(31);   // nose center
                float lfaceLength = pointDistance(face_nose, face_left);
                float rfaceLength = pointDistance(face_nose, face_right);
                (void)lfaceLength;  // computed here but not used further in this listing
                (void)rfaceLength;

                // Record the number of mouth openings
                if (mouthEVR < 0.62)
                {
                    // Mouth closed
                    counter_mouth += 1;
                }
                else if (mouthEVR > 0.70)
                {
                    // Mouth open
                    if (counter_mouth >= 1)
                    {
                        mouthIndex += 1;
                    }
                    counter_mouth = 0;
                }
                else
                {
                    // Critical interval: unstable, so no detection is performed.
                }

                // Display the number of mouth openings
                char mouthBuf[100] = { 0 };
                snprintf(mouthBuf, sizeof(mouthBuf), "mouth count: %d", mouthIndex);
                cv::putText(frame, mouthBuf, cv::Point(0, 20), cv::FONT_HERSHEY_SIMPLEX, 1, cv::Scalar(0, 0, 255), 2);

                // Record the number of blinks of the left eye
                if (leyeEVR > 2.9)
                {
                    // Eye closed
                    counter_leye += 1;
                }
                else
                {
                    // Eye open
                    if (counter_leye >= 1)
                    {
                        leyeIndex += 1;
                    }
                    counter_leye = 0;
                }

                // Record the number of blinks of the right eye
                if (reyeEVR > 5.0)
                {
                    // Eye closed
                    counter_reye += 1;
                }
                else
                {
                    // Eye open
                    if (counter_reye >= 1)
                    {
                        reyeIndex += 1;
                    }
                    counter_reye = 0;
                }

                // Use the smaller of the two blink counts
                if (reyeIndex > leyeIndex)
                {
                    reyeIndex = leyeIndex;
                }

                // Display the number of blinks
                char eyeBuf[100] = { 0 };
                snprintf(eyeBuf, sizeof(eyeBuf), "eye count: %d", reyeIndex);
                cv::putText(frame, eyeBuf, cv::Point(0, 45), cv::FONT_HERSHEY_SIMPLEX, 1, cv::Scalar(0, 0, 255), 2);
            }

            // Display it all on the screen
            win.clear_overlay();
            win.set_image(cimg);
            win.add_overlay(render_face_detections(shapes));
        }
    }
    catch (serialization_error& e)
    {
        cout << endl << e.what() << endl;
    }
    catch (exception& e)
    {
        cout << e.what() << endl;
    }
}
```

- CMakeLists.txt file contents

```cmake
cmake_minimum_required (VERSION 3.5)

project(faceRecongize)

MESSAGE(STATUS "PROJECT_SOURCE_DIR " ${PROJECT_SOURCE_DIR})
SET(SRC_LISTS ${PROJECT_SOURCE_DIR}/src/main.cpp)
set(EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin)

# Configure header file directories
include_directories(${PROJECT_SOURCE_DIR}/dlib-19.24)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/core/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/calib3d/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/features2d/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/flann/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/dnn/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/highgui/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/imgcodecs/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/videoio/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/imgproc/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/ml/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/objdetect/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/photo/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/stitching/include)
include_directories(${PROJECT_SOURCE_DIR}/opencv-4.5.0/modules/video/include)

# Set not to display the command box
if(MSVC)
    #set(CMAKE_EXE_LINKER_FLAGS "${CMAKE_EXE_LINKER_FLAGS} /SUBSYSTEM:WINDOWS /ENTRY:mainCRTStartup")
endif()

# Add library files
set(PRO_OPENCV_LIB
    ${PROJECT_SOURCE_DIR}/lib/opencv_video450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_core450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_videoio450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_calib3d450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_dnn450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_features2d450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_flann450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_highgui450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_gapi450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_imgcodecs450.lib
    ${PROJECT_SOURCE_DIR}/lib/opencv_imgproc450.lib
)
set(PRO_DLIB_LIB ${PROJECT_SOURCE_DIR}/lib/dlib19.24.0_release_32bit_msvc1900.lib)

# Generate executable program
ADD_EXECUTABLE(faceRecongize ${SRC_LISTS})

# Link library files
TARGET_LINK_LIBRARIES(faceRecongize ${PRO_OPENCV_LIB} ${PRO_DLIB_LIB})
```