The code is shown below:

import json  # Parse the JSON returned by the search interface
import os  # Create the storage folders
import re  # Regular expression module
import sys
import time  # Limit crawler speed

import openpyxl
import pandas as pd  # Read the Excel file
import requests  # HTTP requests, essential for crawlers
from openpyxl import Workbook, load_workbook
from openpyxl.drawing.image import Image
from tqdm import tqdm  # Progress bar

# Custom directory to store log files
log_path = os.getcwd() + "/FailLogs/"
if not os.path.exists(log_path):
    os.makedirs(log_path)

def filterHtmlTag(htmlstr):
    '''
    Filter the tags out of an HTML string
    '''
    # Normalize line breaks
    s = htmlstr.replace('\r\n', '\n')
    s = s.replace('\r', '\n')

    # Rules
    re_cdata = re.compile(r'//<!\[CDATA\[[^>]*//\]\]>', re.I)  # CDATA
    re_script = re.compile(r'<\s*script[^>]*>[\S\s]*?<\s*/\s*script\s*>', re.I)  # script
    re_style = re.compile(r'<\s*style[^>]*>[\S\s]*?<\s*/\s*style\s*>', re.I)  # style
    re_br = re.compile(r'<br\s*/?>', re.I)  # <br> becomes a line break
    re_p = re.compile(r'</p>', re.I)  # </p> becomes a line break
    re_h = re.compile(r'<[!|/]?\w+[^>]*>', re.I)  # Any other HTML tag
    re_comment = re.compile(r'<!--[^>]*-->')  # HTML comment
    re_hendstr = re.compile(r'^\s*|\s*$')  # Leading and trailing whitespace
    re_lineblank = re.compile(r'[\t\f\v ]+')  # Spaces and tabs
    re_linenum = re.compile(r'\n+')  # Runs of consecutive line breaks

    # Apply the rules
    s = re_cdata.sub('', s)  # Remove CDATA
    s = re_script.sub('', s)  # Remove script
    s = re_style.sub('', s)  # Remove style
    s = re_br.sub('\n', s)  # Turn <br> into a line break
    s = re_p.sub('\n', s)  # Turn </p> into a line break
    s = re_h.sub('', s)  # Remove HTML tags
    s = re_comment.sub('', s)  # Remove HTML comments
    s = re_lineblank.sub('', s)  # Remove spaces and tabs
    s = re_linenum.sub('\n', s)  # Keep a single line break per run
    s = re_hendstr.sub('', s)  # Remove leading and trailing whitespace

    # Replace character entities
    s = replaceCharEntity(s)
    return s

def replaceCharEntity(htmlStr):
    CHAR_ENTITIES = {'nbsp': ' ', '160': ' ',
                     'lt': '<', '60': '<',
                     'gt': '>', '62': '>',
                     'amp': '&', '38': '&',
                     'quot': '"', '34': '"'}
    re_charEntity = re.compile(r'&#?(?P<name>\w+);')
    sz = re_charEntity.search(htmlStr)
    while sz:
        # Entity name without the leading "&" and trailing ";", e.g. "&gt;" gives "gt"
        key = sz.group('name')
        try:
            htmlStr = re_charEntity.sub(CHAR_ENTITIES[key], htmlStr, 1)
        except KeyError:
            # Unknown entity: replace it with an empty string
            htmlStr = re_charEntity.sub('', htmlStr, 1)
        sz = re_charEntity.search(htmlStr)
    return htmlStr

# Extract the Chinese characters from a string
def chinese(words):
    return ''.join(re.findall('[\u4e00-\u9fa5]', words))

# Get the first 10 Baidu image search results
def get_img_url(keyword):
    """Send a request and read the image links from the interface data"""
    # Interface link
    url = 'https://image.baidu.com/search/acjson?'
    # Request header to simulate a browser
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36'}
    # Query parameters sent to the interface
    params = {
        'tn': 'resultjson_com',
        'logid': '6918515619491695441',
        'ipn': 'rj',
        'ct': '201326592',
        'is': '',
        'fp': 'result',
        'queryWord': keyword,
        'word': keyword,
        'cl': '2',
        'lm': '-1',
        'ie': 'utf-8',
        'oe': 'utf-8',
        'adpicid': '',
        'st': '-1',
        'z': '',
        'ic': '',
        'hd': '',
        'latest': '',
        'copyright': '',
        's': '',
        'se': '',
        'tab': '',
        'width': '',
        'height': '',
        'face': '0',
        'istype': '2',
        'qc': '',
        'nc': '1',
        'fr': '',
        'expermode': '',
        'force': '',
        'cg': 'girl',
        'pn': 1,
        'rn': '10',
        'gsm': '1e',
    }
    # List used to store image links
    img_url_list = []
    # Cleaned response text, kept so it can be written to the log on failure
    pattern = ''
    try:
        # Send the request with the headers and query parameters
        response = requests.get(url=url, headers=headers, params=params, timeout=10)
        # Set encoding format
        response.encoding = 'utf-8'
        pattern = filterHtmlTag(response.text)
        json_dict = json.loads(pattern)
        # The list one level above the 10 pictures
        data_list = json_dict['data']
        # Delete the trailing empty entry in the list
        del data_list[-1]
        for i in data_list:
            img_url_list.append(i['thumbURL'])
        return img_url_list
    except Exception as e:
        # Log the response and the error under FailLogs/<keyword>.txt
        with open(log_path + keyword.lstrip() + ".txt", "w", encoding="utf-8") as txt_file:
            txt_file.write(pattern + "\nReason the response could not be parsed ========> " + repr(e))
        return img_url_list

# Download pictures to local disk
def get_down_img(img_url_list, dirs):
    # Create a folder under the current path to store the images
    if not os.path.exists(os.getcwd() + "/" + dirs):
        os.makedirs(dirs)
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.69 Safari/537.36'}
    # Image number
    n = 0
    for img_url in img_url_list:
        # Build the storage path and file name for this picture
        img_path = os.getcwd() + "/" + dirs + "/" + str(n) + '.jpg'
        # Download the image
        try:
            res = requests.get(url=img_url, headers=headers, timeout=10)
            if 200 == res.status_code:
                with open(img_path, 'wb') as file:
                    file.write(res.content)
        except Exception as e:
            print(f'Failed to download file: {img_path}')
            print(repr(e))
        # Increment the picture number
        n = n + 1

if __name__ == '__main__':
    # Read the product names from the Excel file
    excel_path = 'Hi Purchase Maintenance Main Picture.xlsx'
    df1 = pd.read_excel(excel_path, sheet_name='Sheet1')  # Read the sheet
    list1 = []  # Product names
    for j in df1['Product name (required)']:
        list1.append(str(j))
    # No deduplication: each row is looked up by its content
    list2 = list1
    print("There are " + str(len(list2)) + " entries in total")
    # Progress bar, one step per entry
    pbar = tqdm(total=len(list2))
    # Open the same Excel file with openpyxl
    wb = openpyxl.load_workbook(excel_path)
    # Use the active worksheet
    ws = wb.active
    # 1. Loop over the keywords
    for i in range(len(list2)):
        keyword = list2[i].lstrip()
        # 2. Get the image links for the keyword
        img_url_list = get_img_url(keyword)
        if len(img_url_list) == 0:
            print("Keyword: " + keyword + " download failed!")
        else:
            # Download the images into a folder named after the keyword
            get_down_img(img_url_list, keyword)
            first_img = os.getcwd() + "/" + keyword + "/" + '0.jpg'
            if os.path.exists(first_img):
                # Create the Image object and adjust its size
                img = Image(first_img)
                img.width = 150
                img.height = 100
                # Index of the first row whose product name matches
                names = df1['Product name (required)'].astype(str)
                row_index = df1[names.isin([list2[i]])].index.tolist()[0]
                # Insert the picture into column G of that row
                # (+2: one for the header row, one for 1-based Excel rows)
                ws.add_image(img, 'G' + str(row_index + 2))
                ws.row_dimensions[i + 2].height = 100
                ws.column_dimensions['G'].width = 50
        pbar.update(1)
    pbar.close()
    wb.save("Hi Purchase Maintenance Main Picture_Picture Version.xlsx")
    print("All entries downloaded!")

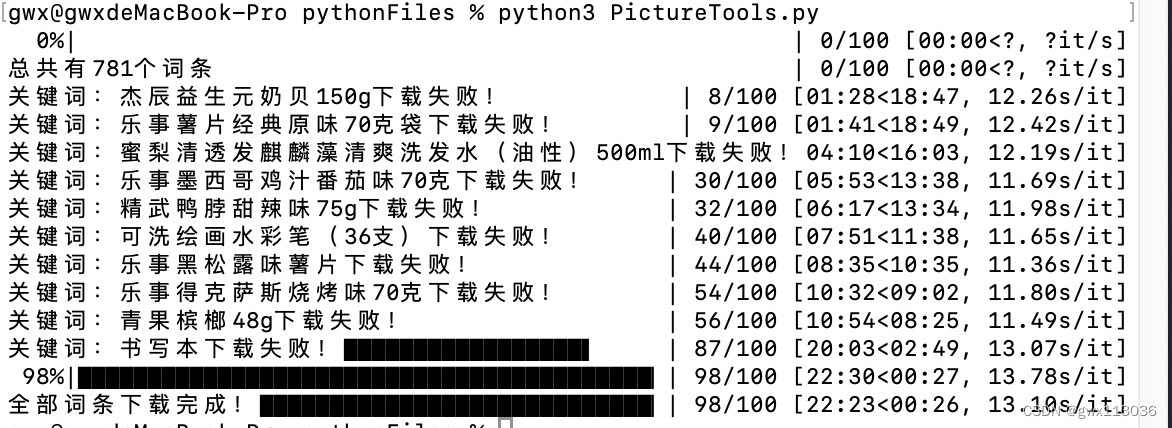

operation result:
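
The `get_img_url` function above removes the trailing empty entry with `del data_list[-1]` and then reads `thumbURL` from every remaining item, so a single item without that key sends the whole keyword to the failure log. A slightly more defensive variant is sketched below. It assumes the response keeps the `{"data": [{"thumbURL": ...}, ..., {}]}` shape the script relies on; the helper name `extract_thumb_urls` and the sample payload are hypothetical and only serve the illustration.

```python
import json


def extract_thumb_urls(json_text):
    """Return every non-empty 'thumbURL' found in the top-level 'data' list."""
    payload = json.loads(json_text)
    urls = []
    for item in payload.get('data', []):
        # Skip the empty trailing entry and any item without a thumbnail link
        if isinstance(item, dict) and item.get('thumbURL'):
            urls.append(item['thumbURL'])
    return urls


# Hypothetical payload that imitates the assumed response shape
sample = json.dumps({
    'data': [
        {'thumbURL': 'https://example.com/a.jpg'},
        {'thumbURL': 'https://example.com/b.jpg'},
        {},
    ]
})
print(extract_thumb_urls(sample))
```

Filtering on the key instead of on the position means the result no longer depends on the empty entry always being last.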

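
The `replaceCharEntity` helper in the listing decodes a handful of entities by hand. As a possible alternative (a swap-in, not the method used in the script), the standard library's `html.unescape` decodes all named and numeric character references. The wrapper name `decode_entities` is hypothetical. Note that `html.unescape` turns `&nbsp;` into a non-breaking space (U+00A0), so the sketch maps it to a plain space to mimic the table used above.

```python
import html


def decode_entities(text):
    """Decode HTML character references, mapping non-breaking spaces to plain spaces."""
    return html.unescape(text).replace('\xa0', ' ')


print(decode_entities('Tom &amp; Jerry&nbsp;&lt;3 &#34;quoted&#34;'))
# prints: Tom & Jerry <3 "quoted"
```

Unlike the hand-written loop, unknown references are left in the text instead of being deleted, which may or may not be what the crawler needs.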